Study on evaluation standard of uncertainty of design wave height calculation model*

2021-07-29

Baiyu CHEN, Yi KOU, Fang WU, Liping WANG, Guilin LIU

1 College of Engineering, University of California Berkeley, Berkeley 94720, USA

2 Dornsife College, University of Southern California, Los Angeles 90007, USA

3 Statistics and Applied Probability, University of California Santa Barbara, Santa Barbara 93106, USA

4 School of Mathematical Sciences, Ocean University of China, Qingdao 266071, China

5 College of Engineering, Ocean University of China, Qingdao 266071, China

Abstract The accurate calculation of marine environmental design parameters depends on the probability distribution model, and different distribution models often give different results. It is therefore important to determine which distribution model is more stable and reasonable when extrapolating the return level for the sea area under study. In this paper, we construct a method for evaluating the overall uncertainty of the calculation results, and a measure of the uncertainty of the model used to derive the design parameters, by incorporating the influence of sample information (sample size, degree of dispersion, and sampling error) on the information entropy of the model. Results show that the information entropy increases with both the sample size and the degree of dispersion. Within the same group of data, the maximum entropy distribution model has the lowest overall uncertainty, while the Gumbel distribution model has the largest. In other words, the maximum entropy distribution model is well suited to the accurate calculation of marine environmental design parameters.

Keywords: uncertainty; information entropy; extreme value distribution model

1 RESEARCH BACKGROUND

The calculation of marine environmental design parameters has important applications in marine engineering and coastal disaster prevention. The design and construction of marine structures and the prediction and early warning of marine disasters all require precise calculation of multi-year return levels. Such statistical analysis relies on a probability distribution that matches the actual problem, which is necessary for accurately calculating the design parameters of the marine environment. In the past, the selection of a design parameter estimation model under marine environmental conditions has been a largely subjective process. The commonly used methods include the probability paper method and the curve-fitting method. Although several models may pass a hypothesis test on the same group of measured data, their calculated results differ in value. More often than not, it is unclear which set of results should be used as the design standard, even though this choice has great practical engineering significance.

The accuracy of marine environmental design parameter estimation is closely related to the distribution function used to describe the statistical characteristics of the measured data, and current research worldwide on estimation models mainly focuses on determining the parameters within the model. In fact, this is only one aspect of the uncertainty of a marine environmental parameter estimation model. The uncertainty of the parameters to be determined and of the data sampling threshold propagates to the overall estimation results, and thus affects the design and construction of marine engineering. Therefore, it is necessary to study the uncertainty of the return level extrapolated by the model, find a suitable measure to evaluate the uncertainty of the model, and then find a distribution model structure that reflects the statistical characteristics of marine environmental elements more realistically.

At present, there are relatively few studies on standards for evaluating the overall uncertainty of theoretical models for marine environmental design parameters; existing studies mainly focus on improving different theoretical models and on the parameters determined within them. Uncertainty can be thought of as the degree of doubt about the true value: it is expressed as a range that contains the true value and characterizes the dispersion reasonably assigned to the measured value (Deng et al., 2019; Liu et al., 2019d; Nabipour et al., 2020). Generally, the scope of a model is expanded and its parameter estimation and prediction accuracy are improved; this approach has been applied in many modeling fields, such as electronic engineering, computational problems (Chen et al., 2017a, 2019a, b, 2020), and the refinement of biological structures (Wang et al., 2013, 2016, 2017; Liu et al., 2019a). The larger the uncertainty range, the greater the uncertainty of the data. The refinement generally accounts for the observation error, the random error, and so on. For the same set of data and the same model, different sampling will introduce different uncertainty.

The uncertainty in the choice of the distribution model must be treated carefully in the process of design parameter extrapolation; in addition, data instability, parameter estimation error, and other factors introduce internal uncertainty. This part of the uncertainty has often been overlooked in previous statistical analyses, although it should be considered in the analysis of overall system uncertainty (Zeng et al., 2018; Liu et al., 2020a, b).

Based on information entropy, this paper analyzes the data volatility, the degree of dispersion, the sampling error of the sample data, and the instability of the overall calculation results caused by parameter estimation errors, and constructs an evaluation criterion for the overall uncertainty of a distribution model. Under this criterion, the overall uncertainty of different models fitted to the same measured data can be compared. This theoretical work provides a new criterion and basis for the selection and evaluation of models.

2 STUDY ON CRITERIA OF UNCERTAINTY EVALUATION

Information entropy is a measure of the uncertainty of a random event and of the amount of information; it represents the overall information measure of a source in the average sense. The higher the degree of disorder, the higher the information entropy. The amount of information can be expressed by the amount of uncertainty that is eliminated, and the magnitude of the uncertainty of a random event can be described by a probability distribution function.

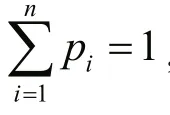

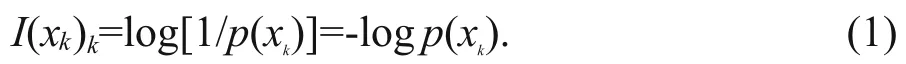

The information entropy of a random variable $X$ (herein referred to as entropy) is defined as the mathematical expectation of the random variable $I(X)$ in probability space, and is recorded as $H(X)$. It is a measure of the uncertainty of the system describing the probability, that is, our measure of the “unknown” of the probability structure of the system.

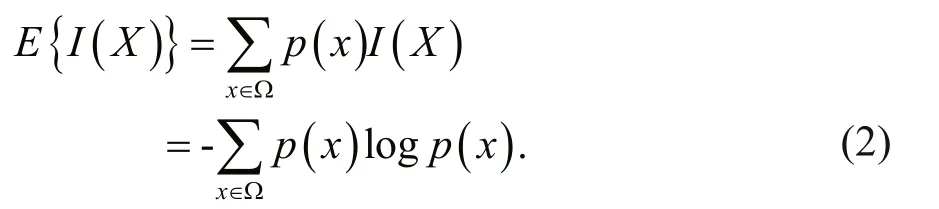

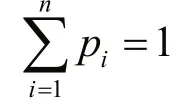

Lemma For a discrete system of $n$ events, the information entropy $H_n$ can be expressed as:

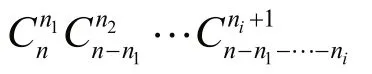

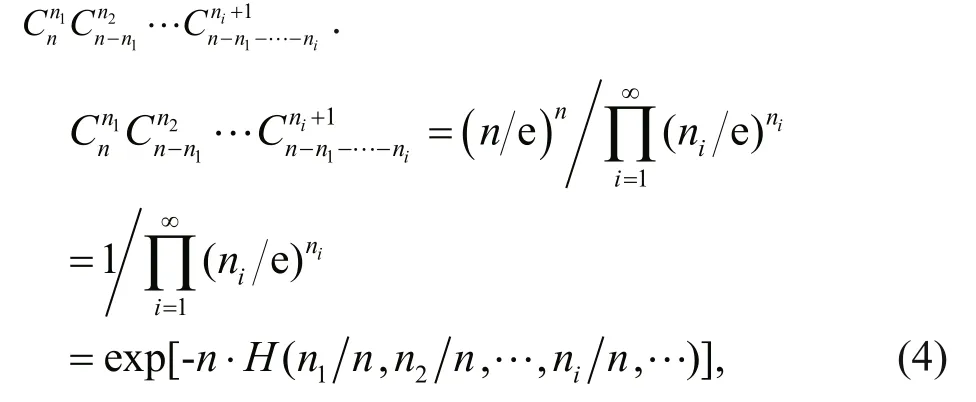

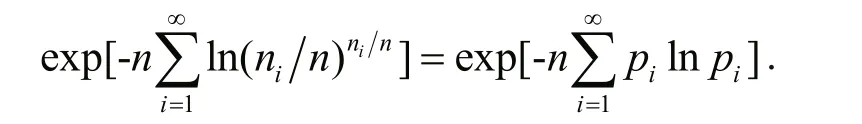

Proof The theoretical distribution model can be abstracted by the information entropy of the specific corresponding events. The following derivation takes the number of typhoons as an example:

Denote $n_i/n = p_i$ as the frequency of years in which $i$ typhoons occur during $n$ years; therefore,

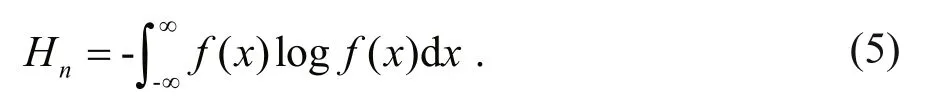
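As a minimal sketch of the frequency-based entropy used in this proof, the following computes the entropy of the empirical frequencies $n_i/n$ from yearly typhoon counts. The record `typhoons_per_year` and the function name `entropy_from_counts` are hypothetical and serve only as an illustration; they are not data or code from this study.

```python
import math
from collections import Counter


def entropy_from_counts(counts):
    """Shannon entropy (natural log) of the empirical frequencies n_i / n."""
    n = sum(counts)
    return -sum((c / n) * math.log(c / n) for c in counts if c > 0)


# Hypothetical record: number of typhoons observed in each of 10 years.
typhoons_per_year = [2, 3, 2, 1, 4, 2, 3, 3, 2, 1]

# n_i = number of years in which exactly i typhoons occurred.
years_by_count = Counter(typhoons_per_year)

H = entropy_from_counts(list(years_by_count.values()))
print(f"H = {H:.4f} nats")
```

Only the frequencies enter the result: relabelling the typhoon counts leaves the entropy unchanged.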

If the events of the system can be described by a continuous random variable $X$, the information entropy $H_n$ is:

where $f(x)$ is the probability density function of $X$. The natural logarithm is used in the calculation of entropy.
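For a continuous variable, the entropy integral $-\int f(x)\ln f(x)\,dx$ can be approximated numerically. The sketch below does so for a Gumbel density and compares the result with the known closed form $\ln\beta + \gamma + 1$, where $\gamma$ is the Euler-Mascheroni constant. The parameters `mu` and `beta`, the integration limits, and the function names are assumptions chosen for illustration.

```python
import math

EULER_GAMMA = 0.5772156649015329


def gumbel_pdf(x, mu, beta):
    """Gumbel (type I extreme value) density with location mu and scale beta."""
    z = (x - mu) / beta
    return math.exp(-(z + math.exp(-z))) / beta


def differential_entropy(pdf, lo, hi, steps=100_000):
    """Midpoint-rule approximation of -integral of f(x) ln f(x) over [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        f = pdf(lo + (k + 0.5) * dx)
        if f > 0.0:  # skip points where the density underflows to zero
            total -= f * math.log(f) * dx
    return total


mu, beta = 3.0, 0.8  # hypothetical location and scale
H_numeric = differential_entropy(
    lambda x: gumbel_pdf(x, mu, beta), mu - 10 * beta, mu + 40 * beta
)
H_closed = math.log(beta) + EULER_GAMMA + 1.0
print(H_numeric, H_closed)
```

The location `mu` does not appear in the closed form, which illustrates that entropy depends on the shape of the distribution and not on where the values lie.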

It can be seen from Eqs.3 & 5 that information entropy is a function of the distribution of the random variable $X$. It does not depend on the actual values taken, but only on their probability distribution. In other words, each probability distribution corresponds to a unique information entropy value.

Information entropy is a representation of uncertainty. When all messages are equally probable, we cannot judge which message will appear. In this case we have the greatest uncertainty about the whole system, and the information entropy takes its maximum value. Conversely, if an event is certain, its probability of occurrence is 1 and the probability of every other event is 0. In this case our uncertainty about the whole system is the smallest, and the information entropy takes its minimum value. From this point of view, entropy is largest for equiprobable events and zero when the outcome is determined. This indicates that information entropy is an appropriate measure of uncertainty. Chen et al. (2017a) further point out the specific link between information entropy and uncertainty:
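The two limiting cases described above can be checked directly. In this short sketch, the three probability vectors are illustrative assumptions; the uniform one attains $\ln n$ and the degenerate one gives zero.

```python
import math


def shannon_entropy(probs):
    """Discrete entropy -sum p ln p, with the convention 0 ln 0 = 0."""
    return -sum(p * math.log(p) for p in probs if p > 0)


n = 4
uniform = [1.0 / n] * n          # all events equally likely
skewed = [0.7, 0.1, 0.1, 0.1]    # one event dominates
certain = [1.0, 0.0, 0.0, 0.0]   # one event is inevitable

for name, probs in [("uniform", uniform), ("skewed", skewed), ("certain", certain)]:
    print(name, shannon_entropy(probs))
```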

where $H$ represents information entropy and $U$ represents uncertainty. Equation 6 provides a method for calculating uncertainty from information entropy. When an uncertainty analysis is needed, to some extent only the information entropy has to be studied to reflect the uncertainty of the research object.

At present, research on the uncertainty of a system model is often limited to determining which type of distribution model is more suitable for the problem. In fact, the applicability of the model is related to many inputs, such as the number of data samples, the degree of dispersion, and the sampling error of the model parameter estimators. If the uncertainty carried by this information is included in the overall uncertainty of the model, the result is more realistic, accurate, and comprehensive.

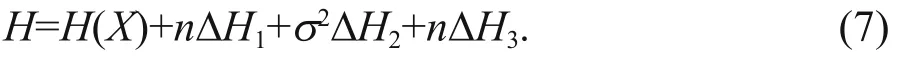

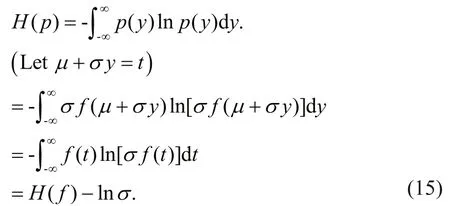

This paper connects the number of data, the degree of dispersion, and the sampling error of the estimator to uncertainty through information entropy, and gives the formula for estimating the information entropy of the model:

In the formula, $H(X)$ is the information entropy of the estimation model itself, $n$ is the number of sample data, $\sigma^2$ is the variance of the sample, and $\Delta H_1$, $\Delta H_2$, and $\Delta H_3$ are the changes in information entropy when the number, variance, and error change by one unit, respectively.

In uncertainty studies, if the random variables used in previous studies can be replaced with a family of random variables, adding a time dimension to the spatial one, then it becomes possible to discuss the uncertainty over different periods and the probability that this uncertainty influences future uncertainty.

2.1 Study of data sample capacity on model stability

To consider the sample size in the study of model uncertainty is to consider how the uncertainty of the model changes when the number of samples increases or decreases. When the number of samples increases, the information contained in them increases correspondingly, and thus the entropy, as the measure of information, also shows an upward trend. It is difficult to find the increase of entropy with the number of samples directly. Under the maximum entropy principle, the distribution with the largest uncertainty under the given constraints is selected as the distribution of the random variable. The maximum entropy distribution is therefore the most random distribution from the perspective of uncertainty (Chen et al., 2017b; Deng et al., 2020). The extremum property of entropy and the maximum entropy principle tell us that there is always a distribution that maximizes the information entropy among the candidate distributions (Liu et al., 2019b, 2020). We therefore consider the difference between the entropy of a given distribution and that of the maximum entropy distribution: if this difference decreases as the number of samples increases, then the entropy of the given distribution increases with the number of samples.

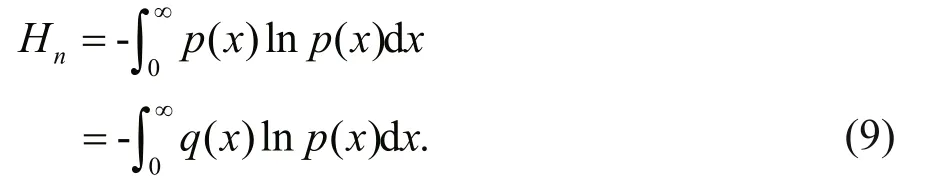

Let the continuous random variable $X$ reach the maximum entropy $H_p$ when the probability density function is $p(x)$, and let the entropy be $H_q$ when the probability density function is some $q(x)$ other than $p(x)$. Then the difference between the two entropies, $H_p - H_q$, represents the information difference between the two density functions, that is, the information change difference. $p(x)$ is called the maximum entropy density function.

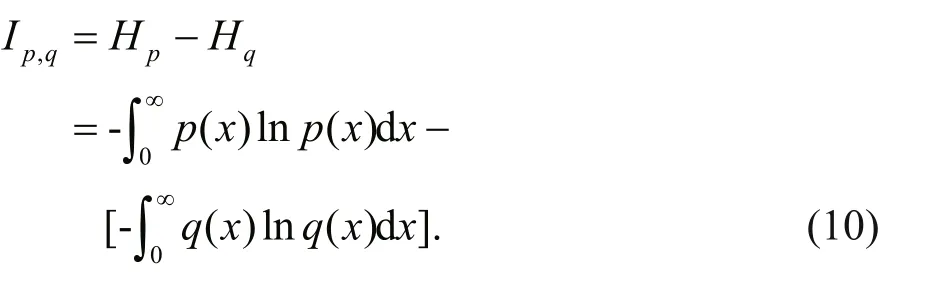

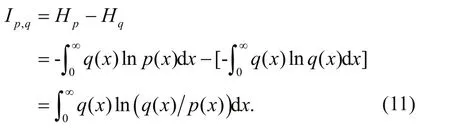

Theorem Let the continuous random variable $X$ $(x_1, x_2, \cdots, x_n)$ reach the maximum entropy $H_p$ when the probability density function is $p(x)$, and let the entropy be $H_q$ for any probability density function $q(x)$ other than $p(x)$. Then the information change difference is:

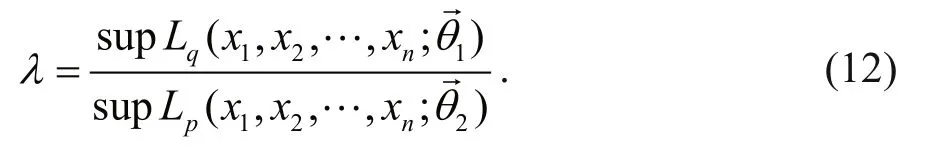

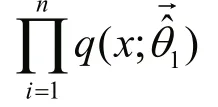

where $n$ is the number of samples of the continuous random variable and $\lambda$ is the ratio of the likelihood function of $q(x)$ to that of the maximum entropy density function $p(x)$.

To prove the above theorem, the following lemma is first introduced.

Lemma Under the same constraint conditions, let $p(x)$ be the probability density function that maximizes the entropy $H_n$, and let $q(x)$ be a probability density function that also satisfies the constraint conditions. Then we have:

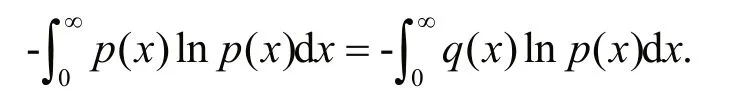

Theorem proof The information change difference is:

According to the Lemma, under the same constraints for $p(x)$ and $q(x)$, substituting Eq.9 into Eq.10 gives:

The ratio of the likelihood function of $q(x)$ to that of the maximum entropy density function $p(x)$ is defined by:

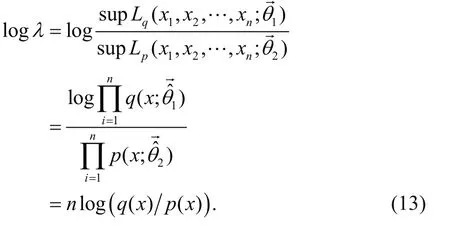

Taking the logarithm of both sides of Eq.12, and using the maximum value of the likelihood function, we obtain:

The parameters in Eq.13 take the values obtained by maximum likelihood estimation; they are omitted here, and the densities are abbreviated as $q(x)$ and $p(x)$.

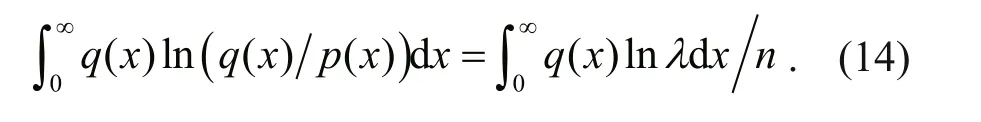

Multiplying both sides of Eq.13 by $q(x)$ and integrating, we have:

From Eqs.11 & 14 we thus obtain:

$I_{p,q} = H_p - H_q = (\ln\lambda)/n.$
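The relation between the entropy gap and the likelihood ratio can be illustrated with a case in which both sides are known. The sketch assumes a single constraint (a fixed mean on $x \geq 0$), for which the maximum entropy density $p(x)$ is exponential, and takes $q(x)$ to be a gamma density with shape 2 and the same mean. These two distributions, the sample size, and the function names are assumptions made for illustration and are not the models fitted in this study. For this pair the exact gap is $H_p - H_q = \ln 2 - \gamma$, with $\gamma$ the Euler-Mascheroni constant.

```python
import math
import random

EULER_GAMMA = 0.5772156649015329


def log_p(x, mean):
    """Log-density of the exponential distribution (maximum entropy for a fixed mean)."""
    return -math.log(mean) - x / mean


def log_q(x, mean):
    """Log-density of a gamma distribution with shape 2 and the same mean."""
    theta = mean / 2.0
    return math.log(x) - x / theta - 2.0 * math.log(theta)


def mean_log_likelihood_ratio(n, mean=1.0, seed=0):
    """(ln lambda)/n with lambda = prod q(x_i) / prod p(x_i), samples drawn from q."""
    rng = random.Random(seed)
    theta = mean / 2.0
    total = 0.0
    for _ in range(n):
        x = rng.gammavariate(2.0, theta)  # shape 2, scale theta
        total += log_q(x, mean) - log_p(x, mean)
    return total / n


mean = 1.0
H_p = 1.0 + math.log(mean)                      # exponential entropy
H_q = 1.0 + EULER_GAMMA + math.log(mean / 2.0)  # gamma(shape=2) entropy
exact_gap = H_p - H_q                           # equals ln 2 - gamma > 0
estimate = mean_log_likelihood_ratio(100_000, mean)
print(exact_gap, estimate)
```

The sample average of the log-likelihood ratio settles near the exact entropy gap, and the gap is positive because $q(x)$ is not the maximum entropy density.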

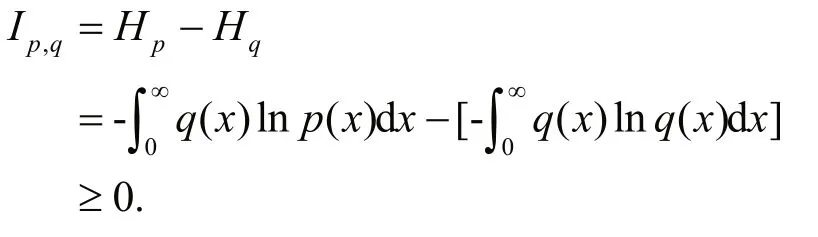

The theorem tells us that the information change difference is essentially the mathematical expectation of the logarithm of the likelihood ratio, from which an important inference follows:

Inference The entropy difference between the maximum entropy probability density function and a non-maximum entropy probability density function satisfies $I_{p,q} \geq 0$, and gradually approaches zero as the number of samples $n$ increases.

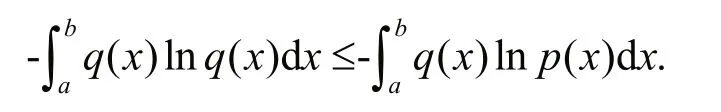

Inference proof It is known from the literature (Escalante et al., 2016; Deng et al., 2021) that for any $p(x)$ and $q(x)$, there is

Let $p(x)$ be the density function that reaches the maximum entropy, and let $q(x)$ be a density function other than the maximum entropy density function. From the Lemma, we have:

Substituting this into Eq.11 yields the inference.

2.2 Study on the sensitivity of the model to data sample dispersion

The influence of data samples on the uncertainty of the model is reflected not only in their quantity; the range and degree of dispersion of the sample also affect the uncertainty of the model. This is mainly reflected in the sensitivity of the model to extreme data and in the applicability of the model. Following the Chebyshev inequality (Niculescu and Pečarić, 2010; Chen et al., 2016; Shamshirband et al., 2020), for two observation sequences with the same length and the same mathematical expectation, if one has a larger range of variation than the other, its variance will also be larger. That is, the mathematical expectation reflects the center of the distribution of the random variable, and the variance reflects the dispersion of the random variable about its mean. It is on the basis of variance that the literature (Liu et al., 2018, 2019c; Ponce-López et al., 2016) has established the relationship between information entropy and the degree of data dispersion.

Let $X$ be a continuous random variable whose density function, mathematical expectation, and standard deviation are $f(x)$, $\mu$, and $\sigma$, respectively. The random variable can be standardized as:

$Y = (X - \mu)/\sigma.$

Then the standardized density function is:

$p(y) = \sigma f(\mu + \sigma y).$

The entropy after standardization is:

It can be seen from Eq.15 that the information entropy of the random variable differs from the standardized information entropy by a constant, and this constant is exactly the logarithm of the standard deviation. Hence, for the same kind of random variable, the information entropy increases with the standard deviation. Information entropy measures the uncertainty of a random variable, and the standard deviation or variance measures its degree of dispersion. Therefore, the larger the range of the data samples, the greater the uncertainty. For example, when the value range is bounded, the maximum entropy is reached when the random variable is uniformly distributed, in which case its range of variation covers the entire interval.
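The constant offset between the entropy of $X$ and that of the standardized variable $Y$ can be verified numerically. The sketch below assumes a normal density purely for convenience (the argument holds for any density with finite $\sigma$); the values of `mu` and `sigma` and the function names are illustrative assumptions.

```python
import math


def normal_pdf(x, mu, sigma):
    """Normal density with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def differential_entropy(pdf, lo, hi, steps=100_000):
    """Midpoint-rule approximation of -integral of f(x) ln f(x) over [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        f = pdf(lo + (k + 0.5) * dx)
        if f > 0.0:
            total -= f * math.log(f) * dx
    return total


mu, sigma = 2.5, 1.7  # hypothetical mean and standard deviation


def f(x):
    return normal_pdf(x, mu, sigma)


def p(y):
    # Standardized density p(y) = sigma * f(mu + sigma * y).
    return sigma * f(mu + sigma * y)


H_x = differential_entropy(f, mu - 12 * sigma, mu + 12 * sigma)
H_y = differential_entropy(p, -12.0, 12.0)
print(H_x - H_y, math.log(sigma))
```

Doubling `sigma` therefore raises the entropy by $\ln 2$, regardless of `mu`.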

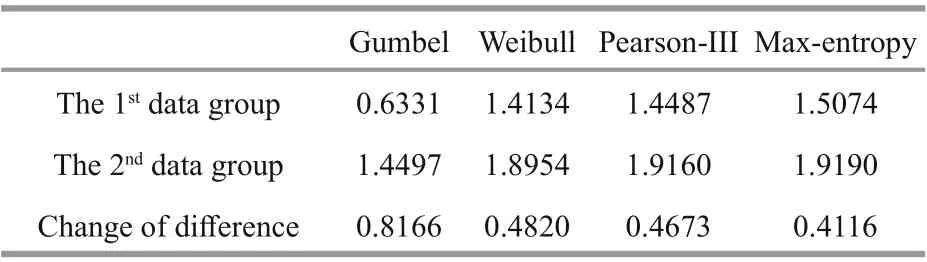

Table 1 Effect of sample quantity on information entropy

2.3 Research on the overall uncertainty of the model

Consider the impact of the number of data samples on information entropy. Equation 8 shows that as the number of samples increases, the information entropy of the distribution model increases accordingly. Therefore, the ratio of the increase in information entropy to the increase in sample size can be regarded as the rate of change of information entropy with respect to sample size. This is $\Delta H_1$ in Eq.7.

Consider the impact of data dispersion on information entropy. The variance represents the degree of dispersion of the data, and Eq.15 indicates that the larger the degree of dispersion (the larger the variance), the larger the information entropy of the model. In fact, the degree of dispersion of the data has the greatest impact on the information entropy of the model, and its influence is easy to understand. Under the principle of maximum entropy, when the values of the data are limited to a certain range, the maximum entropy is reached when the data are uniformly distributed; for the same reason, the greater the degree of dispersion of the sample, the closer it is to the uniform distribution and hence to the maximum entropy. For two data sets with different variances but the same number of samples, the ratio of the increase in information entropy to the increase in variance can be regarded as the rate of change of information entropy with respect to variance, which is $\Delta H_2$ in Eq.7.

The estimation of the model parameters also affects the uncertainty. In this paper, the information entropy of the sampling error is obtained by the Monte Carlo method. For each parameter estimator of the distribution model, a large number of random numbers following the corresponding distribution are generated, the estimate of the design parameter is obtained from the empirical distribution function, and the error between the estimate and the theoretical value of the distribution is calculated. The information entropy of this error is then calculated, which gives the influence of the sampling error on the uncertainty and its rate of change with respect to the number of samples, $\Delta H_3$.
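The Monte Carlo procedure described above can be sketched as follows. This is an illustration under stated assumptions and not the implementation used in this study: the Gumbel parent distribution, its parameters, the number of trials, the histogram estimator of entropy, and all function names are hypothetical choices made here.

```python
import math
import random


def gumbel_quantile(prob, mu, beta):
    """Theoretical Gumbel value with non-exceedance probability prob."""
    return mu - beta * math.log(-math.log(prob))


def gumbel_sample(rng, mu, beta):
    """Inverse-transform draw from the Gumbel distribution."""
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    return gumbel_quantile(u, mu, beta)


def empirical_quantile(sample, prob):
    """Smallest order statistic whose empirical CDF value is at least prob."""
    ordered = sorted(sample)
    k = max(math.ceil(prob * len(ordered)) - 1, 0)
    return ordered[k]


def histogram_entropy(values, bins=30):
    """Histogram estimate of differential entropy: -sum p_k ln(p_k / width)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    n = len(values)
    return -sum((c / n) * math.log(c / (n * width)) for c in counts if c > 0)


def sampling_error_entropy(n, return_period, mu, beta, trials=5000, seed=0):
    """Entropy of (empirical estimate - theoretical value) over repeated samples of size n."""
    rng = random.Random(seed)
    prob = 1.0 - 1.0 / return_period
    target = gumbel_quantile(prob, mu, beta)
    errors = []
    for _ in range(trials):
        sample = [gumbel_sample(rng, mu, beta) for _ in range(n)]
        errors.append(empirical_quantile(sample, prob) - target)
    return histogram_entropy(errors)


# Hypothetical setting: 26 annual maxima, 10-year return level.
entropy_26 = sampling_error_entropy(n=26, return_period=10, mu=3.0, beta=0.8)
print(entropy_26)
```

Repeating the call for two sample sizes and dividing the change in entropy by the change in `n` gives a finite-difference rate of the kind that $\Delta H_3$ denotes.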

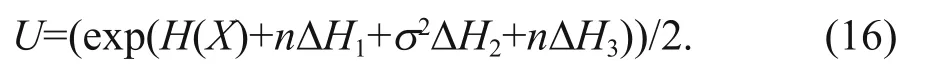

Based on the uncertainty information from the sample size, degree of dispersion, sampling error, etc., this study proposes a formula for calculating the overall uncertainty:

The above formula uses information entropy as its measurement tool. Because information entropy represents the uncertainty of random variables, using it to describe the probability distribution of random variables has distinct advantages (Jiang et al., 2019; Song et al., 2019; Xu and Lei, 2019).

3 ENGINEERING EXAMPLE

The uncertainty calculation method described in this paper is of practical significance for accurately calculating the overall uncertainty of different models and for reasonably selecting the type of distribution model. It provides a theoretical basis for selecting the model with the lowest overall uncertainty, so that the design criteria of offshore structures under various marine environmental factors can be reasonably determined.

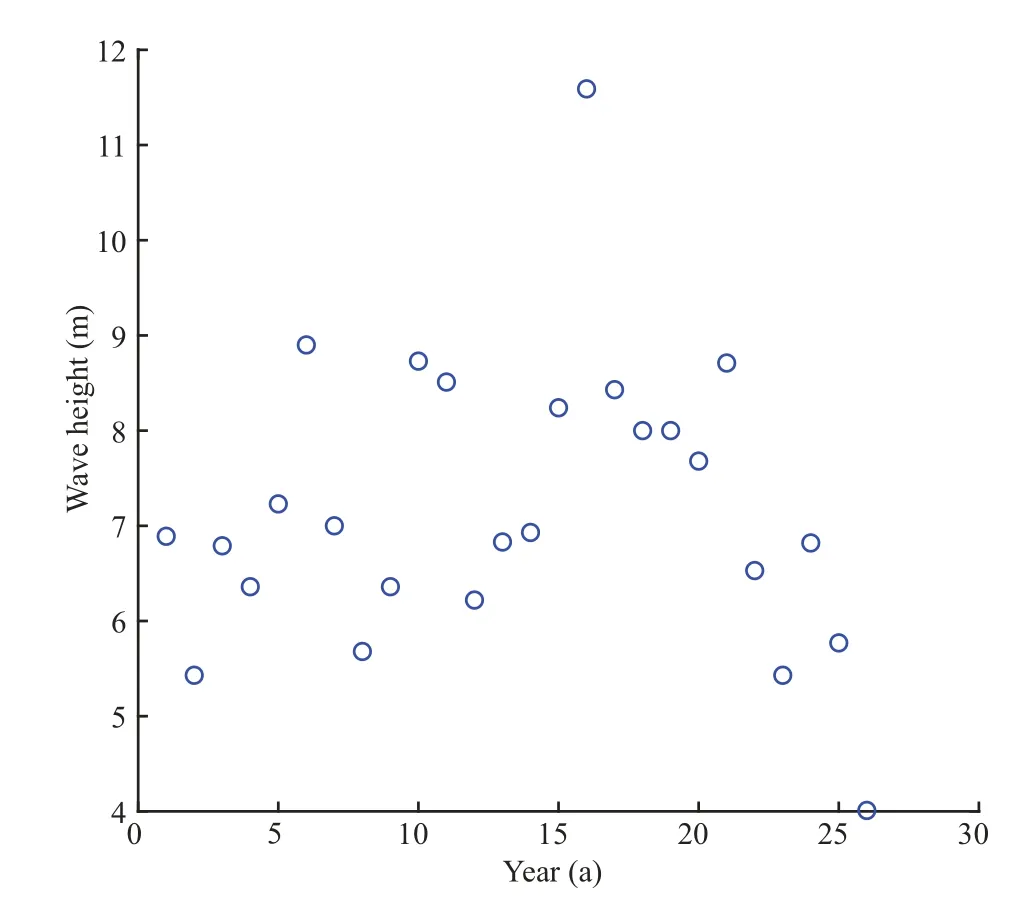

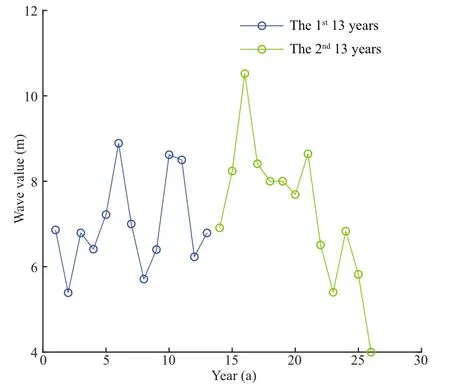

The application of the model uncertainty study is illustrated with 26 years of extreme wave height data from an island hydrological observation station in the Yellow Sea. First, the scatter plot of the extreme wave height sequence is given (Fig.1). The sequence was obtained with the block maxima method (BMM), so the data can be regarded as a sample from the Generalized Extreme Value (GEV) distribution (Jaynes, 1957; Zeng et al., 2017).

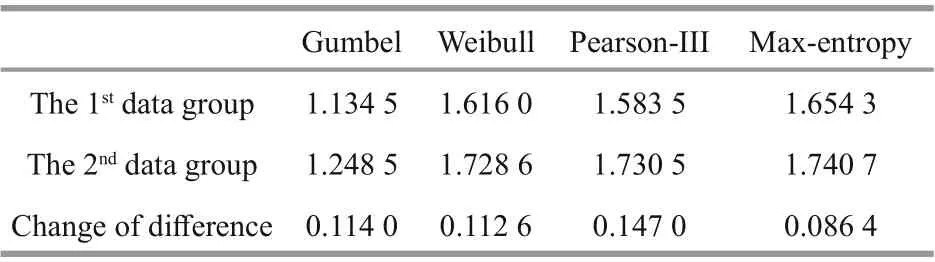

First, we analyzed the impact of changes in the number of data samples on the uncertainty of the model. Since estimation models under marine environmental conditions generally regard data as representative when at least 15-20 years of continuous observations are available, the data were divided into two groups. The first group consists of the first 20 data points, and the second group consists of all 26 data points. Both were used to calculate the change in the information entropy of the model between the two sets of data. It can be seen from Eq.2 that as the number of sample data increases, the information entropy gradually increases. Therefore, a small change in information entropy indicates that the model is less affected by the change in the number of data, and its adaptability to the data is better. Otherwise, the model is more sensitive to changes in the number of data points. The calculation results are shown in Table 1.

Fig.1 Extreme wave height of a hydrological observation station in the Yellow Sea for 26 consecutive years

Table 2 Effect of data dispersion on information entropy

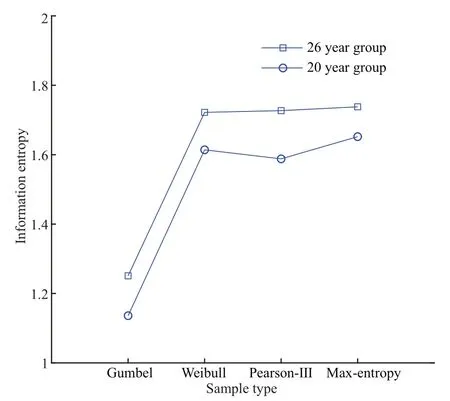

It can be seen from Table 1 that as the number of samples increases, the information entropy of each estimation model also increases. The maximum entropy model shows the smallest change, the Pearson-III model shows the largest change, and the changes for the Gumbel and Weibull models lie between the two and differ little from each other. This reflects the performance of the maximum entropy model when the data period is short: its information entropy has the smallest range of variation, that is, the smallest range of uncertainty, and it is also more stable when fewer data are available. Meanwhile, Fig.2 shows that the information entropy of the maximum entropy model is always higher than that of the other models, whether the data period is longer or shorter, which indicates that the model determined by the maximum entropy of information is the one least affected by subjective human factors. From another perspective, it also reflects that the information entropies of the various models gradually converge as the number of data samples increases.

Fig.2 Information entropy of different models for two groups of data

Fig.3 Sample data divided into two consecutive groups

Secondly, the influence of the degree of dispersion of the data sample on the uncertainty is analyzed. Since the information entropy of the distribution model increases with the number of samples, in order to avoid the influence of the sample size on the result, we divided the sample data into two consecutive groups, each containing 13 samples. The maximum of the 26-year data appeared in the 16th year and the minimum appeared in the 26th year, so both fall in the second group. It can also be seen from Fig.3 that the second group is significantly more variable than the first: its range of fluctuation is larger and its degree of dispersion is higher. Table 2 shows the information entropy values of the two groups for the different estimation models.

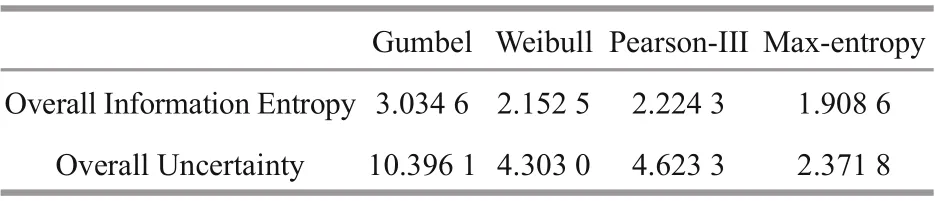

Table 3 Overall information entropy and overall uncertainty of each distribution model

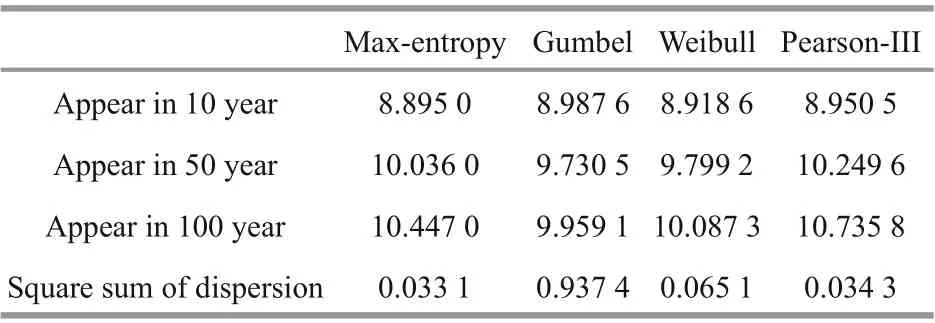

Table 4 Return wave heights for different return periods and different models

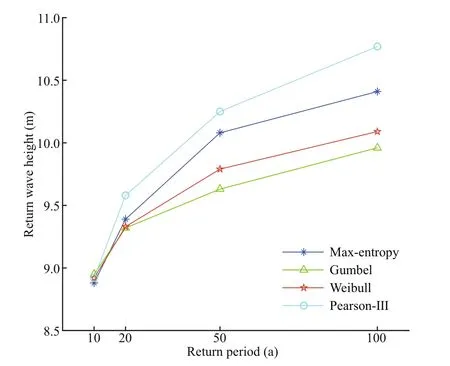

It can be seen from Table 2 that when the degree of dispersion of the data increases, that is, when the fluctuation range of the data is wider and the variance is larger, the information entropy shows a significant increasing trend. Comparing Tables 1 & 2 shows that the growth in information entropy caused by the variance significantly exceeds that caused by the increase in the number of samples. The information entropy calculated from the data of the last 13 years even greatly exceeds the information entropy obtained from all 26 years of data. Information entropy is a measure of the uncertainty of random events. According to the principle of maximum entropy, for a random variable whose distribution is unknown and for which the data are insufficient to determine the distribution function, the first requirement is to match the known data while making the fewest assumptions about the unknown part of the distribution; the larger the unknown part, the greater the entropy. So far, we have calculated the changes in the information entropy of each distribution model when the number and variance of the data samples change. The information entropy of each distribution model and the information entropy caused by the sampling error of the model parameter estimators are also given. With Equations 7 & 16, the overall information entropy of each model can be calculated, and from it the overall uncertainty of the model. The results for the overall information entropy of each model are shown in Table 3. Table 4 and Fig.4 show the design wave heights of the different distribution models.

Fig.4 Return level plot of wave height for different distribution models

It can be seen from Table 3 that, among the extreme value distribution models commonly used to calculate marine environmental design parameters, the Gumbel distribution has the largest overall information entropy and overall uncertainty, while the max-entropy distribution has the smallest, with values of 1.126 and 7.024 3, respectively. The overall information entropies of the Weibull and Pearson-III distributions are close, with a difference of 0.071 8. In addition, the differences between the Weibull distribution and the max-entropy distribution, and between the Pearson-III distribution and the max-entropy distribution, are 0.243 9 and 0.315 7, respectively. For short return periods, the design wave heights calculated by the different distribution functions differ little: the difference between the maximum and minimum return levels of wave height is 0.092 6 (Table 4). As the return period increases, the differences between the results grow, and the difference between the maximum and minimum design wave heights for the 100-year return period is 0.776 7. The design wave height from the max-entropy distribution is lower than that from the Pearson-III distribution specified in the hydrological code for sea ports (Table 4). In view of the above, the max-entropy distribution is recommended for calculating the design wave height when determining the design standards of breakwaters and flood dikes.
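The return levels compared above are quantiles of the fitted distribution at non-exceedance probability $1 - 1/T$ for return period $T$. As a minimal sketch for one of the models, the Gumbel return level is $x_T = \mu - \beta\ln[-\ln(1 - 1/T)]$. The wave heights below are hypothetical (the station record is not public), and the method-of-moments fit and function names are assumptions chosen for brevity, not the estimation procedure of this study.

```python
import math
import statistics

EULER_GAMMA = 0.5772156649015329


def fit_gumbel_moments(sample):
    """Method-of-moments Gumbel fit: beta = s*sqrt(6)/pi, mu = mean - gamma*beta."""
    beta = statistics.stdev(sample) * math.sqrt(6.0) / math.pi
    mu = statistics.mean(sample) - EULER_GAMMA * beta
    return mu, beta


def gumbel_return_level(mu, beta, return_period):
    """Value exceeded on average once every return_period years."""
    return mu - beta * math.log(-math.log(1.0 - 1.0 / return_period))


# Hypothetical annual-maximum wave heights in metres (NOT the Yellow Sea record).
annual_maxima = [3.1, 2.8, 3.6, 4.2, 3.0, 3.3, 2.9, 3.8, 4.5, 3.4, 3.2, 3.7, 2.7]

mu, beta = fit_gumbel_moments(annual_maxima)
levels = {T: gumbel_return_level(mu, beta, T) for T in (10, 50, 100)}
for T, x_T in levels.items():
    print(f"T = {T:3d} years: {x_T:.2f} m")
```

Because $x_T$ grows with $-\ln[-\ln(1 - 1/T)]$, differences in the fitted scale between models matter more at long return periods, which is in line with the widening spread reported for the 100-year level.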

4 CONCLUSION

This study provides a method for calculating the overall uncertainty of a model by considering the influence of sample information, such as sample size, degree of dispersion, and the sampling error of the model estimators, on the information entropy of the model. The method was applied to measured extreme wave height data. Specifically, the following conclusions are drawn:

(1) As can be seen from Table 3, among the candidate models, the overall uncertainty of the maximum entropy distribution model is the smallest for the 26-year continuous wave height data, followed by the Weibull and Pearson-III models, while that of the Gumbel distribution model is the largest. This suggests that the maximum entropy distribution model should be the first choice in model selection. The overall uncertainties of the Pearson-III and Weibull distribution models differ little, and either can serve as the second choice.

(2) When the degree of dispersion of the data is large, that is, when the variance of the data is large, the influence of the variance on the overall information entropy should be considered. Tables 1 & 2 show that this influence is substantial: the larger variance of the data in the last 13 years raises the information entropy above that obtained from all 26 years of data.

(3) The overall uncertainty of the design parameter estimation model depends on its overall information entropy. The overall information entropy could further be formed as a weighted combination of its components. Appropriate weights would bring the overall information entropy closer to the real situation (Zeng et al., 2018; Liu et al., 2020a, b). However, how to determine the weights reasonably and accurately remains a key open problem.

(4) This study shows that the overall uncertainty of the maximum entropy distribution model is the smallest when it is used to calculate the extreme wave height, which indicates that the extreme wave height calculated by this model is the most consistent with the actual situation. Applying this model to the determination of design standards in offshore engineering offers theoretical guidance for the statistical prediction of typhoon disasters, the analysis of the dynamic positioning capability of drilling ships, and the determination of the bottom deck elevation of offshore platforms.

5 DATA AVAILABILITY STATEMENT

The data generated and/or analyzed during this study are not publicly available. Interested readers may contact the corresponding author for details.
