Influence of Data Clouds Fusion From 3D Real-Time Vision System on Robotic Group Dead Reckoning in Unknown Terrain

2020-05-22MykhailoIvanovOlegSergyienkoVeraTyrsaLarsLindnerWendyFloresFuentesJulioCesarRodrguezQuionezWilmarHernandezandPaoloMercorelli

Mykhailo Ivanov, Oleg Sergyienko, Vera Tyrsa, Lars Lindner, Wendy Flores-Fuentes,Julio Cesar Rodríguez-Quiñonez, Wilmar Hernandez, and Paolo Mercorelli

Abstract—This paper proposes the solution of tasks set required for autonomous robotic group behavior optimization during the mission on a distributed area in a cluttered hazardous terrain.The navigation scheme uses the benefits of the original real-time technical vision system (TVS) based on a dynamic triangulation principle. The method uses TVS output data with fuzzy logic rules processing for resolution stabilization. Based on previous researches, the dynamic communication network model is modified to implement the propagation of information with a feedback method for more stable data exchange inside the robotic group.According to the comparative analysis of approximation methods,in this paper authors are proposing to use two-steps post-processing path planning aiming to get a smooth and energy-saving trajectory. The article provides a wide range of studies and computational experiment results for different scenarios for evaluation of common cloud point influence on robotic motion planning.

I. Introduction

NOWADAYS the development of distributed artificial intelligence (AI) of multi-agent systems is a subject of research highly demanded [1], [2]. Frequently this AI concept application is inspired by the social behavior of groups of insects, animals, and fishes. This behaviorism is based on simple rules for interaction with surroundings and communication within the group, so a group of the same species can achieve complex goals with less amount of energy spent by each individual. In [3] and [4] authors have overviewed some representatives models of the fauna. The adoption of such behaviorism models can be found in swarm intelligence/computations [5], [6].

Currently, swarm intelligence systems are specialized in multi-tasks that require coverage of unknown environments(cluttered or rugged terrains, indoor premises, etc.) by several robots. In such robotic group (RG) researchers are using the term swarm [7]–[9]. A detailed review of swarm robotics projects can be found in [10].

In [11] and [12] the main advantages of swarm robotics are defined:

1) Multithreading: individual members can perform various tasks simultaneously.

2) Scalability: a new member can be added without modifications of soft or hardware.

3) Job expansion: solving harder tasks that can not be performed by a single robot.

4) Graceful degradation: the swarm continues to perform tasks even if some of them are out of order.

5) Sensing network: located at different parts of the terrain swarm can be used for data acquisition.

However, according to [13] – [15], it can appear some tasks to take into consideration:

1) Possible non-static surrounding;

2) High level of informational entropy about the environment;

3) Task accomplishment options variety;

4) A complexity of teamwork rules;

5) Robotic swarm optimal localization;

6) Data transferring within the swarm;

7) Data loss and storage redundancy.

The main contribution of our work is the improvement of RG dead reckoning in the case of fuzzed knowledge about the environment. This paper presents a solution of combined dead reckoning, data transferring and machine vision, based on authors’ original real-time technical vision system (TVS).During the experiments, a set-up of three robots was tested to solve the task of target localization in a static cluttered terrain after some natural caused disaster (for example, earthquake).

The presented behavior model of the robotic group is the further improvement of the single robot algorithm motion planning proposed in [16]. It includes the advanced method of communication with A* dynamic path planning presented using the author’s real-time vision system in [10] and [17]where also comparisons of other path planning methods can be found.

In previous works authors used using path planning and obstacle avoidance, according to the local field of view [18],[19].

However, all the above-mentioned similar approaches (as well as many other previously known) to obstacle avoidance and path planning (dead reckoning) are mostly simplified function of visual servoing by paradigm “see something and avoid collision”. The herein described approach, due to its ability to measure the precise coordinates of the selected points on the obstacle surface, is another level of the theoretical generalization: to combine the function of trivial obstacle avoidance with the optimization of the robotic trajectory under two criterions, path length vs energetic losses(trajectory smoothness). Additionally, our case considers the robotic group movement in an unknown environment with no light conditions where the methodology stack provides the global mapping of the environment for further post-processing and 3D reconstruction.

So, theoretically, it is another kind of task, consideringndimensional path planning and optimization basing on the advantages of a scanning vision system.

Analyzing publications on the navigation of robotic swarms can lead to the following conclusions. Many of the already mentioned articles [20] – [22] do review the tasks of navigation, obstacle detection, etc. But most of them providing solution for one task at a time.

Proposed methodology and behavior model can be used in different tasks like environment monitoring, digitalization,foraging, etc. For example, implementation of robotic group and solution for terrain sectoring can improve the task of inspection for power facilities [23]. Changing the sonar sensors to the authors TVS can improve the tracking control for cushion robot [24] by adding more precise distance measurements and add possibility to create the map of static environment for further motion planning and scheduling. In case of [12] a big improvement can be dynamic network implementation for data transferring to improve the group navigation.

This paper is organized as follows: Section II covers the principals of used machine vision. Section III is dedicated to problems of group dead reckoning. Based on the previous research [10] and [17]. Section IV presents the advances of data transferring. Section V shows the simulations of system structure and experimental results.

The presented solution is a direct improvement of the previous publications [10], [16], [17] and [25].

This article presents for the first time a complex solution for SLAM tasks using a distributed group of robots [10], [17] and an improved data transferring algorithm comparing to [10]and [25]. Such a combined approach allows to obtain a novel contribution in order to improve the scanning quality and save time simultaneously, as well as a novel interesting tool for optimization of (field-of-view) FOV by sectorization, in order to maximize the quantity of information in a collective scan using the same quantity of n robots. We can mention as a useful novelty that this paper, takes into consideration the results of authors’ previous research [26] wherein the discussion about the optimal number of robots (n) for the finite scanning task in similar scenarios a group of three robots (n= 3) was proved.

II. Machine Vision

Many engineers and researchers prefer robots [27], [28] and drones [29] equipped with CCD or CMOS cameras or more expensive equipment such as time-of-flight (ToF) cameras[30]. In other cases, it is possible to use the inertial navigation system [31].

However, our RG highly probable moves in low light conditions with a large number of obstacles, where the aforementioned vision systems may not always give the correct results during post-processing. Therefore, working in such difficult conditions, the authors’ novel real-time vision system TVS (Fig. 1(a)) can satisfy with its accuracy and data representation.

The herein presented real-time TVS is appropriated novel tool for optimization of the considered task of the robotic group collective behavior improvement. The advantages of our system when compared with other methods of 2D/3D laser sensors, possessed in the following points:

1) Original TVS possess the property of natural physical filter [32], [33] of redundant information about robot surrounding, adjusting the quantity of the scanned points within FOV according to the requirements of current practical application.

2) This TVS in comparison to other methods of 2D/3D laser sensors has significantly wider FOV [32], [34], [35] due to its original patented [35] rotational sensory part. This circumstance permits two advantages: better simultaneous capture of the detailed data about surrounding [18], and wider possibility to vary the scenario sectorization betweennparticipants equipped with identical TVS [10], [17].

3) Present TVS, as shown in [16] represents the data naturally in the same Cartesian coordinate system where operating the robot, which delete the necessity of any postprocessing time, and in the same notation system as robot state matrix, which simplify any additional transformation within scenario.

4) The multiple experimental results [16], [36]–[38] shows that obtained scanning data are very appropriated for neuronal networks application in scanned surface rectification, and after this are significantly better that any surface reconstruction from stereovision systems.

5) The performance convenience in this case is given including the frequency of operation. According to [39] our TVS possess the feature of variable scanning step, which permits on the different stages of swarm mission adjust the fastness/accuracy to the current challenge; or even provide the variable scanning velocity based on enhanced control algorithms of DC motor of laser ray positioner, introduced in detail in [40] and [41].

The authors of TVS uses the principle of dynamic triangulation described in [32] (Figs. 1(b) and (c)). Further work of [36] proposes a method for improving the resolution of TVS and its implementation for surface recognition. This approach to obstacle recognition was implemented for single robot navigation in [16]. The concepts of data transferring within RG was firstly presented in [10]. Further, advances of authors’ 3D TVS found its development in [40] and [41]. Here the system received its internal changes and appliance as a machine vision system for UAV.

Fig. 1. Principle of laser scanning TVS.

3D TVS (Fig. 1(a)) is able to work in complete darkness and can obtain the real 3D coordinates of any point highlighted by laser rays on real surfaces. The theory is based on the dynamic triangulation method. The main components of the TVS are the positioning laser (PL) and the scanning aperture (SA) (Fig. 1(b)).

The system works are as follows: the laser emits a beam toward a fixed 45° mirror, then it redirects the beam orthogonally into a rotating 45° mirror driven by a stepper motor. For the guaranteed positioning of the laser direction,the PL is driven by a stepper motor. SA receives the reflected laser beam, which indicates that the system has detected an obstacle. However, the stepper motor has one weak point: on long scanning distances, dead-zones between two adjacent points of scanning are produced, which does not allow the detection of obstacles between these points. The solution to this problem can be found in [42] and [43]. The process of dynamic triangulation is fully described in [33], [34] and consists of detection of laser instantly highlighted point coordinates based on two detected anglesBijandCij(herei,jmeans the number of horizontal and vertical scanning steps consequently) and fixed distance between projector and receptor. In such a triangle (Fig. 1(b)), if three parameters are known, it allows calculating all others. AngleBijis calculated as a simple ratio of two counters codes: a number of clock pulses between two home pulses and in interval “home pulse –spot pulse” (Fig. 1(c)).

whereNAis the number of reference pulses when laser rays are detected by the stop sensor andN2πis the number of reference pulses when the 45° mirror completes a 360° turn detected by the zero sensor.

To calculatex,yandzcoordinates, the following equations are used:

whereais a length of the triangulation base (distance between the centers of positioning laser source and scanning aperture).

According to the specifics of proposed TVS, it returns the scanned surface as a point cloud (Fig. 2). As the TVS uses stepper motors, on short distances it gives high detailed objects (Fig. 2(c) contains 10 663 point), while on higher distances it loses its resolution depending to the opening angle of each step of scanning (Fig. 2(b) scan of “Mayan pyramid”,on sides the point cloud density is less than a part of a cloud with stairs has).

In the memory of a robot, each point obtained by TVS is represented by three variables:x,y,zof the Cartesian coordinate system. Each of them is stored using a double data type that equals to 64 bits per number. So, to store one point of environment 192bitst of memory is used. Using simple calculation it is possible to say that to store in memory“Object A” (Fig. 2(a)) 61.7 KB are needed, for “Object B”(Fig. 2(b)) 75.14 KB and for “Object C” (Fig. 2(c)) 249.9 KB are used. However, a robot needs to move and to explore environment sizes of what can be limitless. So the data that need to be processed can reach gigabytes and more for one autonomous vehicle, but for the RG it needs to be multiplied on the number of individuals. That is the main reason, why it is necessary to reduce the amount of stored point in memory to a minimum that is required for obstacle avoidance and dead reckoning (low-density scanning), and detailed object scanning (high-density scanning) to use on-demand.

References [16] and [34] show that the TVS FOV has several accuracy zones. That is why in the paper high, average and low accuracy zones (Fig. 3) will be allocated. For each of these zones, the opening angle [39] equivalent to store detected points on obstacles will be determined. This is made for saving the cloud point density on a distance and decrease the amount of data needed to store the environment scan.

As a starting point, the arc of one meter according to possible 160° FOV of TVS will be used. Using the research data and type of robots described in [16] the cloud point density (ρ) of the image is 11 points per meter.

Fig. 2. Examples of surfaces scanned by TVS.

whereλis the FOV angle,βis the opening angle equivalent(14.5636° for initial calculations),pis the length of an arc(one meter for initial calculations).

In general, the length of an arc can be calculated as follows:

whereris the radius of an arc (striking distance).

To prevent changes in the selected resolution, the opening angle will be calculated using

Average opening angle for each of the striking distance zone is defined by

完成前四步工作之后,需开始进行临时排水系统的建设,选择强夯技术的施工点。强夯技术是整个工程建设的重中之重,强夯技术运用是否成功,将直接影响该工程的建设质量。在强夯技术应用的过程中,需要注意强夯点的选择,强夯的应用次数以及强夯整体效果。

whereβiis an opening angle for each accuracy zone,βijis an opening angle for each striking distance in zonei.

Accuracy zones are separated in a next way: from 0 to 1 meter, from 1 to 3 meter and from 3 to 5 meter for high,average and low accuracy zones respectively. Taking into account such partitioning of zones the results of the calculation are represented graphically in Fig. 4.

Fig. 4(a) represents the general relation between opening angles and striking distances for the reviewed density of points. Fig. 4(b) represents the same but also taking into account point cloud density. Using the defined accuracy zones Fig. 4(c) demonstrates average values of such a ratio.

According to the calculation started on the initial point cloud density (11 points/m), the average angles will be 10.059° for “ low accuracy zone”, 3.011° for “average accuracy zone”, and 1.34° for “high accuracy zone”. As we can observe in the Figure, the average angle for the “low accuracy zone” range provides a small resolution equal to 5–6 points per meter. In this case, it is necessary to increase the resolution. That is why the low edge value of an opening angle for the “low accuracy zone” will be taken. The set of angles will be changed to the next values: 5.209°, 3.011°,1.34°.

Identification of the obtained point on the obstacle surface,belonging to a specific accuracy zone, is simplified to the solution of a point belonging to an ellipse in a Cartesian coordinate system. Using the extended algebra of algorithms it can be written as the next expression:

III. Obstacle Avoidance and Dead Reckoning

In applied mathematics exist well-developed algorithms for finding the way in an unknown or partially known environment (optimal and heuristic algorithms). For this purpose, the discrete mathematics (graph theory) and linear programming are usually used. Tasks of the shortest path search in the graph are known and studied widely (for example, Dijkstra’s, Floyd-Warshell’s, Prim’s, Kruskal’s,algorithms [44], [45], etc.).

In the case of our TVS, all the obstacles can be separated into two classes: positive obstacles (above the level of local zero of robot’s coordinate system, Fig 5(a) ) and negative(below the local zero of robot’s coordinate system, Fig 5(b)).

Fig. 4. Opening angle dependencies.

According to the principles of applied TVS, it is expedient to use the algorithm A* as the tool of obstacle avoidance in this research. The terrain can be represented as a matrix where each cell will have a linear size of the robot’s half diagonal.Cells are having two possible states: available for the path through (walkable) or saturated by obstacle without the possibility to the path through (non-walkable). Initially, each cell is walkable. After the detection of the obstacle within the cell, it changes the state. All the cells around it also change the state to “non-walkable” in order to create a safe zone and avoid collisions during the robot’s turns (Fig. 6(a)). After execution of such operations set, the robot obtains a matrix of surrounding state, where the results of TVS scanning (afterzcoordinate omission) are replaced by a matrix of the scanned sector (binary map) with a defined status of all inside cells(walkable/non-walkable, or occupied/empty). Preliminary, the robot’s control unit decides the ability to pass the sector under the attitudinal constraints: it is available if the obstacle height is lower than 0.1 of the wheel radius or it is above the 1.2 of robot’s height (basing on the omittedz-coordinate). Such operation permits to simplify the further path-planning, using a reduced 2D model.

Fig. 5. Obstacles types.

A*, according to its classical form [10], performs the wave propagation toward the goal (points of interest), searching for the first best match, which is the set of nearest “walkable cells”. This set of cells supposed to be visited by the robot during its movement (Fig. 6(b)). The trajectory in Fig. 6(b)represents the first match solution. However, this solution is not the best due to two reasons: it contains redundant information (5 low-informative points, which are not strictly required for trajectory planning) and non-smooth trajectory in this case, which increase the energetic load on driving mechanism and robot’s wheels and which finally leads to undesired additional losses of robot’s power source lifetime.To avoid these problems, we propose to perform additional post-processing. For continuous (smoother) trajectories in the first step, we will remove all unnecessary nodes from the trajectory, remaining only nodes where the direction of movement is changed (Fig. 6(c)). In the second step of the post-processing, the path trajectory approximation should be executed (Fig. 6(d)), in order to improve the robot’s movement smoothness. In this case, as the approximation, we consider the replacement of the polygonal line with a smooth curve. The selection of the appropriated method of individual trajectory approximation will be provided below. It was done to obtain coherence between the decisions interrelation of the navigation system actions and the ability to anticipate and provide feedback to events with sufficient speed [46].

The present research was reviewed and compared with several methods of approximation: point to point [16], Beziers approximation and Dubins path.

Fig. 6. World representation and dead reckoning with two-step post-processing.

Fig. 7. Obstacle avoidance with Dubins path and Bezier curves.

In [16] was shown that ten points are enough for building a smooth trajectory. This amount of data requires a repetitive calculation of the movement vector at every point, which is time-consuming. One of the simplified solutions is to use fewer points with Bezier curve as an approximation function(9) [47], [48].

wheretis a parameter,nis the degree of Bernstein’s polynomial basis,iis the summation index,Jn,i(t) basis functions of the Bezier curve, also known as Bernstein basis polynomials of degree n, andBirepresents theith vertex of the Bezier polygon. Such approach is useful for various tasks of path planning for autonomous vehicles [49], [50].

It this case only three points are needed to get the smoothen trajectory. As an example, let us solve the same task using a Dubins path [51]. The following equations describe it

where (x,y) is the position of the robot,θis the heading, a robot is moving using the constant speedV. The optimal path type always is based on the robot’s ability to perform the next basic actions: “right turn (R)”, “left turn (L)” or “driving straight (S)”. Every time the optimal path will be one of the next six types: RSR, RSL, LSR, LSL, RLR, and LRL.

Using the Dubins path (Fig. 7) the distance becomes longer and at the initial point, the robot by default is in an inconvenient position to start a movement along the recommended trajectory (additional inconvenient movement required). Making a detailed comparison of these two algorithms, the result of the path made by Bezier approximation is 10.3%–12.7% more effective than the solution of the same situations using Dubins path.

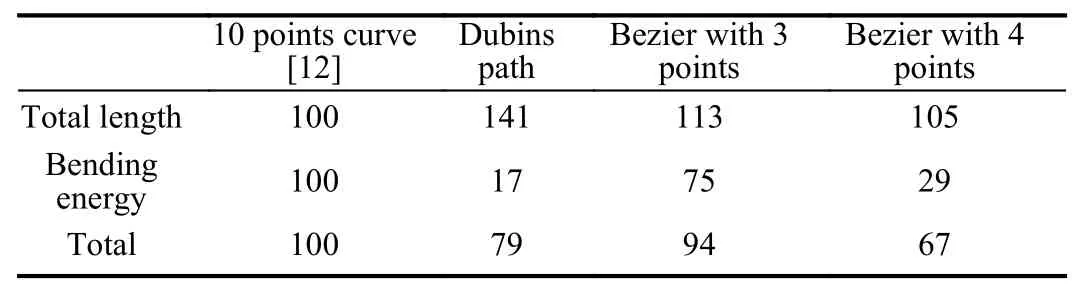

Further, these methods were compared using the parameters of total path length and trajectory smoothness, represented as an amount of trajectory bending energy [46].

The bending energy is a curvature functionk, used to estimate the smoothness of the robot’s navigation trajectory.Bending energy is calculated as the sum of the squares of the curvature at each point of the line along its length. The bending energy of the trajectory of a robot is given by

wherek(xi,yi) is the curvature at each point of the robot’s trajectory andnis the number of points within the trajectory.Here the curvature of the trajectory at thenth point is the inverse value of the circle radius built to the point of arc where it moves along at the given instance of time. The results are shown in Table I. The 10 point curve was taken as the basis of comparison (all represented as 100%). The summarized results are represented as an average value between the total length and bending energy characteristic. In other words, each of them has the same weight for calculation.

Comparing three motion planning methods (Dubins path,Bezier approximation using 3 and 4 points) we can get the next conclusions. The Dubins path received the highest length,but the best bending energy saving. The Bezier approximation using 3 points polygon shows the worst ability for optimized path planning. Ultimately, Bezier approximation using 4 point polygon gives the most satisfactory average result. In other words, its application permits to get the minimally extended trajectory, providing at the same time the maximum savings of the robot’s source and ware of mechanisms.

TABLE I Motion Planning Comparing Results (%)

In general, these methods are solving the task of motion planning for the independent robot in a group. It is obvious that data exchange between thenrobots in a group is a good tool to obtain additional information. It can serve for more efficient implementation of all mentioned above methods. The main idea is to give each individual robot in a group more knowledge about the sector as quickly as possible. Moreover,in some cases, a certain portion of information can be unavailable for theith individual from its own position.

IV. Communication

For such common knowledge inside the group, the efficient communication between allnindividuals must be organized.Our previous research [10] and [17] describes the case when the information needs to be transferred between all robots within the group, with a notification that the transfer is completed. This task is called the propagation of information with feedback (PIF) described in [52].

Previously two models of data transferring [10] were reviewed: the information exchange with centralized management (CM) and strategies of centralized hierarchical control (CHC). Using the strategy of CM in an RG, every robotri(i= 1, 2, … ,N) of the group transmits data toward the central node. On the other hand, CHC is represented as a tree,where information comes from end-nodes to root-node and merged on each parent node.

For the current case of three robots in a group, the leaderchanging method [10] replaces the distribution layer inside the group. To define the behavioristic roles for a group of robots a linguistic variablep= “pattern of layer” is implemented. It uses three scale levels ofM= {“lower layer”, “middle layer”,“top layer”}. According to this, many alternatives ofPcan be represented in the following form:

Then, it is necessary to determine the characteristics for evaluation of the robot, and the next is offered:

c1= “visibility of a goal”;

c2= “the heuristic distance to the goal”;

c3= “density of obstacles within FOV”;

c4= “visibility of other neighbor robots”;

c5= “availability of communication channel in-group”;

c6= “workload (amount of currently calculated tasks)”.

These characteristics are used in a set describing each of theith robots separately

Because each of the characteristics has a different value, it is necessary to make normalization for each of them for further calculation.

Visibility of a goal calculation can be performed using the following equation:

wherevisis the percentage of a visible part of the object.

The heuristic distance to the goal then is defined by:

wherelhminandlhmaxare the minimum (12) and maximum(13) heuristic distances to the goal among the robot;lhiis the current distance to evaluate.

c3describes the relation between the total length of obstacles that appeared along the same arc within FOV to the total length of this arc. The radius of the arc is equal to the distance to an object (striking distance).whereloiis the arc length ofith obstacle.

Each of the characteristics must have weight based on the predetermined set of weights

Evaluation of theith robot ability for data transferring takes the following form:

To determine the value of linguistic variable we use three types of membership functions [53], where the extreme values(“low level” and “top level”) will determine Z-shaped (17)and the S-shaped (18) functions, the degree of belonging to the “middle level” value is based on trapeze-like membership function (19). The general formulas are represented below:

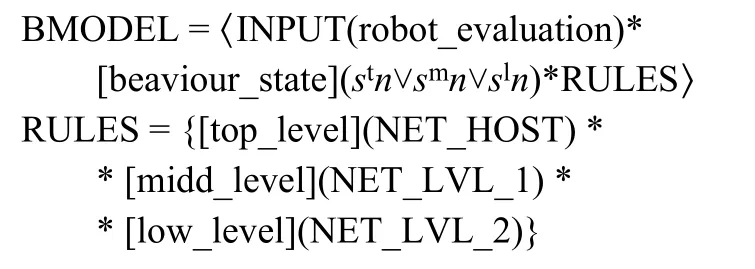

Using the previous method of describing (extended algebra of algorithms) for communication model, the hierarchical structure for data transfer is determined as follows:

where “NET_HOST” means robot becomes a host for data transferring (top-level), “NET_LVL_1” and “NET_LVL_2”for determining the network level for communication (middle and low levels);nis a kind of fixed state (low, middle or high).

In terms of fuzzy logic, it can be described using next IFTHEN rules type:

IF robot_evaluation IS top_level, THEN network_lvl EQUALS host

IF robot_evaluation IS mid_level. THEN network_lvl EQUALS lvl_1

IF robot_evaluation IS low_level, THEN network_lvl EQUALS lvl_2

The scheme of PIF for the current case represented in Fig. 8,as well as further shown as pseudocode (Algorithm 1).According to this, data transferring initiates when one of the robots in the group sends messages to others to start data transferring, and occurs when: 1) robot needs additional data for further navigation, or 2) robot has collected enough portion of information from TVS that seems to be transferred to others in the group. The voting process period used for the evaluation of each robot in a group by (16).

The compilation of data transferring channels performed at the network forming period. The data exchange period is used to interchange the accumulated data according to the topological structure of the network. After that comes the data merge. The last two periods have floating time depending on the amount of data accumulated by each robot. The proposed dynamic data exchange network forming method extends the potential of our novel TVS. It overlaps an ability of single robot navigation with a cloud-like common knowledge base within the RG to improve the efficiency of dead reckoning.

Fig. 8. Margin data about the environment.

Algorithm 1: RG Data Exchange Pseudocode Require: (Initial parameters)Robotic group: G = {r1,··, rs}Robotic group size: s 1: Estimate each robot in group 2: if ri is “top layer” then 3: Get, merge and send data using “mid layer”4: end if 5: if ri is “mid layer”6: if exists “low layer” then 7: Get data from “low layer” and merge it 8: end if 9: Send, wait and receive data from “top layer”10: if exists “low layer” then 11: Merge and send data to “low layer”12: end if 13: Merge and send data to “mid layer”14: end if 15: if ri is “low layer” then 16: Send, wait and receive data from “mid layer”17: end if

V. Algorithms Implementation for the RG

Nowadays simulation platforms cover a lot of tools and features, which can provide simulations very close to real life.Most of them use different C/C++ like algorithmic languages,LabVIEW, MATLAB, etc. Following applications are the most common software to simulate the robotic processes:

1) Virtual Robotics Toolkitis focused on STEM and is appropriate for students and researcher teams who are involved in robotics competitions.

2) V-REP [54]:is a useful 3D simulator for the educational process, allows the modeling of complex systems, individual sensors, mechanisms, etc.

3) Webots [55]:is a software product of Swiss Company Cyberbotics. It provides supports of different programming languages like C/C++, Java, Python, URBI, and MATLAB.Moreover is compatible with third-party software through TCP/IP.

4) Gazebo [56]:can simulate complex systems and a variety of sensor components. It is helpful in developing robots intended for interaction, to lift, to push or grab objects;and for activities that require recognition and localization in space.

To prove the theoretical basis of the above-presented tasks the framework for simulation and RG collaboration was used.This presented framework has been developed in Unity 5 [57],which is a multiplatform engine provided with different features and tools. The framework is developed using the programming language C# using the MonoDevelop integrated development environment (IDE) for Windows 10. In the simulation, four different random scenes (Fig. 9) were analyzed.

All the methods described in Sections II–IV can be combined in one RG behavior algorithm (Algorithm 2). It considers an initial robotic groupG. Each robot is described as a set of Cartesian coordinates, velocityviand scanning accuracy (resolution)ai. For each robot, the algorithm initializes these variables and maps (lines 1–4). Next,according to terrain dimensions, it splits the map into equal sectors (line 5). Each ofirobots for own sector creates the queue of secondary objectivesXi(for optimal trajectory planning) and pushes to the end of the queue the main objectivexm. While the robot did not reach the main objective in the queue, it will repeat the lines 10–34. The robot constantly provides the feedback for data exchange process initiation (lines 11–13), if such exchange requested it participates in the process. After visual data frame obtaining from TVS (lines 14–15) it reviews each point in the frame and sets as non-walkable all corresponding cells in the map (lines 16–19).

According to Section II, after obstacle detection (line 20)the algorithm uses a resolution and speed control based on the accuracy zone range (lines 21–29). Then the robot initiates data exchange to update and share its knowledge about the environment (line 30) with other group members, gets the path to the current objective in the queue (line 31). If the current objective is reached, it pulls next from the queue (lines 32–34).

Algorithm 2: RG Behavior Algorithms Pseudocode Require: (Initial parameters)Robotic group: G = {r1,…, rs}; Group size: s Terrain: T; Terrain dimensions: d Speed and limits: {Slow, Savg, Shigh},Resolution: {Rlow, Ravg, Rhigh}Accuracy zones radiuses: {Zlow, Zavg, Zhigh}Main objective locations: xm Secondary objectives locations Xi = [xi1,…, xin]Pathfinding function: A(T, xij), where T = {t1,…, ts}Distance to obstacle function: D(li, F)where F is the frame obtained from TVS TVS to map coordinates function: C({x,y,z}, {b,a}),where {x,y,z} – Cartesian coordinate from TVS,{b,a} – dimensions of map 1: foreach ri in G 2: Initialize vi, ai and li for robot.3: Initialize map M = [t11,..., t1a;...; tb1,..., tba]4: end foreach 5: Split T in s parts → T = {t1,..., ts}6: Generate secondary objectives locations 7: Xi = [xi1,..., xin] for each robot ri in G 8: Xi add xm → Xi = [xi1,..., xin, xim]9: while xm not reached 10: foreach ri in G 11: if received data exchange request then 12: Initiate Data Exchange (Algorithm 1)13: end if 14: get frame F from TVS 15: F = [f11,..., f1r;...; fq1,..., fqr]16: foreach f in F.17: c ← C(f, dimensions(M))18: M(c) ← not walkable 19: end foreach 20: obstacle ← D({xi,yi,zi}, F)21: if obstacle ≤ Zlow then 22: {vi, ai} ← {Slow, Rlow }23: end if 24: if Zlow < obstacle ≤ Zavg then 25: {vi, ai} ← {Savg, Ravg}26: end if 27: if Zavg < obstacle ≤ Zhigh then 28: {vi, ai} ← {Shigh, Rhigh}29: end if 30: Initiate Data Exchange 31: path ← A(T, xij)32: if xij is reached then 33: xij ← pull (Xi)34: end if 35: end foreach 36: end while

Fig. 9. Graphical representation of modeling scenes.

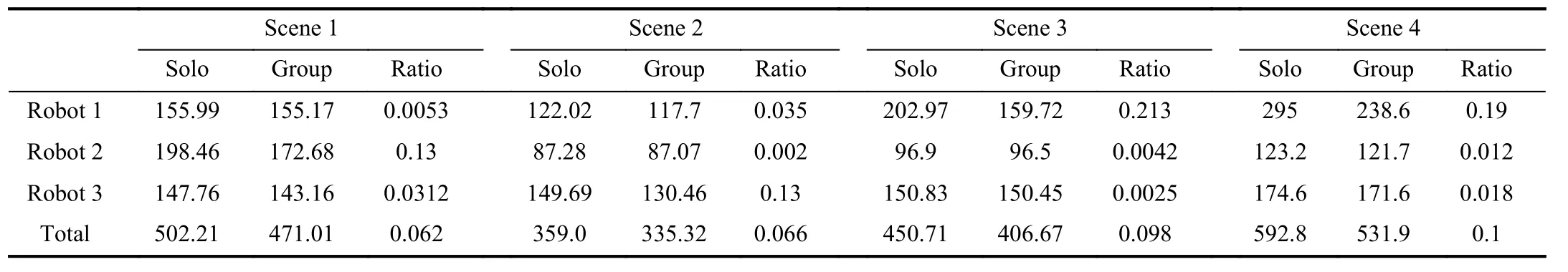

To receive the results for each scene three scenarios were modeled. In the first scenario robots are moving independently among each other (no knowledge sharing and data exchange), i.e., “no common knowledge”. In the second scenario (“with common knowledge”) three robots are fusing obtained information and are using common knowledge about terrain for path planning (implemented data exchange method). In the last scenario, the group is moving with the map of terrain, i.e., “with the pre-known territory”. In each scenario, robots have to reach their personal goals and then get to a common point. A group of three Pioneer 3-AT-like robots with TVS is modeled. This robotic platform was overviewed previously in work [16], also this article describes the kinematics of a single robot. For all of the scenarios in each scene, 100 simulations were executed. Each simulation in the start point uses the same initial parameters (position,velocity, the accuracy of scanning, main and secondary goals)of robots. The results of the modeling and accumulated data are represented in Fig. 10.

Reviewing the obtained results, it becomes visible on the graphs that exist trajectory lengths deviation for each robot in all three scenarios (Table II). For example, Robot 1 in Fig.10(b) for the case of “no common knowledge” has a deviation of 4.32%. It means that for each of the modeling iterations the robot had different routes to achieve its goals. In general, its average route maintains the same. Still for some cases, shown as high peaks in Fig. 10(b), the route becomes an outlier, in regard to the average route length.

Another observation can be mentioned where pre-known territory does not always give a shorter trajectory length. This can be found in Fig. 10(a) (Robot 2), Fig. 10(b) (Robot 1,Robot 2, Robot 3), Fig. 10(d) (Robot 3) for the case “with the pre-known territory”.

Both mentioned observations are caused by specialties of applied A* algorithm and, for the first case, by unknown territory uncertainties. According to the principle of used TVS, as mentioned earlier in Section II, for the dead reckoning were decided to adopt the A* algorithm. Its heuristic nature and structure provides a suitable solution for our needs in path planning. The matrix-based orientation of the algorithm solves the task of discrete mapping used for robots dead reckoning and gives next benefits: fast conversions of coordinates obtained from TVS to path planning map reduces the need to post-process the point clouds from TVS to map rebase in case of data exchange and gives a faster calculation time, compared to Dijkstra algorithm[58] for example.

Implementation of the common knowledge base decreases trajectories deviation and tends them to the optimal solution(not taking into account the individual anomalies that have occurred (peaks on Fig. 10(a) and Fig. 10(b), Robot#3 “with common knowledge”)). TVS cause all the deviations in robots routes, mostly because of the order of obstacle detection.

The summary of the modeling is presented in Table III.Comparing averaged distances obtained during the modeling can be observed that the use of a common knowledge base has advantages in all of the scenes. The result shows that RG with implemented data exchange method has averaged group trajectory length shorter from 6.2% (Table III, Scene 1) up to 10% (Table III, Scene 4), comparing to distances of individual autonomous robotic trajectories (when using non-group movement). Under the group trajectory length, a sum of the individual trajectories was considered. Scaling the results for individual robots in-group the improvement of trajectories length can reach up to 21.3% (Table III, Scene 3, Robot 1).

Fig. 10. Trajectory lengths (a) “Scene 1”; (b) “Scene 2”; (c) “Scene 3”; (d) “Scene 4”.

Now it is obvious that the use of the data exchange method for merging individual knowledge into common has a positive influence on RG movement and dead reckoning. Another question occurs: What is the effectiveness of individual robots in the group while sectoring the terrain in case of a dispersed initial placement (sectoring the terrain).

The most important goal of the present research is to offer the best solution to the stated problem. Fig.10 in generalshows that our solution (green graph, applying the common knowledge obtained by fusion of scanned data fromngroup members) always propose appropriated solution in regard to path planning, which sometimes even can be better than“almost ideal case”, when the configuration of environment is preliminary known (particular the second case) in Fig.10. This case corresponds to the practical situation when the real scene is cluttered by a mix of continued and small obstacles randomly placed within the considered frame.

TABLE II Standard Deviation of Trajectory Lengths (%)

TABLE III Motion Planning Comparing Results (The Normalized Units of Framework Used for Distances)

In our opinion, such a variant is most probable in real life for the considered task. Moreover, in the considered task the existence of preliminary known environment (inspection after disasters) is almost impossible. So, this circumstance we consider as one of the significant obtained contributions in our research.

Regarding the reasoning of such unexpectable behavior of our algorithm, we can mention the next.

Search A* (in a pre-known territory) in computer science and mathematics, is the search algorithm for the first best match on a graph that finds the least cost route from one vertex (initial) to another (target, final). The particular Scene 2 in Fig. 10(b) demonstrates this principle:

In the case of “with the pre-known trajectory” the algorithm not consider all the possible set of trajectories, but stops on the first arbitrary obtained an appropriated solution (by the nature of any heuristic algorithms), i.e., robots are using not the closest path but the first best match existed on the map in current environment.

However, such a conclusion based only on simulation analysis cannot be final, for its proof strongly requires real experimentation, because any complex phenomena in real life can have many intercrossed reasons or sub-components.

The trajectory lengths deviation decreases on each particular scene. According to our goal, the mentioned effect should perform on the most possible versatility of the considered scenes, with the quite different situation of configurations.This effect really appears in herein presented results, if we will consider the averaged entropy on all above simulated situations. That is illustrated in Tables II and IV.

TABLE IV Standard Deviation of Trajectory Lengths(Averaged Values) (%)

The trajectory lengths deviation decrease in Table II is obvious in all scenes for “with common knowledge” and“with the pre-known trajectory” cases. However, presenting the data as the averaged values for each robot in all scenes(Table IV), it can be found that the trajectory lengths deviation decrease in the next order “no common knowledge”> “ with common knowledge” > “ with the pre-known trajectory”. The greater is the value of informational entropy,the greater is the repetitive route deviation.

Laser scanning TVS, as an alternative to camera vision, can give the exact coordinates of any selected point on the surface of the obstacle. However, they cannot process all the surfaces at the same time and require a certain time to scan a 3D sector.The good solution to this problem is to split the terrain into sectors, sharing the task among robots in the group. Such a distributed scanning will give more explicit information about the position of obstacles inside this sector, and possible deadends for robots, which maybe cannot view this sector part from its current position.

Fig. 11. Obstacles types.

According to the TVS specifics, the RG will split terrain into sectors for their movement. To back this up the example(Fig. 11) will be considered. While a single robot moves(Fig. 11(a)), it detects an obstacle “A” and the goal point still is in a “blind spot”. Fig. 11(b) represents another situation,similar to the group movement in [59]. Here the first robot still detects obstacle “A”, the second robot moves closer to it.In the FOV of the second robot, part of obstacles “A” and “B”appears, but not both of them completely. In this case, the robots have over-detailed knowledge about one part of obstacle “A” and not so complete knowledge about the obstacle “B”. The goal is still invisible in this particular case.In Fig. 11(c) the zone is separated by distancing of the robots into two sectors (“sector A” for the first robot, “sector B” for the second). As can be observed in the case of Fig. 11(c),agents have enough detalization of an obstacle “A”,information about the existence of obstacle “C” , and moreover, they have found the goal (object of interest). Using this information, the trajectories of each robot is recalculated for reaching the goal. Let us review the effectiveness of robots basing on the “Scene 4” (Fig. 9(d)). The current article under effectiveness will suppose the amount of unique data obtained by a single robot comparing to the common data fusion.

As was mentioned above each robot creates during its movement a binary map of the obtained data about obstacles(i.e., individual mapsRm1,Rm2,…,Rmnof allnparticipants of the robotic group, for the current particular casen= 3). The map of obstacles is

For further calculations obtained matrixes will be considered as matrixes of integer values (“true” equals 1 and “false” to 0).Applying the simple operation of matrix addition we will have resulting matrixMr(25) of fused data with overlapped density(the overlapping density, in this case, is ranked by discrete values 1,2,…,n, and it can be defined as the higher the number,the more data repetitions occur in this location). On Figs. 12(a),(b), (c) are presented the individually obtained maps byn(n=3) robots and Fig. 12(d) are fused data. The white areas are the locations where obstacles are not detected, green where obstacles were detected once, blue by two robots, and red by three. So, Fig. 12 shows, that according to the overlapped results of the individually obtained maps by each robot can be estimated the efficiency of environment sectoring and territorial group distribution.

By subtracting the overlapped data from the resulting matrixMr(cells with values more than 1) will get the unique data obtained in the group. The sum of these values will give a total amount of unique values. To calculate the group effectiveness (Ge) of terrain sectoring will be used in the next equation:

whereTouis a total amount of unique detected obstacle(Mrxy= 1) andTois a value of total detected obstacles on a scene.

wherewandhare width and height of the matrixMrcorrespondingly.

Besides the overlapped data, it can be allocated another characteristic, i.e., the ratio of individual data obtained by the robot to total data. Table V shows the results of the analysis of the obstacles. Additional data comparison is presented in Fig. 13.

For these scenes, the average group efficiency equals 75.98%, the data obtained by two robots is 15.53% and by three robots is 8.48% (Fig. 13(a)). Comparing the results of obtained unique data from each robot (Fig. 13(b)) will receive the following values of 36.29%, 13.82%, and 25.86% for first,second, and the third robot respectively.

The approach of sectoring the terrain improves the individual FOV’s of each robot to the extended joint FOV of RG. It gives a sufficiently detailed point cloud of surrounding,combining data from each individual TVS. Such extended FOV helps to avoid unnecessary scanning and decreases the number of calculations required to pass through the already scanned territory for all robots as a whole.

VI. Conclusion

This paper offers the original solution able to improve the collaboration inside RG. All the tasks discussed in this paper have a common objective. The group of robots needs to reach the final goal guided only by information obtained by the author’s original TVS in local interactions with the environment, using motion planning and communication algorithms.

The method of accuracy distances ranging and angle equivalent storage based on [39] provides the data reduction for faster data transferring. The implemented method gives an additional option of the resolution adjustment according to specifics of the environment or current task.

Fig. 12. Examples of robots’ individual data fusion.

TABLE V Quantitative Analysis of Obstacle Maps

The justified application of Bezier curves based on a polygon with four control points did show the improvement of the feasibility of the collective trajectory ofnrobots and its better efficiency. Individually for each trajectory ofn= 3 robots, despite an insignificant general length increase of 5%(comparing to 10 point curves in previous research [16]), the result in bending energy saving is decreased by 29%.Moreover, the approach gives an ability to build continuous and smoother trajectories, and according to Table I provides the averaged efficiency of dead reckoning increase from 6%in a worst-case up to 33% in the best case.

Fig. 13. Unique and general data comparison for each robot in group.

The combined implementation of trajectories approximation, dynamic network forming for data exchange and sectoring the terrain has been promising. Simulation has shown that the use of mentioned improvements applied to the behavior of RG allows stable functioning of the group at lower motion energy costs (bending energy) and decreases the trajectories length. As a result, trajectory length has decreased by 21.3% for individual robots and up to 10% for group in general. Thus, we can conclude that a combined solution of the mentioned task using 3D TVS and 3D-map sharing in RG can increase the effectiveness of individual robot navigation,giving it the advanced knowledge (fused scans of other agents in RG) about sector using the data exchange. At the same time, thanks to provided resolution stabilization, the total quantity of information to store are significantly decreased compared to the raw data sum from each robot. It gives the possibility to release a significant part of memories on an individual robot for complementary task solutions in real-time simultaneously.

Acknowledgements

We want to extend our gratitude to the Universidad Autónoma de Baja California, Instituto de Ingeniería and the CONACYT for providing the resources that made this research possible.

猜你喜欢

杂志排行

IEEE/CAA Journal of Automatica Sinica的其它文章

- Artificial Intelligence Applications in the Development of Autonomous Vehicles: A Survey

- Data-Driven Based Fault Prognosis for Industrial Systems: A Concise Overview

- Review of Antiswing Control of Shipboard Cranes

- Research Progress of Parallel Control and Management

- Effect of a Traffic Speed Based Cruise Control on an Electric Vehicle’s Performance and an Energy Consumption Model of an Electric Vehicle

- Proximity Based Automatic Data Annotation for Autonomous Driving