Application of matching model construction based on grayscale tower scoring in unmanned aerial vehicle network video stitching

2019-08-01

Li Nanyun, Wang Xuguang, Wu Huaqiang, He Qinglin

Abstract: In complex, noncooperative situations, the number of matched feature pairs and the matching accuracy in video stitching cannot simultaneously meet the requirements of subsequent image stabilization and stitching. To address this problem, a method was proposed that scores feature points with a grayscale tower and then constructs a matching model for accurate feature matching. Firstly, exploiting the phenomenon that similar gray levels merge after gray-level compression, a grayscale tower was established to score the feature points. Then, the high-scoring feature points were selected to establish a matching model based on position information. Finally, guided by the positioning of the matching model, regional block matching was performed to avoid interference from global feature points and large-error noise matches, and the feature pair with the smallest error was selected as the final matching result. In addition, in a moving video stream, a mask could be built from the information of preceding and following frames for regional feature extraction, and the matching model could be selectively inherited by subsequent frames to save computation time. Experimental results show that, with the matching model based on grayscale tower scoring, the feature matching accuracy is about 95%, and the number of matched feature pairs in the same frame is nearly 10 times that obtained with RANdom SAmple Consensus (RANSAC). The method balances the number of matches and the matching accuracy without producing large-error matches, and is robust to environment and illumination.

Key words: feature extraction; feature matching; video stitching; grayscale tower; matching model; block matching

CLC number: TP391.4

Document code: A

0 Introduction

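With the rapid development of image processing in recent years, image and video processing have found ever wider application. Static image stitching alone can no longer meet current needs: a static image cannot convey how each object in a real scene evolves over time, and such temporal information is very important in civil as well as national defense applications. Research on video stitching has therefore deepened in recent years.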

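Feature extraction and matching are an essential part of image stitching [1] and are also widely used in image recognition [2], three-dimensional model construction [3] and other fields. The quality of the extracted feature points directly affects the feature matching result, which in turn determines the final accuracy of subsequent stitching, recognition and model construction. Feature matching pairs are the cornerstone of image processing, and the main difficulties at present are the number of matching pairs, the matching accuracy and the matching time.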

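Feature extraction methods based on traditional image algorithms mainly include Scale-Invariant Feature Transform (SIFT) [4], Speeded Up Robust Features (SURF) [5], Oriented FAST and Rotated BRIEF (ORB) [6], Binary Robust Invariant Scalable Keypoints (BRISK) [7], Binary Robust Independent Elementary Features (BRIEF) [8] and Features from Accelerated Segment Test (FAST). The SIFT and SURF descriptors are scale-invariant and rotation-invariant and achieve high matching accuracy, but they are computationally expensive and inefficient. ORB, BRISK and FAST, in contrast, form binary descriptors by comparing differences among the pixels surrounding a feature pixel, and the correlation between two feature points is measured by the Hamming distance [9] between their descriptors.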
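As an illustration of the Hamming distance comparison described above, the following minimal sketch counts the differing bits between two binary descriptors. The function name `hamming_distance` and the 2-byte toy descriptors are assumptions made for this example; they are not taken from the paper.

```python
def hamming_distance(desc_a: bytes, desc_b: bytes) -> int:
    """Count the bits that differ between two equal-length binary descriptors."""
    if len(desc_a) != len(desc_b):
        raise ValueError("descriptors must have the same length")
    # XOR leaves a 1 wherever the two descriptors disagree; count those bits.
    return sum(bin(a ^ b).count("1") for a, b in zip(desc_a, desc_b))


# Toy 2-byte descriptors, chosen only for illustration.
d1 = bytes([0b10110010, 0b00001111])
d2 = bytes([0b10010011, 0b00001101])
print(hamming_distance(d1, d2))  # 3
```

A smaller distance means the two feature points are more similar; identical descriptors have distance 0.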

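Feature matches are mainly screened by K-Nearest Neighbors (KNN) matching or brute-force matching, followed by RANdom SAmple Consensus (RANSAC) [10] to select the inlier set. RANSAC based on a global homography considers the image as a whole: it keeps the matches on the dominant plane of the image and removes those on secondary planes and parallax planes. In other words, when parallax exists, the captured image can be divided into several planes according to scene depth, and RANSAC keeps only the matches on the depth plane with the largest share. In principle this discards the information of some planes, so registration is inadequate when parallax is large. KNN matching effectively avoids the differences between matching pairs caused by parallax and yields matches directly, but because illumination and image content differ between images, a fixed KNN threshold causes large matching errors and cannot be applied directly to video stitching. Neither approach can avoid the irregular false matches caused by noise.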
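To make the role of a fixed KNN threshold concrete, the sketch below applies a 2-nearest-neighbor ratio test to toy descriptors. It assumes that the threshold in question is the common ratio between the best and second-best distances; the paper does not specify this, and the function name `knn_ratio_match`, the default `ratio=0.75` and the 2-D toy descriptors are all hypothetical.

```python
import math


def knn_ratio_match(desc_a, desc_b, ratio=0.75):
    """Return (i, j) pairs where desc_b[j] is a clearly best match for desc_a[i]."""
    matches = []
    for i, da in enumerate(desc_a):
        # Distances from da to every candidate in desc_b, sorted ascending.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio test needs two neighbors
        (best, j), (second, _) = dists[0], dists[1]
        # Keep the match only if the best distance is clearly below the second best.
        if best < ratio * second:
            matches.append((i, j))
    return matches


a = [(0.0, 0.0), (5.0, 5.0)]
b = [(0.1, 0.0), (5.0, 5.2), (2.5, 2.5)]
print(knn_ratio_match(a, b))  # [(0, 0), (1, 1)]
```

Because `ratio` is fixed, a value that works for one pair of frames can admit false matches or reject correct ones under different illumination, which is the weakness the paragraph above points out.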

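This paper seeks a feature matching method that yields many, evenly distributed matching pairs in video stitching, in order to solve the problem that feature points are too few and too unevenly distributed to account for depth across the whole scene.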

3 Proposed algorithm

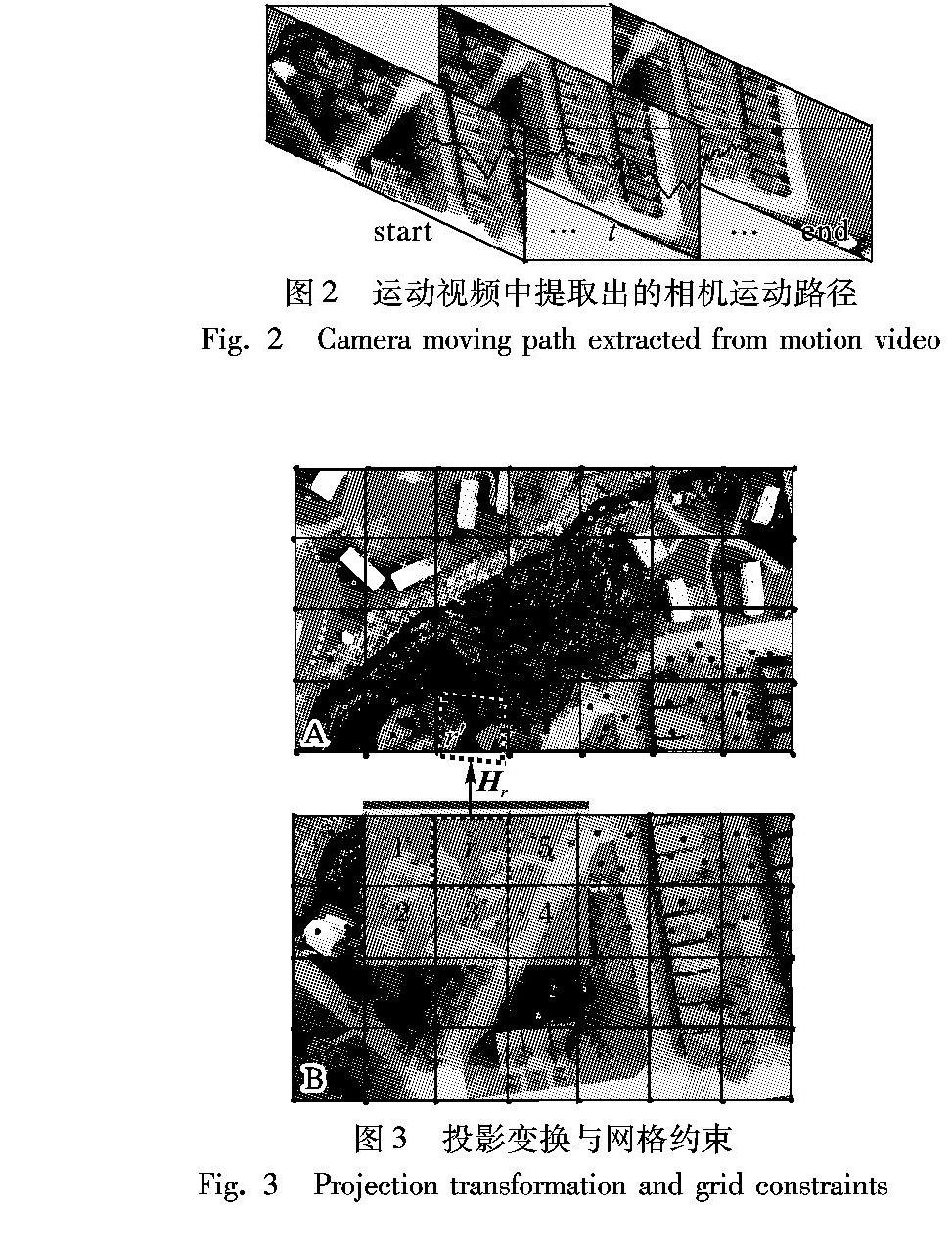

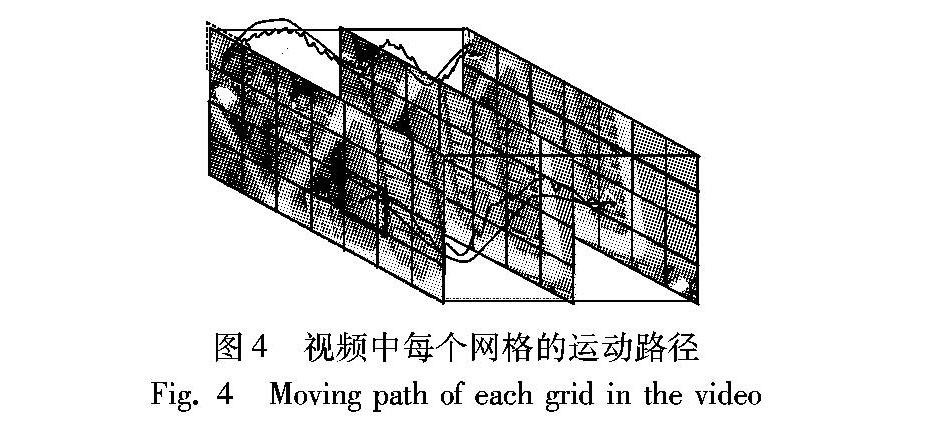

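As described in the preceding introduction to video stitching, obtaining the camera path and the mesh transformation requires that the matched feature point pairs be numerous and as evenly distributed as possible, and that the matches be correct. Traditional feature matching methods can hardly meet these requirements. This paper therefore proposes a feature matching method built on a Matching Model based on Grayscale Tower score (MMGT).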

3.1 Feature point scoring

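When features are extracted from an input image, a good extraction method produces feature points that are scale-invariant and rotation-invariant. The extracted points, however, include noise points and are very numerous, and there is no complete scoring mechanism that accounts for the influence of illumination, which affects the credibility and accuracy of the final matching result. If feature points can be scored, matches between high-scoring points become more credible, the matching model subsequently built on them becomes more credible as well, and the final matching result benefits. Scoring feature points by gray-level reduction can mitigate, to some extent, the influence of illumination and of false feature points.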

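A grayscale image generally has 256 gray levels. In traditional feature point extraction, a Gaussian pyramid is built first; in the difference-of-Gaussian images, each point is compared in gray value with its 8 neighboring points in the same scale and the 18 neighboring points in the adjacent scales above and below, and the extrema are taken as the feature points, which are therefore scale-invariant. A feature point is defined as a distinctive point in the image, and an ideal feature point does not disappear when scale or illumination changes. That is, when the gray levels are compressed, for example when the number of gray levels of the whole image is reduced from 256 to 128, neighboring gray levels merge but good feature points still remain.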
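The merging of neighboring gray levels under compression can be seen in a small example. This is a minimal sketch that assumes 8-bit input values and uniform requantization; the function name `compress_gray_levels` is hypothetical.

```python
def compress_gray_levels(pixels, levels):
    """Requantize 8-bit gray values (0..255) into `levels` uniform bins."""
    if not 1 <= levels <= 256:
        raise ValueError("levels must be between 1 and 256")
    # Integer arithmetic maps value v to the bin floor(v * levels / 256).
    return [p * levels // 256 for p in pixels]


pixels = [100, 101, 200]
print(compress_gray_levels(pixels, 128))  # [50, 50, 100]
print(compress_gray_levels(pixels, 4))    # [1, 1, 3]
```

The adjacent values 100 and 101 fall into the same bin once the image is reduced to 128 levels, whereas the strongly contrasting value 200 stays distinct even at 4 levels.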

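First, a difference-of-Gaussian pyramid is built, and comparing pixel values across its scale spaces yields the initial feature point sets P(n) and P′(n). Next, a circle of radius R is taken around each feature point in these sets; the pixels inside the circle are called illumination-associated pixels. A scale-invariant illumination pyramid is then built: 1) obtain the histogram of the illumination-associated region around the feature point; 2) simulate illumination change by compressing the total number of gray levels of the associated region by different factors; 3) compare the gray-level descent gradient of the associated region on each layer of the gray-level pyramid, and regard the feature point as having vanished once the gradient change falls below a given threshold; 4) the more layers of the pyramid on which feature point P persists, the higher its score and, correspondingly, its quality. The procedure is shown in Fig. 5.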
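The scoring idea in steps 1) to 4) can be sketched roughly as follows. This is not the authors' implementation: the contrast measure (quantized center value minus the mean quantized value of the associated pixels), the tower of level counts, the threshold `min_contrast` and the function names are all assumptions chosen for illustration, and the associated pixels are passed in as a plain list rather than taken from a circle of radius R.

```python
def compress(value, levels):
    """Requantize one 8-bit gray value into `levels` uniform bins."""
    return value * levels // 256


def tower_score(center, neighbors,
                tower=(256, 128, 64, 32, 16, 8, 4, 2), min_contrast=1.0):
    """Count the tower layers on which the point still stands out (assumed measure)."""
    score = 0
    for levels in tower:
        q_center = compress(center, levels)
        q_mean = sum(compress(v, levels) for v in neighbors) / len(neighbors)
        # Assumed stand-in for the gradient test: the point vanishes once its
        # quantized contrast against the associated pixels drops below the threshold.
        if abs(q_center - q_mean) < min_contrast:
            break
        score += 1
    return score


strong = tower_score(200, [50, 52, 48, 51])    # high-contrast point
weak = tower_score(110, [100, 102, 98, 101])   # low-contrast point
print(strong, weak)  # 8 4
```

Under these assumptions the high-contrast point persists on all eight layers, while the low-contrast point merges with its surroundings at 16 levels and receives a lower score. Uniform quantization can let a weak point survive when it happens to straddle a bin boundary, so a practical version would need a more robust contrast measure.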

3.2 Construction of the matching model

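The scoring step above yields scored feature point sets P(n) and P′(n), in which a higher score indicates a more reliable feature point. The next step is to build a matching model from these scores, so as to increase the number and accuracy of feature matching pairs and avoid large-error matches caused by noise points.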

5 Conclusion

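This paper studies in depth the feature matching involved when a multi-node Unmanned Aerial Vehicle (UAV) network captures high-altitude video and stitches it into a panoramic video in real time. A feature matching method was proposed that scores feature points with a grayscale tower and then builds a matching model. It effectively addresses the problem that the extracted feature points are too few and unevenly distributed. By exploiting an inheritance mechanism for information in the video stream, the matching model and the region of interest are passed on to subsequent frames, with good results. The method still falls short in real-time performance, however; future work should parallelize the mutually independent steps of the matching process across multiple threads to speed up matching. The grayscale tower could also be extended to other applications, although care must be taken with false contours in image segmentation.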

References

[1] IVAN S K, OLEG V P. Spherical video panorama stitching from multiple cameras with intersecting fields of view and inertial measurement unit[C]// Proceedings of the 2016 International Siberian Conference on Control and Communications. Piscataway, NJ: IEEE, 2016: 1-6.

[2] ZHANG L, HE Z, LIU Y. Deep object recognition across domains based on adaptive extreme learning machine[J]. Neurocomputing, 2017, 239: 194-203.

[3] YANG B, DONG Z, LIANG F, et al. Automatic registration of large-scale urban scene point clouds based on semantic feature points[J]. ISPRS Journal of Photogrammetry & Remote Sensing, 2016, 113: 43-58.

[4] LOWE D G. Distinctive image features from scale-invariant keypoints[J]. International Journal of Computer Vision, 2004, 60(2): 91-110.

[5] HSU W Y, LEE Y C. Rat brain registration using improved speeded up robust features[J]. Journal of Medical and Biological Engineering, 2017, 37(1): 45-42.

[6] RUBLEE E, RABAUD V, KONOLIGE K, et al. ORB: an efficient alternative to SIFT or SURF[C]// Proceedings of the 2011 International Conference on Computer Vision. Washington, DC: IEEE Computer Society, 2011: 2564-2571.

[7] LEUTENEGGER S, CHLI M, SIEGWART Y. BRISK: binary robust invariant scalable keypoints[C]// Proceedings of the 2011 International Conference on Computer Vision. Washington, DC: IEEE Computer Society, 2011: 2548-2555.

[8] CALONDER M, LEPETIT V, STRECHA C, et al. BRIEF: binary robust independent elementary features[C]// Proceedings of the 11th European Conference on Computer Vision. Berlin: Springer, 2010: 778-792.

[9] MOR M, FRAENKEL A S. A hash code method for detecting and correcting spelling errors[J]. Communications of the ACM, 1982, 25(12): 935-938.

[10] SANTHA T, MOHANA M B V. The significance of real-time, biomedical and satellite image processing in understanding the objects & application to computer vision[C]// Proceedings of the 2nd IEEE International Conference on Engineering & Technology. Piscataway, NJ: IEEE, 2016: 661-670.

[11] BROWN M, LOWE D G. Automatic panoramic image stitching using invariant features[J]. International Journal of Computer Vision, 2007, 74(1): 59-73.

[12] GUO H, LIU S, HE T, et al. Joint video stitching and stabilization from moving cameras[J]. IEEE Transactions on Image Processing, 2016, 25(11): 5491-5503.

[13] NI G Q, LIU Q. Analysis and prospect of multi-source image registration techniques[J]. Opto-Electronic Engineering, 2004, 31(9): 1-6. (in Chinese)

[14] ZARAGOZA J, CHIN T J, BROWN M S, et al. As-projective-as-possible image stitching with moving DLT[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(7): 1285-1298.

[15] ZHU Y F, YE X Q, GU W K. Mosaic panorama technique for videos[J]. Journal of Image and Graphics, 2006, 11(8): 1150-1155. (in Chinese)