A Sequence Image Matching Method Based on Improved High-Dimensional Combined Features

2018-11-21,,,

, , ,

College of Astronautics, Nanjing University of Aeronautics and Astronautics, Nanjing 210016, P. R. China

Abstract: Image matching technology is theoretically significant and practically promising in the field of autonomous navigation. Addressing shortcomings of existing image matching navigation technologies, the concept of high-dimensional combined feature is presented based on sequence image matching navigation. To balance between the distribution of high-dimensional combined features and the shortcomings of the only use of geometric relations, we propose a method based on Delaunay triangulation to improve the feature, and add the regional characteristics of the features together with their geometric characteristics. Finally, k-nearest neighbor (KNN) algorithm is adopted to optimize searching process. Simulation results show that the matching can be realized at the rotation angle of -8° to 8° and the scale factor of 0.9 to 1.1, and when the image size is 160 pixel ×160 pixel, the matching time is less than 0.5 s. Therefore, the proposed algorithm can substantially reduce computational complexity, improve the matching speed, and exhibit robustness to the rotation and scale changes.

Key words: sequence image matching; navigation; Delaunay triangulation; high-dimensional combined feature; k-nearest neighbor

0 Introduction

Image matching aided navigation, as one autonomous navigation technology, is of great theoretical value and has been widely used in many fields such as robot, unmanned aerial vehicle and cruise missile[1-2], However, the imaging difference between images usually leads to large calculation in the matching procession[3]. Even though the speed of image matching has been greatly improved, its real-time performance is nevertheless hard to meet the requirements in many scenarios[4]. Therefore, image matching navigation can only assist other navigation systems, forming aided navigation systems to realize high-precision and real-time navigation[5].

To tackle the shortcomings of traditional image navigation, sequence image matching navigation has prominent advantages and has attracted an increasing attentions. Since two adjacent frames are obtained at the same time under the same condition, sequence images just have the same noise distribution and small geometric deformation, which usually surface as translation and small rotation[6]. So matching between sequence images can evidently reduce the time costs and improve the accuracy and speed of image matching substantially.

At present, the studies on image matching navigation mainly focus on matching algorithm, search strategy, filtering algorithm[7], adaptation region analysis[8], and so on. Among them, sequence image matching navigation has heen thoroughly studied. Bodensteiner et al. proposed a method matching video streams to lidar data accurately and efficiently using key frames, and introduced the process of simultaneous localization and map building in detail[9].Ref.[10] proposed a positioning method based on monocular image, computing six-freedom degrees camera pose estimates continuously by matching natural features from input video and three-dimentional points reconstructed by structure from motion. In the algorithm, the fast key point tracker based on binary feature descriptor was used to realize the matching of two-dimentional to three-dimentional data, so the online scale invariant feature extraction was avoided, and the delay of each frame was reduced. Ref.[11] presented an improved Radon transform method to extract the line segments from the edges of the image, which were represented by four dimensional vectors. Time consumption of vector matching was greatly reduced. Ref.[12] adopted a novel algorithm, which constructed high-dimensional combined features based on stable branch points, and determined the position relation of two adjacent frames according to the geometric constraint relationship between the features. The algorithm combined the advantages of point features and line features, and eliminated the adverse effect of inaccuracy line segments extraction on matching results. However, there might be uneven distribution of features and redundant calculation if using the traversal to construct high-dimensional combined features. The requirements for image quality are too strict to meet.

A fast sequence image matching algorithm based on improved high-dimensional combined features is proposed in this paper. In the process of constructing high-dimensional combined features, Triangulation is introduced to achieve better dispersion of features, less repeated calculation, and improved matching performance. Meanwhile, the regional attributes of the features are added, so the matching based on the geometric as well as regional characteristics can greatly improve the robustness of the algorithm. Finally, this paper adoptsk-nearest neighber (KNN) algorithm based on KD-tree structure to optimize the search process and to further improve the efficiency of the algorithm. Simulation results show that the proposed algorithm can improve the real-time performance of image navigation, and meet the requirements of accuracy and robustness of the navigation system.

1 Sequence Image Matching Method

1.1 Improved high-dimensional combined feature

The high-dimensional combined feature is a pair of intersecting lines which is composed of three stable branch points[13]. These branch points are extracted from the edged binary images after denoising and thinning, and each branch point has more than two neighborhood points.

Each high-dimensional combined feature contains three branch points, which are defined as vertexz(zx,zy), the first endpointz1(z1x,z1y), and the second endpointz2(z2x,z2y), where theycoordinate value ofzis the smallest, and the first endpointz1is the largest, if theycoordinate values are equal. The point with the smallestxcoordinate value is defined asz, and the point with the largestxcoordinate value is the first endpointz1[12-14]. In fact, this feature is not the basic feature directly detected from the image, but constructed by basic features according to some rules. Therefore, it is defined as a high-dimensional combined feature.

To balance the distribution of high-dimensional combined features, Delaunay triangulation algorithm is introduced to divide the branch points into a uniform triangle network before constructing the high-dimensional combined features.

Delaunay triangulation, widely used in practice[15], has two important criteria: Empty circle and maximizing the minimum angle[16]. These criteria guarantee that Delaunay triangulation network is unique. No matter where the feature construction starts, the final result will be consistent and adding, deleting or moving a point will only affect adjacent triangles.

Delaunay triangulation is implemented by incremental insertion method in this paper whose time efficiency and space efficiency are relatively higher. The process of constructing improved high-dimensional combined feature is shown in Fig.1, where only one high-dimensional combined feature is marked in the diagram. After the extraction of stable branch points, Delaunay triangulation for the points set is done. Then three vertices of each triangle in the triangle network are used to construct a high-dimensional combined feature.

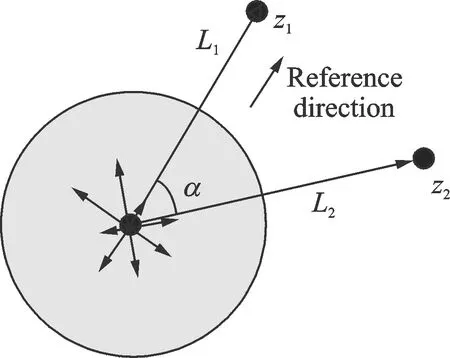

Fig.1 Construction of improved high-dimensional combined features

In Fig.1,L1,L2represent the lengths of the two segments of the pair andarepresents the angle between two line segments, which are described as

(1)

(2)

Usually, the features are not obvious enough and the information provided is limited when the branch points are too close. Contrarily, the features are not stable enough and large matching errors are easy to occur when the branch points are far away. Therefore, eliminating the unconspicuous and unstable features can further improve the matching performance. The eliminating rule is as follows

(3)

whereτ1,τ2are boundary constants whose value can be modified according to the specific experimental environment. The number of high dimensional combined features can be adjusted based on differentτ1andτ2, which directly affects the accuracy and speed of matching. When imaging conditions or image size change, the matching time can be controlled by adjustingτ1andτ2, so the algorithm is flexible.

Figs.2(a),(b) show the results of construction of features without triangulation and the improved high-dimensional combined features, respectively.

Fig.2 Distribution of features

It is observable that the improved high-dimensional combined features retain the pros of unimproved features, with the advantages of point features as well as line features. Further, the number of features is obviously reduced. The distribution is more uniform. And no features aggregation exists.

1.2 Image matching based on geometric and regional characteristics

The improved high-dimensional combined features have some geometric characteristics, and the image matching can be greatly simplified according to the geometric constraints between features, so the vertex angle and the length information can be used to describe the feature.

The vertex angleαand the proportion of two lengthsL1/(L1+L2) are selected as the geometric information descriptor of the feature, which is a two-dimensional vectordg

dg=(dg1,dg2)=(a1α,a2L1/(L1+L2))

(4)

wherea1,a2are weight coefficients.

For more accurate matching relation between the two features, the regional information is added on the basis of the geometric information of the feature[17]. Vertexzof the high-dimensional combined feature is chosen as the center of the region, and the reference direction is pointed fromzto the first endpointz1, as shown in Fig.3.

Fig.3 Construction of regional information descriptor

The gray scale gradientm(x,y) and gradient directionθ(x,y) of the pixels in a circular neighborhood centered onzwith a radius ofL1/2 can be calculated

m(x,y)=

(5)

θ(x,y)=arctan[(L(x+1,y)-L(x-1,y)]/

[L(x,y+1)-L(x,y-1)]

(6)

The gray scale gradients are divided into 8 intervals according to the deviation of the gradient direction relative to the reference direction, so the accumulated value of gray scale gradients in each intervaldr1,dr2,…,dr8can be used as the regional information descriptor, which is an eight-dimensional vectordr

dr=a3(dr1,dr2,…,dr8)

(7)

Therefore, the descriptor of the improved high-dimensional combined featuredis a 10-dimensional vector

d=(d1,d2,…,d10)=(dg,dr)=(a1α,a2L1/

(L1+L2),a3dr1,a3dr2,…,a3dr8)

(8)

Considering that each dimension information descriptor should have similar weight coefficient,a1,a2must satisfy the following relations

(9)

It can be calculated thata1=1/(8π),a2=1/4.Finally, whether the two features are matched can be estimated according to whether the Euclidean distance between two features equals to a certain threshold value.

When Eq.(10) is satisfied, these two features are considered to be matched, and then the position relation of them can be calculated

(10)

whereεis a threshold whose value can be modified according to the specific experimental environment.

The method integrating geometric information descriptor and regional information descriptor can improve the real-time performance and robustness of the algorithm. Moreover, the radius of the region is not a fixed value, but determined by the length of the line segment of the given feature, which ensures that the algorithm has certain scale invariance.

1.3 Search strategy based on KNN

Since the ergodic searching can hardly meet the real-time requirements, we choose KNN algorithm, a supervised learning classification algorithm based on KD-tree structure[18], to optimize the search process and reduce the matching time.

KD-tree is a data structure that divides data points ink-dimensional space, and is suitable for fast nearest neighbor searching and approximate nearest neighbor searching in high dimensional space. In the proposed algorithm, the features of the last frame image is stored in KD-tree structure, that is, the features set of the last frame image is selected as the training sample set, so the sample data is 10-dimensional data.

The clustering strategy of KNN is used to quickly find two features, the nearest and the next nearest to the feature to be matched, and obtain two corresponding distanceslmin,l2. If Eq.(11) holds, the closest feature in the previous frame picture is considered to match this feature,σis a threshold, and the value ofεandσdirectly affects the accuracy of the matching results

(11)

After all the features in current frame image appear in the matching judgment, the matching positions can be counted.

If the matching points concentrated in a certain area, the position is considered as the correct matching point between the current frame image and the last frame image. Then the confidence factorΨcan be introduced to evaluate the credibility of the matching result.

(12)

The position relation of the current frame image with the reference image can be derived on the basis of sequence image matching, but certain cumulative errors would inevitably appear after continuous matching of adjacent frames sequence images. Therefore, so the matching of the real time image with the reference map at regular intervals is needed, based on the improved sequence image matching method.

The flow chart of the algorithm is shown in Fig.4.

Fig.4 Flow chart of the algorithm

2 Simulation

A series of testing experiments to verify the proposed algorithm were performed on a computer with a CPU of Intel CORE(TM) i3-2330M, 2.2 GHz dominant frequency and 4.00 GB memory storage, and the programming language was Visual C++ 6.0.

Firstly, the numbers of branch points, unimproved high-dimensional combined features and the high-dimensional combined features improved by triangulation of different images captured by different sensors were counted, as shown in Table 1, and the results of the distribution of features using this algorithm are shown in Fig.5. It can be seen that the number of high-dimensional combined features is closely related to images quality and sensor types. The features number of synthetic aperture radar (SAR) images is much more than that of optical satellite images. Since Delaunay triangulation algorithm divided the branch points into a triangle network, and the three points of each improved high-dimensional combined feature could only be located on a triangle in the network, the number of high-dimensional combined features was reduced obviously after improvement, which can effectively avoid the aggregation of high-dimensional combined features caused by the aggregation of point features. Therefore, the using of triangulation improves the dispersion of features, reduces the number of features, and then reduces the computational costs and space occupation, which is beneficial to improve the performance of image matching navigation.

Several sets of tests were conducted to verify the effectiveness of the proposed algorithm based on improved high-dimensional combined feature in the presence of translation, small scale change and rotation of images. In the experiment, the size of sequence image was 160 pixel×160 pixel; boundary constantsτ1=5,τ2=40; thresholdsε=0.02,σ=0.2. Considerable experiments have verified this is the best condition. The simulation results are shown in Table 2.

Table 1 Comparison of the number of features

Image classificationNameSize/(pixel×pixel)Number ofbranch pointNumber ofumimproved featureNumber of theimproved featureOptical satellite imagePicture 1300×3008116750Picture 2300×30010630156SAR imagePicture 3160×1601271 457184Picture 4160×16092577137

Fig.5 Results of the distribution of features by the proposed algorithm

Number of fea-tures in currentframe imageNumber of fea-tures in lastframe imageThe correctmatchingpointRotationangle/(°)Scale factor/timeMatching resultsMatchingpointRotationangle /(°)Scale fac-tor/timeRunning100111(20,20)01.00(20,20)01.000.2197756(12,9)-21.10(12,9)-2.331.070.269125138(17,15)30.96(17,15)3.090.950.397146184(7,13)-81.05(7,12)-7.521.050.331137126(9,6)-50.90(9,6)-5.210.920.305183196(7,11)81.06(7,11)8.341.050.384

In Table 2, it can be seen that the matching time was within 0.5 s at the rotation angle of -8° to 8° and the scale factor of 0.9 to 1.1,so the speed of the algorithm was relatively fast. This fast sequence matching algorithm proposed can meet the requirements of navigation system of real-time performance, robustness and accuracy.

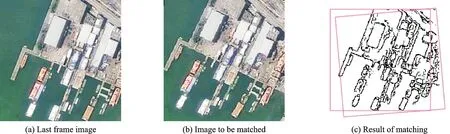

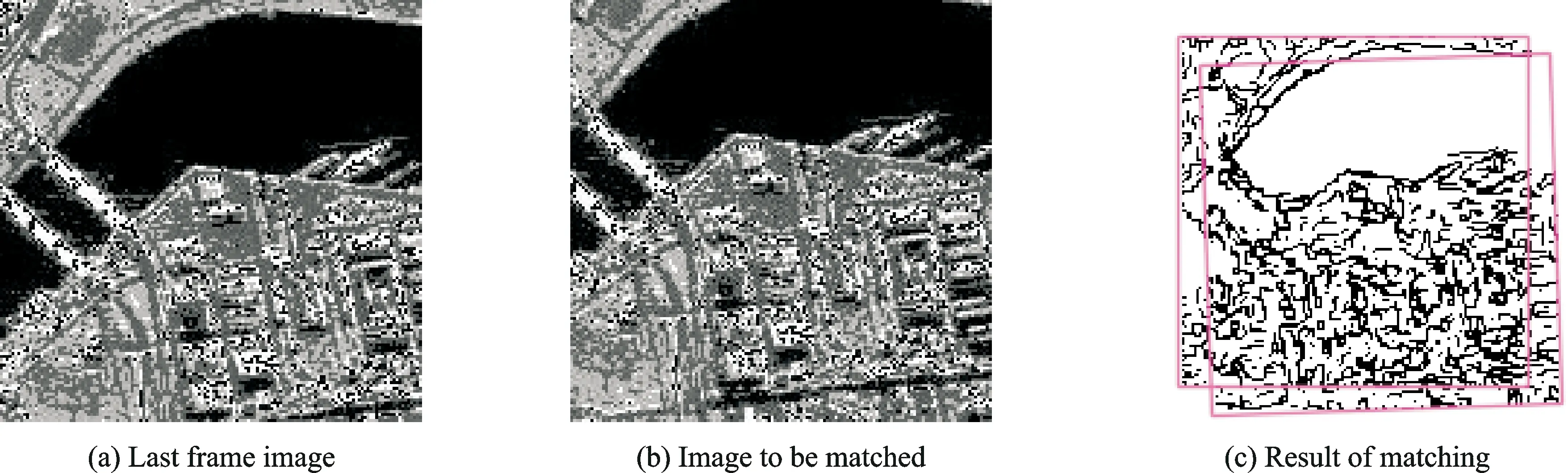

In order to further verify the effectiveness and superiority of the proposed algorithm, we chose the images from different sensors, and compared the proposed algorithm with the algorithm without KNN, the algorithm based on unimproved high-dimensional combined features and the algorithm based on SIFT feature. Given that only translations and rotations of small degrees usually exist in reality, no scale change was adopted in each group experiment. The rotation angles were -5° and -2°,and their correct matching points were (13,7) and (12,10),respectively.Figs.6,7 show the matching results of two group images using the proposed algorithm.Table 3 sh-ows the matching results of different algorithms.

Fig.6 Optical satellite image

Fig.7 Synthetic aperture radar image

Table 3 Results of different algorithms

MatchingobjectSize/(pixel×pixel)The correctmatching pointAlgorithmMatchingpointRotationangle/(°)Scale factor/timeRunningtime/sSAR images200×200(13,7)The proposed algorithm(13,7)-4.611.000.242Algorithm without KNN(13,7)-4.611.000.406Algorithm based on unimproved feature(14,6)-3.91.020.533Algorithm based on SIFT feature(13,7)-4.631.001.989The optical satellite images160×160(12,10)The proposed algorithm(12,10)-1.791.000.267Algorithm without KNN(12,10)-1.791.000.513Algorithm based on unimproved feature(12,10)-1.801.000.482Algorithm based on SIFT feature(12,10)-1.791.001.571

Table 3 imples that time consumption of the proposed algorithm is the shortest. Since the number of the features constructed by this method was reduced, compared with the method of constructing high-dimensional combined features by searching all the branch points, and a large number of redundant computations were avoided, the speed of the algorithm was improved. Although the number of features was reduced, the matching accuracy did not decline because in essence a small amount of more dispersive features which may better represent the image information were selected to participate in the matching. When the rotation angle of optical satellite image was 5°, the algorithm using unimproved features could hardly find the matching position accurately, but our algorithm could still achieve the matching. That means the robustness of the proposed algorithm is further improved, resulting from the adding of regional characteristics. Meanwhile, the descriptor dimension of the feature proposed in this paper was far less than the SIFT feature, so the proposed algorithm has a better speed performance.

3 Conclusions

Based on sequence image matching navigation, the algorithm is introduced in three aspects: Feature detection, matching rules and search strategy. Simulation experiments verified its superiority. The following conclusions can be obtained.

(1) When the high-dimensional combined feature is improved by Delaunay triangulation, the distribution of features is improved, and redundant calculation is reduced.

(2) In the process of matching, after the geometric as well as regional characteristics of the feature are taken into account, the algorithm is more robust to rotation and scale changes.

(3) KNN algorithm is introduced to optimize the search process, which can improve the speed of the algorithm further.

In conclusion, the proposed algorithm can meet navigation requirements, and exhibit good robustness, accuracy, and a promising engineering application. And based on this algorithm, we will conduct further study on key frame extraction and adaptation research.

Acknowledgements

This work was supported by the National Natural Science Foundations of China (Nos.51205193, 51475221).

杂志排行

Transactions of Nanjing University of Aeronautics and Astronautics的其它文章

- Analysis on Deflection Characteristics of Steel Cable-Stayed Bridge

- Nonlinear Control Method for Hypersonic Vehicle Based on Double Power Reaching Law of Sliding Mode

- Dynamic Analysis and Optimization of Pneumatic Wedge-Shaped Launcher for UAV

- Modal and Fatigue Life Analysis on Beam with Multiple Cracks Subjected to Axial Force

- Random Vibration of Steel Catenary Riser Conveying Fluid Under Wave Excitation

- Two Classes of Quaternary Codes from 4-valued Quadratic Forms