A Distributed Decision Mechanism for Controller Load Balancing Based on Switch Migration in SDN

2018-10-13

Tao Hu, Peng Yi*, Jianhui Zhang, Julong Lan

National Digital Switching System Engineering & Technological R&D Center, Zhengzhou 450002, China

Abstract: Software Defined Networking (SDN) provides flexible network management by decoupling the control plane from the data plane. Multiple controllers are deployed to improve the scalability and reliability of the control plane, which divides the network into several subdomains, each with its own controller. However, such a deployment introduces a new problem: controller load imbalance caused by dynamic traffic and the static configuration between switches and controllers. To address this issue, this paper proposes a Distributed Decision Mechanism (DDM) based on switch migration in the multi-subdomain SDN network. First, by collecting network information, it constructs distributed migration decision fields based on the controller load condition. Then, the migrating switches are chosen according to a selection probability, and the target controllers are determined by integrating three network costs: data collection, switch migration and controller state synchronization. Finally, a migrating countdown is set to achieve ordered switch migration. Results on several evaluation metrics show that the proposed mechanism achieves controller load balancing with better performance.

Keywords: software defined networking; controller; load balancing; switch migration; distributed decision

I. INTRODUCTION

Compared with the traditional network, Software Defined Networking (SDN) is a new network paradigm that decouples the control plane from the data plane. SDN is designed to provide flexible management and rapid innovation by dynamically customizing the behavior of a network [1]. As networks grow in size, a single controller (NOX [2], Ryu [3], Floodlight [4]) deployed on the control plane cannot meet existing traffic requirements. Therefore, researchers have proposed a series of logically centralized but physically distributed multi-controller architectures (HyperFlow [5], Onix [6], Kandoo [7]), which partition the network into subdomains, each with its own controller.

The introduction of multiple controllers improves the scalability and reliability of the control plane, but it introduces a new issue: controller load imbalance. The study in [8] shows that traffic conditions differ markedly in both time and space. Meanwhile, the connections between switches and controllers are static. As a result, dynamic traffic changes make the flow requests of switches uneven across the distributed network. This easily leads to controller hot spots, where the available control resources are not enough to meet switches' requirements, or controller cold spots, where the provided control resources are not used efficiently. Both phenomena can cause network instability and an unbalanced distribution of controller loads.

This paper proposes a Distributed Decision Mechanism (DDM) based on switch migration in the multi-subdomain SDN network.

In recent years, research on controller load balancing can be divided into two categories: controller optimization and switch migration. Studies in the first category optimize the number and locations of controllers to achieve a balanced distribution of controller loads in the network. However, this approach only optimizes the controller nodes and cannot cope with real-time changes in traffic. In the second category, researchers adopt switch migration to balance the controller loads. Although the existing methods can adjust controller loads dynamically and improve network flexibility, they lack a detailed description of the communication process during migration and do not consider the specific costs of switch migration. Besides, each migration involves all controllers, which causes large network overheads and complicates the algorithm design.

In this paper, from the perspective of elastic control, we further study controller load balancing building on prior work on switch migration, and design the Distributed Decision Mechanism (DDM) to improve the efficiency of switch migration and optimize the controller loads. DDM is implemented progressively in three stages. In stage 1, by collecting network information, we build distributed Migration Decision Fields (MDFs), each of which is a temporary and loose area. In stage 2, migrating switches and target controllers are determined according to different network parameters in each MDF. In stage 3, we implement switch migration and transform the corresponding controller roles. The main contributions are as follows.

• Building on the idea of switch migration, we make the first attempt to explore a Distributed Decision Mechanism (DDM) to balance controller loads in the multi-domain SDN network. By combining several SDN subdomains, we build the MDF as a specific area for implementing switch migration.

• By analyzing the process of switch migration, we define the relevant parameters and calculate the network costs. The migrating switch is determined by the switch selection probability. Meanwhile, considering the costs of data collection, switch migration and controller state synchronization simultaneously, we optimize the selection of the target controller with a greedy algorithm. Furthermore, we introduce a migrating countdown to ensure ordered switch migration.

• We set up a series of simulation experiments comparing DDM with typical methods, and the results demonstrate that DDM achieves better controller load balancing on the chosen evaluation metrics.

The rest of this paper is organized as follows. Section II presents the related work. Section III provides the problem analysis and the model formulation. In Section IV, we explain the implementation details of DDM. The simulations and results are elaborated in Section V. We finally present our conclusion and future work in Section VI.

II. RELATED WORK

To address the challenges of the control plane in distributed networks, researchers have proposed a series of schemes to balance controller loads, which mainly fall into two categories: controller optimization and switch migration.

Controller optimization focuses on changing the number and locations of controllers to ensure the performance of the control plane. [9] first proposed the controller placement problem, focusing on the average and maximum delay in the network; by building the corresponding deployment model, it determined the optimal deployment of controllers. [10] presented the Dynamic Controller Provisioning Problem (DCPP) to minimize flow setup time and communication overhead, adapting the number and locations of controllers to changing network conditions. [11] introduced a mathematical model for controller placement that simultaneously determines the optimal number, locations and types of controllers and the interconnections between all network elements. [12] designed the K-Critical algorithm to discover the minimum number of controllers and their locations; this work tried to create a robust control topology and balance load among the selected controllers.

The OpenFlow 1.3 protocol [13] makes switch migration possible by defining three controller roles: master, slave and equal. A switch may be simultaneously connected to multiple controllers in equal state, multiple controllers in slave state, and at most one controller in master state. Each controller may communicate its role to the switch via a role request message, and the switch must remember the role of each controller connection. Each subdomain controller acts as the master of the switches in its subdomain, while those switches can hold controllers of other subdomains as slaves. When the controller of a subdomain overloads, or the flow requests of its switches increase sharply, some switches are reassigned to a controller in another subdomain. Therefore, researchers have proposed balancing controller loads through switch migration. [14] designed ElastiCon, which uses two overload threshold values to decide when to shift controller load. [15] introduced a switch migration algorithm based on game theory that treats controllers and switches as players: by increasing or decreasing the commodity value of a switch, a controller selects the optimal element for the transaction. [16] also set up a switch migration model and proposed an associated coordination framework to achieve scalability and reliability under heavy data center loads, but this work did not consider network failure scenarios and made network routing more complex. [17] presented a load balancing mechanism based on a load informing strategy for controllers, building a distributed decision architecture with four components: load measurement, load informing, balancing decision and switch migration. However, each controller continually performed load informing, so the communication overhead of the network was higher.
[18] introduced a load variance-based synchronization (LVS) method to improve load balancing performance in multi-controller, multi-domain networks. LVS conducts state synchronization among controllers if and only if the load of a specific server or subdomain exceeds a certain threshold. [19] proposed BalCon (Balanced Controller), a heuristic solution that maintains load balancing among SDN controllers through switch migration, even under dynamic traffic load. BalCon is implemented as a realistic prototype based on Ryu, and it significantly reduces the number of migrating switches.
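The role rules quoted above from the OpenFlow 1.3 specification can be modeled as a small per-switch role table. This is a minimal sketch, not an OpenFlow implementation: the class and method names are hypothetical, message encoding and the `generation_id` check of a real role request are omitted, and it only captures the rule that a new master demotes any existing master to slave, which is what makes switch migration a single role request.

```python
from enum import Enum


class Role(Enum):
    MASTER = "master"
    SLAVE = "slave"
    EQUAL = "equal"


class SwitchRoleTable:
    """Hypothetical per-switch table of the role held by each controller connection."""

    def __init__(self):
        self.roles = {}  # controller id -> Role

    def role_request(self, controller, role):
        """Apply a role request; a new master demotes any other master to slave."""
        if role is Role.MASTER:
            for other, current in list(self.roles.items()):
                if current is Role.MASTER and other != controller:
                    self.roles[other] = Role.SLAVE
        self.roles[controller] = role

    def master(self):
        """Return the single master controller, or None if there is none."""
        for controller, role in self.roles.items():
            if role is Role.MASTER:
                return controller
        return None


# Migrating s1 from c2 to c1 is a single role request from c1.
s1 = SwitchRoleTable()
s1.role_request("c2", Role.MASTER)
s1.role_request("c1", Role.SLAVE)
s1.role_request("c1", Role.MASTER)
print(s1.master(), s1.roles["c2"].value)  # c1 slave
```

Because the demotion happens inside the switch, the old master never has to release the switch explicitly; it simply finds itself a slave.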

III. ANALYSIS AND MODELING

In this section, we present the controller load imbalance problem under multiple controllers and describe the basic process of switch migration. On this basis, we introduce the relevant definitions and parameters.

3.1 Problem analysis

This paper considers a multi-domain SDN network in which switches and controllers communicate in-band. The entire network is partitioned into several subdomains, each managed by a separate controller.

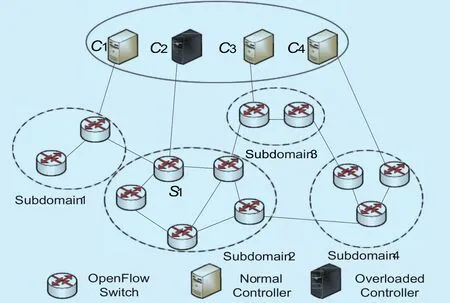

As shown in figure 1, there are four controllers in the network, and each controller connects with some switches to form a subdomain. When controller $c_2$ manages more switches, or Subdomain 2 aggregates a large volume of flow requests, $c_2$ becomes overburdened and the distribution of controller loads in the network becomes unbalanced. Thanks to the controller roles defined in the OpenFlow 1.3 protocol [13], if the master controller of a switch is overloaded or inefficient, the switch can choose a controller from its slave controller group as its new master. This process of changing the master controller of the selected switch is called switch migration. In figure 1, we select $s_1$ as the migrating switch and $c_1$ as the target controller from the slave controller group ($c_1$, $c_2$ and $c_4$). Switch migration is therefore implemented within a specific area of the multi-domain SDN network. Based on the idea of switch migration, this paper focuses on: (i) how to construct the migration field to determine the migrating switch and target controller; (ii) how to design an effective switch migration mode to improve the efficiency of load balancing.

Fig. 1. Multiple controller deployment architecture.

Fig. 2. The indication of MDF.

3.2 Model formulation

3.2.1 Notation and definition

We describe the network using graph theory. The entire network is represented by an undirected graph $G=(V,E)$, where $V$ is the set of nodes and $E$ is the set of links. There are $M$ controllers in the network, forming the controller set $C$, and $N$ switches, forming the switch set $S$, so $|V|=M+N$. We assume that the locations of all controllers have been optimized in advance [12], and each controller manages several switches in its subdomain. The hop count between devices is denoted by $d$, and $\Omega=[x_{ij}]_{N\times M}$ is the connection matrix for all network elements, where $x_{ij}$ is the connection relationship between switch $s_i$ and controller $c_j$, as shown in Eq. (1). $\lambda_i$ is the flow request rate of switch $s_i$, which mainly consists of Packet-in messages [20][21]. The number of switches controlled by $c_j$ is denoted by $\Gamma_j$, and $\omega_j$ is the processing capacity of $c_j$. $S_{c_r}$ is the set of switches connected with controller $c_r$, so $S_{c_r}$ and $c_r$ constitute subdomain $G_r$.
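Eq. (1) did not survive extraction. Assuming $x_{ij}=1$ when $c_j$ is the master controller of $s_i$ and $x_{ij}=0$ otherwise (our reading, not the paper's stated equation), the connection matrix $\Omega$, the switch sets $S_{c_j}$ and the counts $\Gamma_j$ can be derived as in this sketch; the function names and the example assignment are hypothetical.

```python
def connection_matrix(master_of, n_switches, n_controllers):
    """Omega = [x_ij] (N x M); master_of maps switch index i -> controller index j."""
    omega = [[0] * n_controllers for _ in range(n_switches)]
    for i, j in master_of.items():
        omega[i][j] = 1  # assumed reading of Eq. (1): x_ij = 1 iff c_j masters s_i
    return omega


def switch_sets(omega):
    """S_cj for every controller c_j: indices of switches with x_ij = 1."""
    n_controllers = len(omega[0])
    return [{i for i, row in enumerate(omega) if row[j] == 1}
            for j in range(n_controllers)]


def switch_counts(omega):
    """Gamma_j: number of switches controlled by c_j (column sums of Omega)."""
    return [sum(row[j] for row in omega) for j in range(len(omega[0]))]


# Hypothetical example: 6 switches, 2 controllers
omega = connection_matrix({0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 0}, 6, 2)
print(switch_sets(omega))    # [{0, 1, 2, 5}, {3, 4}]
print(switch_counts(omega))  # [4, 2]
```

Under this reading, every row of $\Omega$ sums to 1, which is the "one master per switch" condition used later as a constraint.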

Definition 1. The Migration Decision Field (MDF) $F_r$ is a temporary and loose subdomain alliance that includes the subdomain $G_r$ and its eligible neighbor subdomains. The controller $c_r$ in $G_r$ is overloaded, whereas every selected neighbor subdomain must contain an underloaded controller. The MDF provides a specific area for switch migration that involves only the overloaded controller and some neighbor controllers, without disturbing the working states of the other controllers. As shown in figure 2, the network is divided into four subdomains, and $c_2$ is an overloaded controller in $G_2$, which has three neighbor subdomains. However, only $G_1$ and $G_3$ are selected to construct the MDF with $G_2$, because $G_4$ contains the overloaded controller $c_4$. Therefore, $F_2=\{G_1,G_2,G_3\}$ is established.

An MDF has two basic characteristics: (i) the network can contain several migration decision fields at the same time; (ii) different migration decision fields do not intersect.

Therefore, the fundamental principle of DDM is to form MDFs and migrate switches in a distributed way across the network. Once an overloaded controller $c_r$ is identified, it combines suitable subdomains to build an MDF. In each MDF, we select a migrating switch from the switch set $S_{c_r}$ of the overloaded subdomain and migrate it to the target controller $c_k$. Meanwhile, different fields do not affect each other.
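Definition 1 and the two characteristics can be illustrated with the figure 2 example. In this sketch the links of $G_4$ are hypothetical (the text only states that $G_2$ has three neighbors), and the rule that lower-indexed overloaded subdomains claim neighbors first is our own assumption for keeping fields disjoint; the paper does not specify how conflicting requests are resolved.

```python
def build_mdfs(neighbors, overloaded):
    """Build disjoint MDFs per Definition 1.

    neighbors:  subdomain id -> set of neighbor subdomain ids
    overloaded: ids of subdomains whose controller is overloaded
    """
    claimed = set()  # subdomains already inside some MDF
    fields = {}
    for r in sorted(overloaded):  # assumption: lower index claims first
        partners = {n for n in neighbors[r]
                    if n not in overloaded and n not in claimed}
        if not partners:  # no eligible underloaded neighbor this round
            continue
        fields[r] = {r} | partners
        claimed |= fields[r]
    return fields


def pairwise_disjoint(fields):
    """Characteristic (ii): no subdomain appears in two MDFs."""
    seen = set()
    for field in fields.values():
        if seen & field:
            return False
        seen |= field
    return True


# Figure 2: G2 neighbors G1, G3, G4; c2 and c4 overloaded (G4's links assumed)
neighbors = {1: {2}, 2: {1, 3, 4}, 3: {2, 4}, 4: {2, 3}}
fields = build_mdfs(neighbors, overloaded={2, 4})
print(fields)                     # {2: {1, 2, 3}}
print(pairwise_disjoint(fields))  # True
```

With these assumed links, $c_4$ finds no free underloaded neighbor and forms no MDF in this round, so only $F_2=\{G_1,G_2,G_3\}$ exists.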

3.2.2 Parameter setting

To address the problems of selecting the migrating switch and the target controller in an MDF, we consider the following parameters.

(a) Switch selection probability

To reduce the load of the overloaded controller as quickly as possible, the choice of migrating switch should favor both the longest node distance and a high flow request rate. The selection probability of a switch is defined as $\rho_{ir}$ in Eq. (2),

where $\eta_{ir}$ represents the control resources that the switch occupies on controller $c_r$, and $d_{ir}$ is the hop count between the switch and the controller. The larger $\rho_{ir}$ is, the more likely the switch is to be migrated.
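Eq. (2) is not recoverable from the extracted text. Purely to illustrate "favor a long distance and a high flow request rate", the sketch below assumes $\rho_{ir}$ is proportional to $\eta_{ir} d_{ir}$, normalized over $S_{c_r}$, and then applies the stage 2 rule of picking the switch with the largest $\rho_{ir}$. The weighting, the function names and the example values are all hypothetical.

```python
def selection_probabilities(eta, hops):
    """Hypothetical rho_ir: proportional to eta_ir * d_ir, normalized over S_cr.

    eta:  switch -> control resources it occupies on c_r (eta_ir)
    hops: switch -> hop count to c_r (d_ir)
    """
    weights = {s: eta[s] * hops[s] for s in eta}
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


def pick_migrating_switch(rho):
    """Stage 2 rule: migrate the switch with the largest rho_ir."""
    return max(rho, key=rho.get)


rho = selection_probabilities(
    eta={"s1": 0.30, "s2": 0.10, "s3": 0.25},
    hops={"s1": 3, "s2": 4, "s3": 1},
)
print(pick_migrating_switch(rho))  # s1
```

Under this weighting, s3 occupies more resources than s2 but sits only one hop away, so it ranks last.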

(b) Data collection cost

Switches and controllers periodically exchange hop and traffic information. The data collection cost of controller $c_r$ is denoted by $P_{data}$ in Eq. (3),

where $v_{c_r}$ is the average bit rate for traversing one switch, which depends on the links connected to each switch.

(c) Switch migration cost

The communication process of switch migration is shown in figure 3. $G_1$, $G_2$ and $G_3$ together construct an MDF, and $c_2$ is the overloaded controller in $G_2$ in this field. $c_2$ selects a migrating switch and installs the migrating rule into it. The switch then sends the migration request to the normal controller $c_1$ via an intermediate switch. Finally, $c_1$ accepts the switch and the migration is complete. Therefore, the switch migration cost $P_{move}$ includes the migrating rule installation cost, the communication cost and the migration request cost.

When a switch is selected for migration, the controller must install flow_mod rules into this switch within its own domain. The migrating rule installation cost $P_{rule}$ is shown in Eq. (4),

where $\delta_{rule}$ is the average size of a flow_mod packet.

The migration request may traverse several switches before reaching the target controller, so this process produces the switch communication cost $P_{com}$, where $\varepsilon$ is the average communication rate of a switch, and $x_{ir}$ and $x_{jk}$ represent the connections of devices within the domains of $c_r$ and $c_k$, respectively.

Fig. 3. The communication process of switch migration.

When the migrating switch sends a flow request to the target controller, the migration request cost $P_{req}$ is generated, as shown in Eq. (6),

where $\min d_{ik}$ is the minimum hop count from the migrating switch to the target controller.

Therefore, the switch migration cost $P_{move}$ is the linear summation of the above three costs.
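Written out, the linear summation just described is the following. The original Eq. (7) was lost in extraction, so this unweighted form is our reading of the sentence rather than a copy of the paper's equation:

$$
P_{move} = P_{rule} + P_{com} + P_{req}
$$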

(d) Controller state synchronization cost

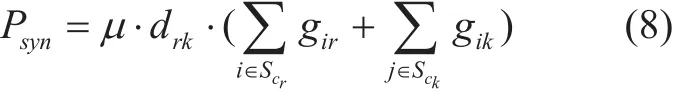

State synchronization is performed among controllers after the switches have been migrated. The state synchronization cost between $c_r$ and $c_k$ is $P_{syn}$ in Eq. (8),

where $\mu$ is the rate of data shared between controllers, which is related to $\varepsilon$. Since the controllers do not share all subdomain information, $\mu<\varepsilon$. $d_{rk}$ is the hop count between $c_r$ and $c_k$.

Fig. 4. The flow chart of DDM.

3.2.3 Objective function

By combining the costs of data collection, switch migration and controller state synchronization, we transform the selection of the target controller in an MDF into a multi-cost mixed linear programming problem. Taking the weighted sum of the three costs, we obtain the objective function $P_{object}$,

where $\tau_1$, $\tau_2$ and $\tau_3$ are the weights of the three costs, respectively. Equation (10) ensures that each switch has only one master controller. Equation (11) guarantees that the number of controllers in an MDF is less than the total number of controllers in the network. Equation (12) makes sure that no two migration decision fields intersect, so each controller belongs to at most one migration decision field.
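Since Eqs. (9) to (12) were lost in extraction, the following is one reading of the objective and constraints reconstructed from the prose, not a verbatim copy; in particular, (11) is written for the set $C_{F_r}$ of controllers contained in $F_r$:

$$
\min P_{object} = \tau_1 P_{data} + \tau_2 P_{move} + \tau_3 P_{syn} \tag{9}
$$
$$
\text{s.t.}\quad \sum_{j=1}^{M} x_{ij} = 1,\quad \forall s_i \in S \tag{10}
$$
$$
|C_{F_r}| < M \tag{11}
$$
$$
F_r \cap F_q = \emptyset,\quad \forall r \neq q \tag{12}
$$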

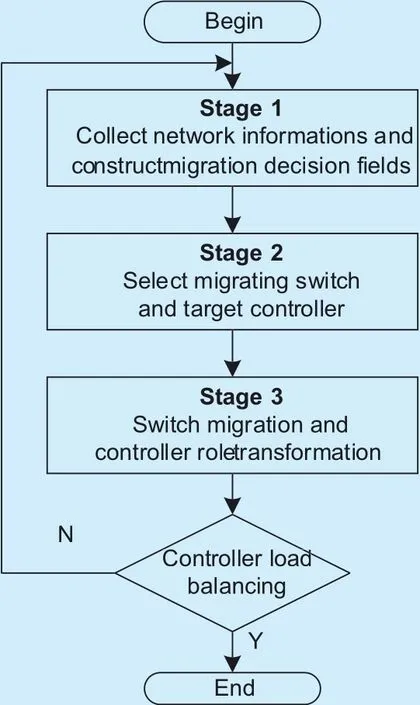

IV. IMPLEMENTATION

Based on the above analysis and modeling, we now introduce the detailed implementation of DDM. The flow chart of DDM is shown in figure 4, and DDM has three main stages. In stage 1, it gathers network information and builds the distributed migration decision fields. In stage 2, it selects the migrating switches and target controllers. In stage 3, it migrates the switches and transforms the controller roles. After the three stages, controller load balancing is evaluated: if the result satisfies the constraint on the controller resource utilization rate, the process exits DDM; otherwise, it returns to stage 1.
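The control flow of figure 4 can be sketched as a loop over the three stages with a termination test. The stage functions are hypothetical callbacks standing in for the procedures of Sections 4.1 to 4.3, and the round limit `max_rounds` is our own safeguard, not part of the paper.

```python
def run_ddm(stage1, stage2, stage3, balanced, max_rounds=100):
    """Repeat stages 1-3 until the load-balancing test passes.

    stage1() -> list of MDFs; stage2(mdfs) -> migration plans;
    stage3(plans) performs migrations; balanced() -> bool.
    Returns the number of rounds used, or None if max_rounds is hit.
    """
    for round_no in range(1, max_rounds + 1):
        mdfs = stage1()        # collect network info, build MDFs
        plans = stage2(mdfs)   # pick migrating switch + target per MDF
        stage3(plans)          # migrate switches, convert controller roles
        if balanced():         # utilization constraint satisfied: exit DDM
            return round_no
    return None


# Toy run: each migration lowers the overloaded controller's gamma by 0.05
state = {"gamma": 0.98}
rounds = run_ddm(
    stage1=lambda: ["F2"],
    stage2=lambda mdfs: [(f, "s1", "c1") for f in mdfs],
    stage3=lambda plans: state.update(gamma=state["gamma"] - 0.05 * len(plans)),
    balanced=lambda: state["gamma"] < 0.9,
)
print(rounds)  # 2
```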

4.1 Stage 1

In this stage, DDM focuses on collecting network information and constructing migration decision fields. The network information includes the connection relationship $x_{ij}$, the hop information $d_{ij}$ and the controller resource utilization rate $\gamma_r$.

The MDF is constructed by traversal search as follows. In Eq. (14), we set the overload condition of a controller by referring to [13], and the controller state is determined by $\gamma_r$. Controller $c_r$ is considered overloaded if $0.9\le\gamma_r\le 1$; it then sends a switch migration request to all neighbor controllers. Once a neighbor controller $c_n$ receives this request, it chooses one of two responses (Agree or Discard) to send to $c_r$ according to its response condition in Eq. (15). Finally, $F_r$ is constructed from $G_r$ together with the neighbor subdomains that agreed.
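A sketch of one round of this request/response exchange follows. Only the 0.9 threshold comes from the text; everything else is assumed: $\gamma_r$ is taken as the summed flow request rates $\lambda_i$ of $S_{c_r}$ divided by the capacity $\omega_r$, and Eq. (15), which was not recovered, is replaced by the hypothetical rule that a neighbor answers Agree only while its own $\gamma_n$ is below 0.9.

```python
OVERLOAD_THRESHOLD = 0.9  # from the text: c_r is overloaded if 0.9 <= gamma_r <= 1


def utilization(flow_rates, capacity):
    """Assumed gamma_r: summed lambda_i of S_cr over omega_r, capped at 1."""
    return min(sum(flow_rates) / capacity, 1.0)


def is_overloaded(gamma):
    return OVERLOAD_THRESHOLD <= gamma <= 1.0


def response(gamma_n):
    """Hypothetical stand-in for Eq. (15): Agree only if c_n has headroom."""
    return "Agree" if gamma_n < OVERLOAD_THRESHOLD else "Discard"


def request_round(r, gammas, neighbors):
    """c_r asks each neighbor; F_r = G_r plus the subdomains that answered Agree."""
    if not is_overloaded(gammas[r]):
        return None  # c_r is not overloaded: no MDF needed
    replies = {n: response(gammas[n]) for n in neighbors[r]}
    return {r} | {n for n, reply in replies.items() if reply == "Agree"}


gammas = {1: 0.50, 2: utilization([4.5, 3.0, 2.0], 10.0), 3: 0.70, 4: 0.92}
print(request_round(2, gammas, {2: [1, 3, 4]}))  # {1, 2, 3}
```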

4.2 Stage 2

Based on the MDF, in stage 2 DDM selects the migrating switch from the switch set of the overloaded controller and chooses the target controller from the MDF.

The migrating switch is selected by the switch selection probability $\rho_{ir}$: the switch with the maximum $\rho_{ir}$ is chosen as the migrating switch.

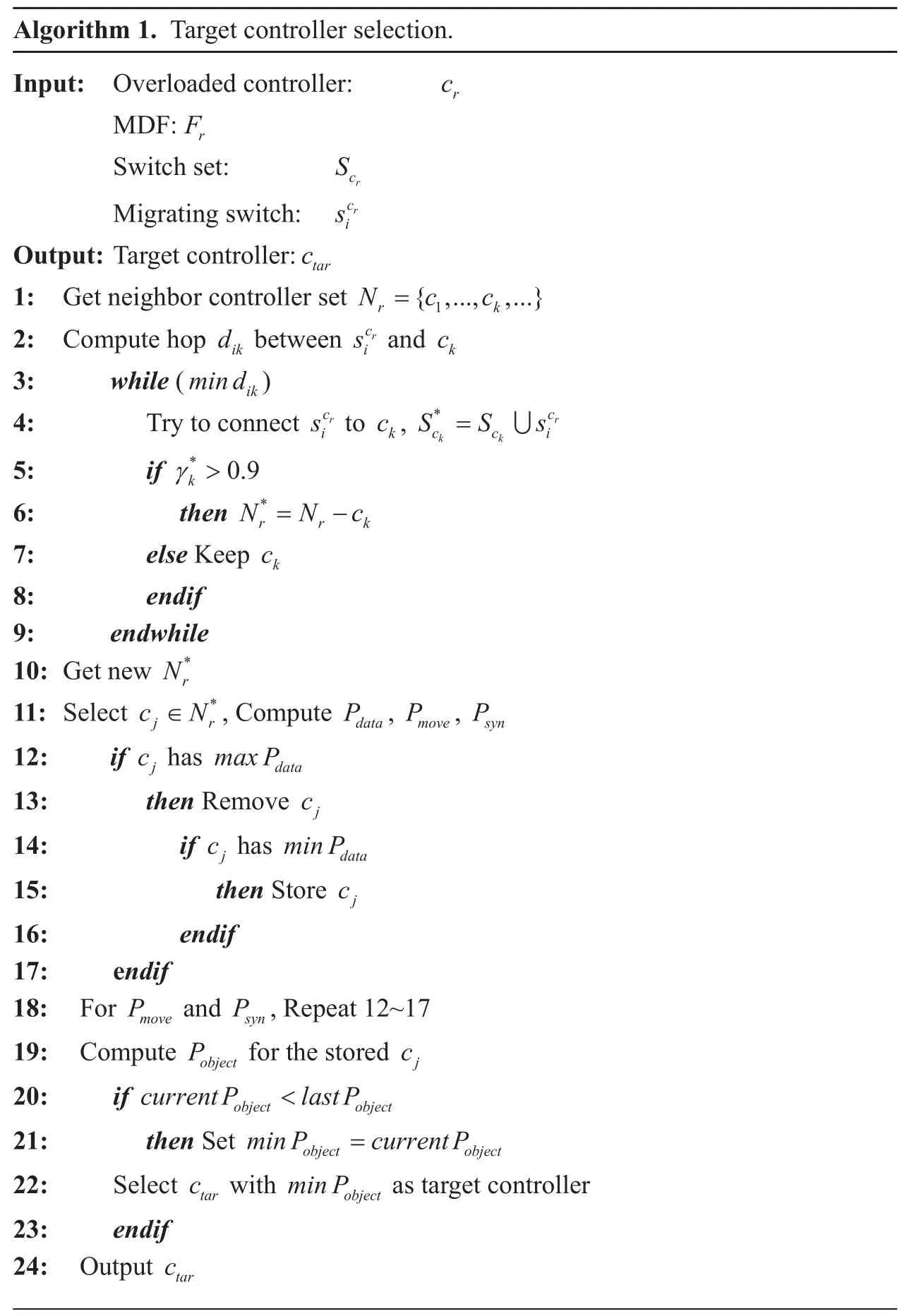

The selection of the target controller considers $P_{data}$, $P_{move}$ and $P_{syn}$ simultaneously. This problem is formulated as a multi-cost mixed linear program, and $\min P_{object}$ is solved with a greedy algorithm [22]. A greedy algorithm is a heuristic that makes the locally optimal choice according to specific measures without considering the overall situation, which makes it suitable for selecting the target controller under multiple costs in this stage.

Based on the above analysis, we design the Target Controller Selection algorithm to select the optimal target controller. Initially, all controllers in the MDF are active, and the selected migrating switch searches for the nearest controller in round robin. First, when the switch connects to a neighbor controller, the algorithm checks whether that controller's resource utilization rate $\gamma_k$ exceeds the threshold value 0.9 (Line 5). If so, the controller is removed from the neighbor controller set $N_r$ (Line 6). Then the migration costs $P_{data}$, $P_{move}$ and $P_{syn}$ are calculated (Line 11). If the calculated cost is less than the previous minimum, this controller is stored (Lines 14 to 15). In each round, the algorithm removes controllers with a unilaterally high cost and uses the minimum-cost configuration as the starting point. When the round robin is over, $P_{object}$ is calculated for the stored controllers, and the controller with $\min P_{object}$ is set as the target controller (Lines 19 to 22). Taking $F_r$ as an example, the algorithm pseudocode is shown in Table 1.
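The following is a simplified single-pass greedy sketch in the spirit of the Target Controller Selection algorithm, not a transcription of Table 1, whose pseudocode is not in the extracted text. It assumes the three costs for each candidate have already been computed, visits candidates nearest-first, drops any with $\gamma_k \ge 0.9$, and keeps the one with the smallest weighted $P_{object}$. Names and example values are hypothetical.

```python
import math

OVERLOAD_THRESHOLD = 0.9


def select_target(neighbors, gamma, hops, costs, tau=(1.0, 1.0, 1.0)):
    """Simplified greedy target selection (not the paper's Table 1).

    neighbors: controller ids in N_r        gamma: id -> utilization gamma_k
    hops: id -> hops from migrating switch  costs: id -> (P_data, P_move, P_syn)
    Returns (target id, its P_object), or (None, inf) if no candidate is left.
    """
    best, best_cost = None, math.inf
    for k in sorted(neighbors, key=lambda c: hops[c]):  # nearest controller first
        if gamma[k] >= OVERLOAD_THRESHOLD:
            continue  # drop controllers that are themselves overloaded
        p_data, p_move, p_syn = costs[k]
        p_object = tau[0] * p_data + tau[1] * p_move + tau[2] * p_syn
        if p_object < best_cost:  # strict: ties keep the nearer controller
            best, best_cost = k, p_object
    return best, best_cost


print(select_target(
    neighbors=["c1", "c3", "c4"],
    gamma={"c1": 0.60, "c3": 0.50, "c4": 0.93},
    hops={"c1": 2, "c3": 1, "c4": 1},
    costs={"c1": (2.0, 3.0, 1.0), "c3": (2.5, 3.5, 1.5), "c4": (1.0, 1.0, 1.0)},
))  # ('c1', 6.0)
```

In this example the cheapest controller, c4, is excluded because it is already overloaded, and c1 wins despite being farther away than c3.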

The complexity of the algorithm depends on the number of controllers. The algorithm is executed $|N_r|+(|N_r|-1)+\dots+(|N_r|-i)+\dots+1$ times, so its complexity is $O(m^2)$, where $m=|N_r|$. By traversing all controllers in the MDF, the controllers that do not satisfy the migration condition are removed. All data processing operations are simple (summation, multiplication and so on), so the algorithm converges quickly.
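Summing the per-round work gives the quadratic bound directly:

$$
\sum_{i=0}^{|N_r|-1}\left(|N_r|-i\right) = \frac{|N_r|\,\left(|N_r|+1\right)}{2} = O\!\left(|N_r|^2\right)
$$

For example, if $c_r$ has $|N_r|=3$ neighbor controllers in the MDF, at most $3+2+1=6$ evaluations are needed.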

4.3 Stage 3


Fig. 5. The process of switch migration.

In stage 3, DDM carries out switch migration and controller role conversion in each MDF. The migration process is shown in figure 5. The overloaded controller $c_r$ uses the chosen migrating switch to send a Move signal to the target controller $c_{tar}$. After receiving Move, $c_{tar}$ responds with a Move-Start signal and starts a countdown from a random number num generated by Eq. (16). If the switch migration completes before num reaches 0, the master controller of the switch is shifted from $c_r$ to $c_{tar}$, and $c_r$ becomes a slave controller of the switch. The MDF then dissolves by itself, and Update Messages are broadcast to the whole network. However, if the countdown expires or $c_r$ is still overloaded ($0.9\le\gamma_r\le 1$), the countdown is reset and DDM returns to stage 1.
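The stage 3 outcome logic can be sketched as below. Eq. (16) was not recovered, so the countdown value is assumed to be a uniform random integer from 1 to `max_num`; the function name, `steps_needed` (how many countdown ticks the migration takes) and `max_num` are hypothetical. Drawing independent random values is what lets migrations in different MDFs finish at staggered times rather than all at once.

```python
import random

OVERLOAD_THRESHOLD = 0.9


def run_migration(roles, c_r, c_tar, steps_needed, gamma_r_after, rng, max_num=10):
    """One stage 3 attempt for a single migrating switch.

    roles: controller id -> "master"/"slave" for the migrating switch
    steps_needed: countdown ticks the migration takes (hypothetical)
    gamma_r_after: utilization of c_r once the switch has moved
    """
    num = rng.randint(1, max_num)  # assumed stand-in for Eq. (16)
    # Move (from c_r's side) and Move-Start (from c_tar) exchanged; countdown starts.
    if steps_needed >= num:
        return "back_to_stage_1", num  # countdown expired before completion
    roles[c_tar], roles[c_r] = "master", "slave"  # role conversion
    if OVERLOAD_THRESHOLD <= gamma_r_after <= 1.0:
        return "back_to_stage_1", num  # migrated, but c_r is still overloaded
    return "done", num  # MDF dissolves; Update Messages are broadcast


roles = {"c2": "master", "c1": "slave"}
status, _ = run_migration(roles, "c2", "c1", steps_needed=0,
                          gamma_r_after=0.7, rng=random.Random(1))
print(status, roles)  # done {'c2': 'slave', 'c1': 'master'}
```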

V. EVALUATION

5.1 Simulation environment setting

According to the simulation setting in [15], we establish the experimental environment shown in figure 6.

5.1.1 Experimental platform

We select OpenDaylight [23] as the experimental controller and use Mininet [24] as the test platform. OpenDaylight is an open-source platform whose southbound interface supports various OpenFlow protocol versions. To avoid performance interference between OpenDaylight and Mininet, we install them on different physical machines. There are six machines with the same configuration: Intel Core i7 at 3.4 GHz, 8 GB RAM, a 2 Gbps network card and Ubuntu 14.04 LTS. Four machines run OpenDaylight with DDM and are connected through an H3C 8500 switch, one machine runs OpenDaylight alone to simulate a single centralized controller, and the last runs Mininet. Iperf [25] is used to generate TCP traffic between hosts.

5.1.2 Topology selecting

To make the experiments more convincing, we use two real network topologies, Abilene [26] and Internet2 OS3E [27], to verify the validity and topology adaptability of DDM. Abilene, with 11 nodes and 14 links, is taken from the Topology Zoo. Created by several universities, research institutions and companies, Internet2 OS3E is an abstraction of the actual backbone network of the United States, with 34 nodes and 42 links.

5.1.3 Parameter setting

To simulate real traffic, all flows follow the traffic characteristics used in [10], and the average flow rate is 450 KB/s. We assume that all controllers have the same performance, with an upper processing-capacity limit of 10 MB. The controller resource utilization rate $\gamma_r$ lies between 0 and 1. For Eq. (3) to Eq. (8), we set $v_{c_r}=60$ KB/s, $\delta_{rule}=30$ bytes, $\varepsilon=15$ KB/s and $\mu=3$ KB/s. In Eq. (9), since changes in $P_{data}$, $P_{move}$ and $P_{syn}$ have similar effects on controller loads, we set $\tau_1:\tau_2:\tau_3=1:1:1$. We assume the flow table space is large enough and that routing paths have been optimized in advance using existing schemes such as [28] [29]. To keep the load of each controller low, each controller connects five to twenty switches in its subdomain.
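For reference, the simulation parameters can be collected into one configuration with a check of the relations the text states, such as $\mu<\varepsilon$. The dictionary keys and the helper name are our own; values and units are copied from Sections 4.1 and 5.1.3.

```python
# Simulation parameters from Sections 4.1 and 5.1.3 (key names are our own).
SIM_PARAMS = {
    "avg_flow_rate_KBps": 450,
    "controller_capacity_MB": 10,
    "v_cr_KBps": 60,          # v_cr, Eq. (3)
    "delta_rule_bytes": 30,   # delta_rule, Eq. (4)
    "epsilon_KBps": 15,       # epsilon, switch communication rate
    "mu_KBps": 3,             # mu, controller state sharing rate
    "tau": (1, 1, 1),         # tau_1 : tau_2 : tau_3 in Eq. (9)
    "switches_per_controller": (5, 20),
    "overload_threshold": 0.9,
}


def check_params(p):
    """Return a list of violated relations stated in the text (empty if none)."""
    problems = []
    if not p["mu_KBps"] < p["epsilon_KBps"]:
        problems.append("expected mu < epsilon")
    low, high = p["switches_per_controller"]
    if not 0 < low <= high:
        problems.append("bad switches-per-controller range")
    if not 0 < p["overload_threshold"] <= 1:
        problems.append("threshold must lie in (0, 1]")
    return problems


print(check_params(SIM_PARAMS))  # []
```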

5.2 Simulation analysis

We design a series of experiments to illustrate the performance of DDM. DDM is compared with Single Controller Management (SCM), Nearest Migration Decision (NMD) and Controller Redundancy Decision (CRD). SCM uses just one controller, while NMD, CRD and DDM each deploy multiple controllers. NMD migrates each switch to the nearest controller, and CRD sets up backup controllers to balance controller loads.

The traffic distributions of Abilene and OS3E are shown in figure 7, and each simulation lasts 12 hours. Through repeated experiments, we record the network communication overhead, the flow establishment time and the controller load balancing results.

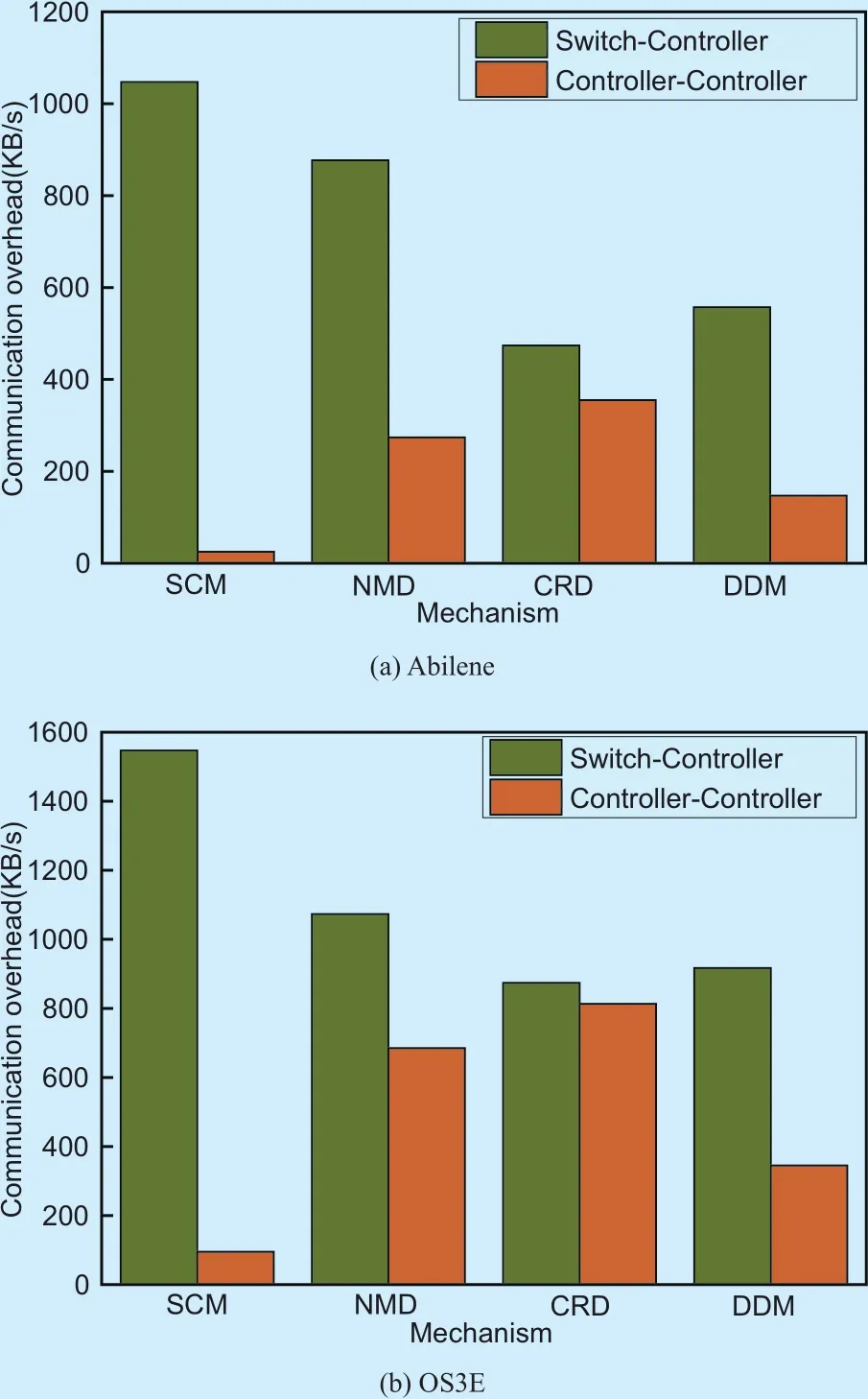

5.2.1 Communication overheads

The communication overheads obtained in the two network environments are shown in figure 8. For the communication overheads between switches and controllers, SCM is the highest, NMD comes second, and CRD and DDM are nearly identical. For the communication overheads between controllers, CRD is the highest, followed by NMD and DDM, and SCM is the lowest.

Fig. 6. Experimental environment.

Fig. 7. The network traffic.

We analyze the experimental results in figure 8(a) and figure 8(b). Since SCM deploys only one controller, the communication overhead between controllers is essentially zero. This single controller easily becomes overloaded because it must process all flow requests, so SCM has the highest communication overheads between switches and controllers. NMD migrates each switch to the closest controller to simplify target controller selection, which lowers the overheads between controllers. However, nearest migration easily causes traffic congestion, which can increase the communication overheads between switches and controllers when multiple switches swarm into the closest controller at the same time. CRD reduces controller overload by adding extra controllers, which gives it the lowest communication overheads between switches and controllers. But the added controllers must synchronize network state with other devices, so CRD has the highest communication overheads between controllers. DDM considers multiple costs and adopts a greedy algorithm to seek the optimal result, which yields communication overheads between switches and controllers similar to CRD's (the difference does not exceed 10%). The distributed migration decision fields avoid information interaction with irrelevant controllers, which clearly reduces the communication overheads between controllers: DDM's overhead between controllers is half of CRD's and also less than NMD's. In conclusion, compared with the other three mechanisms, DDM produces the least total communication overhead (switch-controller plus controller-controller) while achieving controller load balancing.

Fig. 8. Communication overhead.

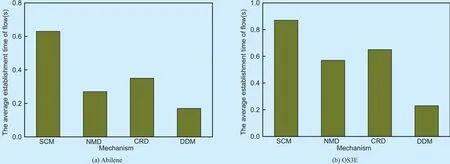

5.2.2 Flow establishment time

In this experiment, we use flow establishment time to reflect dynamic variation of the controller load: the shorter the flow establishment time, the more balanced the controller loads. As shown in figure 9, in both Abilene and OS3E we use SCM as a reference; its single controller is always overloaded, so its flow establishment time stays at the highest value in both network environments. In the other three mechanisms, the flow establishment time fluctuates with the flow requests in the network. CRD has the most dramatic fluctuation and the highest peak, NMD comes second, and DDM is the lowest. Because adding a new controller causes violent changes in the network state, the fluctuation of flow establishment time is most obvious in CRD. In NMD, when the neighbor controllers are overloaded, more time is needed to expand the migration area, so the flow establishment time also increases. By dividing the network into non-interfering MDFs, DDM migrates multiple switches and sets the countdown num for each controller to ensure well-organized migration. Therefore, flow establishment in DDM is less affected by load fluctuation, and its flow establishment time is lower than that of the other three mechanisms.

The average flow establishment time in the different network environments is shown in figure 10. Compared with SCM, NMD and CRD, DDM reduces the flow establishment time by 53.4%, 25.6% and 33.7%, respectively.
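Assuming each percentage is a relative reduction with respect to that baseline's average time $\bar T_X$, the figures translate into ratios as follows:

$$
R_X = \frac{\bar T_X - \bar T_{DDM}}{\bar T_X} \;\Rightarrow\; \bar T_{DDM} = (1-R_X)\,\bar T_X
$$
$$
\bar T_{DDM} = 0.466\,\bar T_{SCM} = 0.744\,\bar T_{NMD} = 0.663\,\bar T_{CRD}
$$

Under the same assumption, $\bar T_{NMD} = (0.663/0.744)\,\bar T_{CRD} \approx 0.89\,\bar T_{CRD}$, which is consistent with CRD showing the higher peaks in figure 9.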

5.2.3 Controller load balancing

We record the number of requests processed by each controller to reflect the distribution of controller loads: the more evenly the requests are spread, the better the controller load balancing. Since SCM has only one controller, load balancing does not apply to it, so we compare NMD, CRD and DDM in figure 11. For both topologies (Abilene and OS3E) with four controllers, NMD shows large differences in the number of requests processed by each controller, CRD comes second, and DDM shows only slight fluctuation. Several reasons explain this result. Because NMD migrates switches to the closest controller, the neighbors of the overloaded controller are likely to trigger switch migration again if they receive too many migrating switches, and frequent migration leads to large differences in the number of requests. In CRD, the backup controllers become active when traffic bursts. However, because the traffic of an activated controller has transition and convergence characteristics, it cannot take over many requests from the overloaded controller, so CRD still shows a larger difference in the number of requests processed by controllers. By setting up distributed MDFs, DDM can migrate switches in parallel to ensure migration efficiency and improve flow processing, so the numbers of requests processed by the controllers differ only slightly. From the results in figure 11, we conclude that DDM achieves better load balancing than NMD and CRD.

Fig. 9. Flow establishment time.

Fig. 10. The average establishment time of flow.

Fig. 11. The requests processed by each controller in different mechanisms.

VI. CONCLUSIONS

In this paper, we further study controller load balancing in software defined networking and propose a Distributed Decision Mechanism (DDM) based on switch migration to achieve controller load balancing dynamically. In DDM, we introduce the migration decision field, a loose and temporary area that combines several specific subdomains. The migrating objects (migrating switch and target controller) are determined according to the selection probability and a multi-cost synthesis in each MDF. Moreover, the migrating countdown ensures sequential switch migration. Numerical results demonstrate that our mechanism improves the performance of controller load balancing in distributed SDN networks.

Our future work will focus on two aspects. First, we will add failure analysis for both switches and controllers. Second, we will study facility selection in more detail by adopting other heuristic algorithms.

ACKNOWLEDGEMENTS

This work is supported in part by the Project of National Network Cyberspace Security (Grant No. 2017YFB0803204), the National High-Tech Research and Development Program of China (863 Program) (Grant No. 2015AA016102), the Foundation for Innovative Research Group of the National Natural Science Foundation of China (Grant No. 61521003), and the National Natural Science Foundation of China (Grant No. 61502530).

In particular, I sincerely thank my girlfriend Tongtong (Sha Niu) for her love and concern.

Published in China Communications.