Parameter identification of the fractional-order systems based on a modified PSO algorithm

Journal of Southeast University (English Edition), 2018-04-12

Liu Lu, Shan Liang, Jiang Chao, Dai Yuewei, Liu Chenglin, Qi Zhidong

(1 School of Automation, Nanjing University of Science and Technology, Nanjing 210094, China)
(2 Department of Electrical and Computer Engineering, Stevens Institute of Technology, Hoboken, NJ 07030, USA)
(3 Key Laboratory of Advanced Process Control for Light Industry of Ministry of Education, Jiangnan University, Wuxi 214122, China)

Fractional calculus and integer-order calculus were both proposed about 300 years ago. With the development of computer technology, fractional calculus theory has drawn increasing attention[1-2], because many systems, such as economic systems[3], biological systems[4], thermal systems[5] and electric power systems[6], can be described accurately by fractional differential equations.

Fractional-order models are widely used in many fields owing to their low order, few parameters and high modelling accuracy. At present, research on fractional-order system identification is still at an early stage, and there is no unified identification method. Since the physical meaning of fractional calculus is still not fully understood, many identification methods for fractional-order systems do not take the structural parameters of the model into account. Therefore, many researchers have focused on identifying the structural parameters of fractional-order models[7-8].

Recently, considerable attention has been paid to evolutionary algorithms, such as differential evolution (DE)[9], the genetic algorithm (GA)[10] and particle swarm optimization (PSO)[11]. Compared with other evolutionary methods, PSO in particular is fast, efficient and easy to understand. A number of identification results show that PSO is effective for fractional-order system identification[12-13].

However, the classical PSO easily falls into local optima, especially in complex, high-dimensional problems. To improve the performance of the traditional PSO, various PSO variants have been proposed. Shi and Eberhart introduced the concept of inertia weight[14], which balances the global and local search abilities. Other variants try to improve convergence performance by incorporating characteristics of other methods[15-16]. Aghababa[17] proposed a new PSO with adaptive time-varying accelerators (ACPSO). However, these improvements did not change the three components of the velocity update in the traditional PSO algorithm. Consequently, information cannot be transmitted among individuals, and some useful information is ignored. Considering the integrity of group information, He et al.[18] proposed an improved PSO with passive congregation (IPSO). However, this algorithm only considers the information of a single randomly selected individual and does not make full use of the information of the group. In this paper, a modified PSO algorithm (MPSO) is presented. This method not only enables the individuals to obtain the information of the global best individual, but also achieves information exchange among individuals.

1 Theory

1.1 Definition of fractional-order derivatives and integrals

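The fractional-order integro-differential operator is defined as

$${}_aD_t^{\alpha}=\begin{cases}\dfrac{\mathrm{d}^{\alpha}}{\mathrm{d}t^{\alpha}}, & \operatorname{Re}(\alpha)>0\\[4pt] 1, & \operatorname{Re}(\alpha)=0\\[4pt] \displaystyle\int_a^t(\mathrm{d}\tau)^{-\alpha}, & \operatorname{Re}(\alpha)<0\end{cases} \tag{1}$$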

where Re(α)is the real part ofα.

So far, there is no unified definition of fractional calculus; several definitions have appeared during its development. The three most commonly used are the Caputo, Riemann-Liouville (RL) and Grünwald-Letnikov (GL) definitions[19]. For example, the GL definition is

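$${}_aD_t^{\alpha}f(t)=\lim_{h\to 0}h^{-\alpha}\sum_{j=0}^{[(t-a)/h]}(-1)^j\binom{\alpha}{j}f(t-jh) \tag{2}$$

$$\binom{\alpha}{j}=\frac{\Gamma(\alpha+1)}{\Gamma(j+1)\,\Gamma(\alpha-j+1)} \tag{3}$$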

where Γ(·) is the Gamma function; [(t-a)/h] is the largest integer not greater than (t-a)/h; and h is the sampling period.
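To make Eqs.(2)-(3) concrete, here is a minimal Python sketch (our own illustration, not the authors' MATLAB code) that evaluates the GL sum with a fixed step h instead of taking the limit, using the standard recurrence for the signed binomial coefficients. The function name `gl_derivative` is hypothetical, and the lower terminal defaults to a = 0 as an assumption.

```python
import math


def gl_derivative(f, t, alpha, h, a=0.0):
    """Fixed-step Grünwald-Letnikov approximation of aD_t^alpha f(t).

    Eq. (2) is evaluated with a finite step h instead of the limit h -> 0,
    so the result is a first-order approximation in h.
    """
    n = int(math.floor((t - a) / h))  # [(t - a)/h] in Eq. (2)
    w = 1.0                           # w_0 = (-1)^0 * C(alpha, 0) = 1
    total = f(t)
    for j in range(1, n + 1):
        # Recurrence giving w_j = (-1)^j * C(alpha, j), with C(alpha, j) from Eq. (3)
        w *= 1.0 - (alpha + 1.0) / j
        total += w * f(t - j * h)
    return total / h ** alpha


# Half-order derivative of f(t) = t at t = 1; exact value t^(1-alpha) / Gamma(2-alpha).
approx = gl_derivative(lambda s: s, 1.0, 0.5, 1e-3)
exact = 1.0 / math.gamma(1.5)
print(approx, exact)
```

For f(t) = t the exact half-order derivative at t = 1 is 1/Γ(1.5) ≈ 1.128, and the fixed-step sum approaches it as h shrinks. With alpha = 1 the weights reduce to (1, -1, 0, ...), i.e. a backward difference; a negative alpha gives the corresponding fractional integral.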

1.2 Fractional-order systems

Conventionally, integer-order calculus is used to describe natural phenomena, but many phenomena cannot be accurately described by traditional integer-order differential equations. It is therefore necessary to extend traditional calculus. Fractional differential equations, which extend traditional calculus, can describe complex physical systems more accurately.

The differential equation of a general SISO linear fractional-order system is

$$a_nD^{\alpha_n}y(t)+a_{n-1}D^{\alpha_{n-1}}y(t)+\cdots+a_0y(t)=b_mD^{\beta_m}u(t)+b_{m-1}D^{\beta_{m-1}}u(t)+\cdots+b_0u(t) \tag{4}$$

where $a_i$ and $b_i$ are real numbers; $\alpha_i$ and $\beta_i$ are the differentiation orders; $u(t)$ and $y(t)$ are the input and output signals of the system, respectively. Under zero initial conditions, the transfer function is

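$$G(s)=\frac{Y(s)}{U(s)}=\frac{b_ms^{\beta_m}+b_{m-1}s^{\beta_{m-1}}+\cdots+b_0}{a_ns^{\alpha_n}+a_{n-1}s^{\alpha_{n-1}}+\cdots+a_0} \tag{5}$$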
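Eq.(5) can be evaluated on the imaginary axis by substituting s = jω, where (jω)^q = ω^q e^{jqπ/2} on the principal branch. The sketch below (hypothetical helper `frac_tf_response`, illustrative coefficients) shows this; it is one common way to inspect a fractional model, not a step of the identification method in this paper.

```python
import cmath
import math


def frac_tf_response(num, den, omega):
    """Evaluate G(j*omega) for a transfer function of the form of Eq. (5).

    num and den are lists of (coefficient, order) pairs, e.g. [(b_k, beta_k), ...].
    (j*omega)^q is taken on the principal branch: omega^q * exp(j*q*pi/2).
    """
    def pseudo_poly(terms):
        return sum(c * (omega ** q) * cmath.exp(1j * q * math.pi / 2) for c, q in terms)

    return pseudo_poly(num) / pseudo_poly(den)


# Hypothetical example: G(s) = 1 / (0.8 s^1.5 + 0.5 s^0.7 + 1) at omega = 2 rad/s
G = frac_tf_response([(1.0, 0.0)], [(0.8, 1.5), (0.5, 0.7), (1.0, 0.0)], omega=2.0)
print(abs(G), math.degrees(cmath.phase(G)))
```

With integer orders the helper reduces to the usual rational transfer function; for example, 1/(s + 1) at ω = 1 gives magnitude 1/√2 and phase -45°.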

At present, the general fractional-order system is difficult to identify, mainly because its orders are difficult to determine. In Ref.[20], the concept of continuous order-distributions was proposed, and the general fractional-order system was transformed into a form with a common factor order (commensurate order):

$$a_nD^{n\alpha}y(t)+a_{n-1}D^{(n-1)\alpha}y(t)+\cdots+a_0y(t)=b_mD^{m\beta}u(t)+b_{m-1}D^{(m-1)\beta}u(t)+\cdots+b_0u(t) \tag{6}$$

The corresponding transfer function is

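$$G(s)=\frac{b_ms^{m\beta}+b_{m-1}s^{(m-1)\beta}+\cdots+b_0}{a_ns^{n\alpha}+a_{n-1}s^{(n-1)\alpha}+\cdots+a_0} \tag{7}$$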

1.3 PSO variants

1.3.1 ACPSO algorithm

PSO is an evolutionary algorithm that seeks the optimal solution by sharing information among individuals. During optimization, each particle updates its velocity and position as follows[15]:

$$v_i(t+1)=wv_i(t)+c_1r_1\bigl(P_i(t)-x_i(t)\bigr)+c_2r_2\bigl(G(t)-x_i(t)\bigr) \tag{8}$$

$$x_i(t+1)=x_i(t)+v_i(t+1) \tag{9}$$

where $w$ is the inertia weight; $v_i(t)$ is the velocity of particle $i$ at iteration $t$; $c_1$ and $c_2$ are positive learning factors (acceleration constants); $r_1$ and $r_2$ are random numbers between 0 and 1; $P_i(t)$ is the best position found by particle $i$ up to iteration $t$; $x_i(t)$ is the current position of particle $i$ at iteration $t$; and $G(t)$ is the best position found by the whole population.

In Ref.[14], a linearly decreasing inertia weight strategy was introduced into the conventional PSO. The modified inertia weight $w$ is

$$w(t)=w_{\max}-\frac{(w_{\max}-w_{\min})\,t}{T} \tag{10}$$

The corresponding velocity updating formula is

$$v_i(t+1)=w(t)v_i(t)+c_1r_1\bigl(P_i(t)-x_i(t)\bigr)+c_2r_2\bigl(G(t)-x_i(t)\bigr) \tag{11}$$

where $w_{\max}$ and $w_{\min}$ are the maximum and minimum values of the inertia weight $w$, and $T$ is the maximum number of iterations. Usually, $w$ decreases from 0.9 to 0.4[21].
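Eqs.(9)-(11) amount to the update below for a single particle. This is a minimal sketch under stated assumptions: positions are plain Python lists, $r_1$ and $r_2$ are redrawn for every dimension, and the defaults $c_1=c_2=2$ and $w$ decreasing from 0.9 to 0.4 are illustrative values rather than settings prescribed by this section. The names `inertia_weight` and `pso_step` are hypothetical.

```python
import random


def inertia_weight(t, T, w_max=0.9, w_min=0.4):
    """Linearly decreasing inertia weight, Eq. (10)."""
    return w_max - (w_max - w_min) * t / T


def pso_step(x, v, p_best, g_best, t, T, c1=2.0, c2=2.0, rng=random):
    """One velocity/position update of a single particle, Eqs. (11) and (9).

    r1 and r2 are drawn independently for each dimension (a common choice;
    drawing one scalar per particle is another reading of Eq. (11)).
    """
    w = inertia_weight(t, T)
    new_v = [w * vd + c1 * rng.random() * (pd - xd) + c2 * rng.random() * (gd - xd)
             for xd, vd, pd, gd in zip(x, v, p_best, g_best)]
    new_x = [xd + vd for xd, vd in zip(x, new_v)]
    return new_x, new_v


# Illustrative call with a seeded generator for reproducibility.
x1, v1 = pso_step([0.5, -1.0], [0.0, 0.0], [0.2, -0.8], [0.0, 0.0], t=10, T=1000,
                  rng=random.Random(42))
```

When a particle sits exactly on both $P_i(t)$ and $G(t)$, only the inertia term survives, which is why a large early $w$ keeps the swarm exploring.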

To improve the convergence rate of PSO, Ref.[17] proposed ACPSO, which is expressed as

(12)

where $f(P_i(t))$ is the best fitness value found by the $i$-th particle up to iteration $t$, and $f(G(t))$ is the best objective function value found by the swarm up to iteration $t$. With this method, the learning factors, and hence the velocity, are updated adaptively, which accelerates the convergence of the algorithm.

1.3.2 IPSO algorithm

The classical PSO algorithm uses only the individual best and the population best, and does not take into account the information of other individuals in the population. With this in mind, Ref.[18] proposed an improved PSO with passive congregation, whose velocity update is

$$v_i(t+1)=w(t)v_i(t)+c_1r_1\bigl(P_i(t)-x_i(t)\bigr)+c_2r_2\bigl(G(t)-x_i(t)\bigr)+c_3r_3\bigl(P(t)-x_i(t)\bigr) \tag{13}$$

where $P(t)$ is the current position of a randomly selected particle at iteration $t$, $c_3$ is the passive congregation coefficient, and $r_3$ is a random number between 0 and 1. The passive congregation term further increases the information exchange within the group, so that the population can find the optimal value more quickly.
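The passive congregation term in Eq.(13) only needs one extra, randomly chosen particle. The sketch below is our reading of Eq.(13) with hypothetical function and argument names; whether particle $i$ may pick itself as $P(t)$ is not specified in the text, so allowing it here is an assumption.

```python
import random


def ipso_velocity(i, swarm, velocities, p_best, g_best, w, c1, c2, c3, rng=random):
    """Velocity of particle i under Eq. (13), PSO with passive congregation.

    swarm[k] is the current position of particle k, p_best[k] its best position,
    and g_best the best position of the population.
    """
    x, v = swarm[i], velocities[i]
    p_rand = swarm[rng.randrange(len(swarm))]  # passive congregation partner P(t)
    return [w * vd
            + c1 * rng.random() * (pd - xd)    # cognitive term
            + c2 * rng.random() * (gd - xd)    # social term
            + c3 * rng.random() * (rd - xd)    # passive congregation term
            for xd, vd, pd, gd, rd in zip(x, v, p_best[i], g_best, p_rand)]
```

Compared with Eq.(11), the only change is the fourth term, which pulls the particle toward a single other individual rather than toward an aggregate of the whole swarm.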

2 Proposed MPSO Algorithm

To increase the information exchange among individuals and make full use of the information in the population, thereby improving the search speed and avoiding local optima, this paper introduces the average value of the particle positions. Furthermore, to avoid extreme values in the position information, a modified Tent mapping is proposed.

2.1 Modified Tent mapping

Tent mapping is a piecewise linear, one-dimensional chaotic map with a simple form, uniform power spectral density and good correlation properties. Compared with other chaotic sequences, such as the logistic sequence, the Tent mapping has better uniformity and is more suitable for digital computation. The recursive formula of Tent mapping is

(14)

The Tent sequence contains small cycles and unstable periodic points, e.g. (0.2, 0.4, 0.8, 0.6) and (0.25, 0.5, 0.75). To prevent the iterations from falling into these small cycles, we design the following modified sequence generation method.

1) Choose an initial point $x_0$ that does not lie on a small cycle, and set $z(1)=x_0$, $i=j=1$.

2) Generate the next element of sequence $x$ according to Eq.(14), and set $i=i+1$.

3) Let $M_1$ be the maximum number of iterations. If $i>M_1$, go to Step 5); else if $x(i)\in\{0, 0.25, 0.5, 0.75\}$ or $x(i)=x(i-k)$ for some $k\in\{1,2,3,4\}$, go to Step 4); otherwise, return to Step 2).

4) Change the initial value of the iteration: $x(i)=z(j+1)=z(j)+\varepsilon$, where $\varepsilon$ is a small positive perturbation; set $j=j+1$ and return to Step 2).

5) Terminate the operation and save sequence $x$.

The initial value and the iteration number are set to be 0.435 8 and 1 000, respectively.Fig.1 shows the generated sequencex.

Fig.1 The generated sequence diagram
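Steps 1)-5) can be sketched as follows. Because Eq.(14) is not reproduced in this text, the code assumes the Bernoulli-shift form $x(i+1)=(2x(i)) \bmod 1$, which does reproduce the small cycle (0.2, 0.4, 0.8, 0.6) quoted above; the perturbation size `eps = 1e-4` is likewise an assumption. The defaults $x_0=0.4358$ and $M_1=1000$ follow the settings given in the text; the function name is hypothetical.

```python
def modified_tent_sequence(x0=0.4358, m1=1000, eps=1e-4):
    """Chaotic sequence from the modified Tent-map procedure (Steps 1-5).

    Assumption: Eq. (14) is taken in the Bernoulli-shift form
    x(i+1) = (2 * x(i)) mod 1. eps is an assumed perturbation size.
    """
    bad_points = {0.0, 0.25, 0.5, 0.75}
    z = x0                                   # Step 1: z(1) = x0
    x = [x0]
    while len(x) < m1:                       # Step 3: stop once M1 values exist
        nxt = (2.0 * x[-1]) % 1.0            # Step 2: one map iteration
        # Step 3: reject unstable points and repeats of the last four values
        if nxt in bad_points or nxt in x[-4:]:
            z += eps                         # Step 4: z(j+1) = z(j) + eps
            nxt = z
        x.append(nxt)
    return x                                 # Step 5: the saved sequence


seq = modified_tent_sequence()
print(min(seq), max(seq))
```

In binary floating point each doubling shifts one bit out of the mantissa, so an unperturbed trajectory reaches dyadic points such as 0.25, 0.5 or 0.75 (and then 0) within a few dozen iterations; the check in Step 3) catches this and restarts from $z(j)+\varepsilon$.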

2.2 MPSO algorithm

Let the number of particles be $N$. The Tent chaotic search proceeds as follows.

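1) Compute the average position $M$ of the swarm, defined as

$$M_i=\frac{1}{N}\sum_{k=1}^{N}x_{k,i},\quad i=1,2,\dots,D$$

where $x_{k,i}$ is the $i$-th component of the position of particle $k$.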

2) Map the optimization variables from the interval $[a,b]$ to chaotic variables $z$ in the interval $[0,1]$:

$$z_i=\frac{M_i-a}{b-a},\quad i=1,2,\dots,D$$

where $D$ is the dimension of the search space.

4) The chaotic sequence is translated back to the original variable range as follows:

Then, $N$ feasible solutions can be obtained by

5) The average value is calculated as

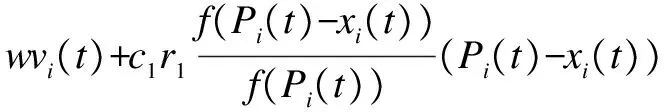

The velocity update of the MPSO algorithm is

$$v_i(t+1)=w(t)v_i(t)+c_1r_1\bigl(P_i(t)-x_i(t)\bigr)+c_2r_2\bigl(G(t)-x_i(t)\bigr)+c_3r_3\bigl(P_{\mathrm{av}}(t)-x_i(t)\bigr) \tag{15}$$

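where $P_{\mathrm{av}}(t)$ is the average value obtained in Step 5), $c_3$ is the corresponding learning factor, and $r_3$ is a random number between 0 and 1. The inertia weight $w$ in Eq.(15) is updated according to Eq.(10), and the position is updated according to Eq.(9).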
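The sketch below puts Eqs.(9), (10) and (15) into a minimal optimization loop. It is not the authors' implementation: Steps 3)-5) of the Tent chaotic search are not fully specified in this text, so $P_{\mathrm{av}}(t)$ is approximated by the plain mean position of Step 1); positions are clipped to `[lo, hi]`; and the defaults ($c_1=c_2=0.5$, $c_3=0.3$, $w$ decreasing from 0.9 to 0.7) are illustrative values loosely based on the settings in Section 2.3. The function name `mpso_minimize` is hypothetical.

```python
import random


def mpso_minimize(f, dim, lo, hi, n=50, iters=200, c1=0.5, c2=0.5, c3=0.3,
                  w_max=0.9, w_min=0.7, seed=0):
    """Minimal MPSO-style loop built around Eq. (15).

    Assumption: P_av(t) is approximated by the mean swarm position;
    the Tent chaotic refinement of the average is omitted.
    """
    rng = random.Random(seed)
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    vs = [[0.0] * dim for _ in range(n)]
    p_best = [x[:] for x in xs]
    p_val = [f(x) for x in xs]
    g_idx = min(range(n), key=p_val.__getitem__)
    g_best, g_val = p_best[g_idx][:], p_val[g_idx]
    for t in range(iters):
        w = w_max - (w_max - w_min) * t / iters                   # Eq. (10)
        p_av = [sum(x[d] for x in xs) / n for d in range(dim)]   # stand-in for P_av(t)
        for i in range(n):
            x, v = xs[i], vs[i]
            for d in range(dim):
                v[d] = (w * v[d]
                        + c1 * rng.random() * (p_best[i][d] - x[d])
                        + c2 * rng.random() * (g_best[d] - x[d])
                        + c3 * rng.random() * (p_av[d] - x[d]))   # Eq. (15)
                x[d] = min(max(x[d] + v[d], lo), hi)              # Eq. (9), clipped
            val = f(x)
            if val < p_val[i]:
                p_best[i], p_val[i] = x[:], val
                if val < g_val:
                    g_best, g_val = x[:], val
    return g_best, g_val


best, value = mpso_minimize(lambda x: sum(xi * xi for xi in x), dim=5, lo=-10.0, hi=10.0)
print(value)
```

Because the average-position term pulls every particle toward the swarm centre, $c_3$ trades exploration for cohesion, which is consistent with the sensitivity to $c_3$ reported in Section 2.3.2.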

2.3 Performance evaluation

2.3.1 Classical test functions

Eight classical test functions are used to compare the performance of MPSO with that of the other algorithms:

Ackley function

Schwefel function

Rastrigin function

Griewank function

Rosenbrock function

Sphere function

Sum squares function

Dixon-Price function

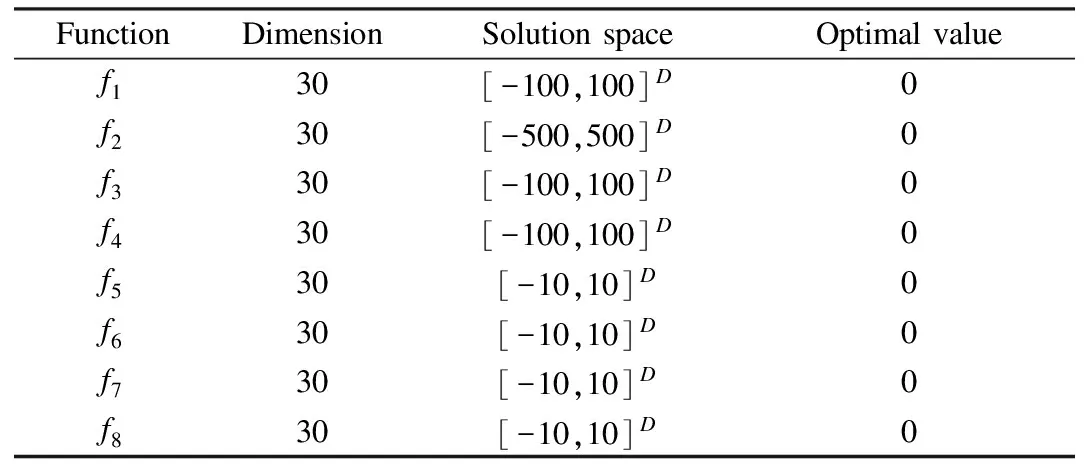

All of $f_1$ to $f_8$ share a common global minimum value. Among them, $f_1$ to $f_4$ are multimodal test functions, whereas $f_5$ to $f_8$ are unimodal. Unlike the unimodal functions, the multimodal functions have a number of local optima that increases with the dimension. The basic information of the eight test functions is listed in Tab.1.

Tab.1 Basic information of the test functions
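For reference, the eight benchmarks can be coded with their widely used textbook definitions, all of which have a global minimum value of 0 (the Schwefel variant below is shifted by 418.9829 D, so its minimum is approximately 0 at $x_i\approx 420.9687$). The exact variants, dimensions and search ranges of Tab.1 are not reproduced in this text, so these forms, and the mapping of names to $f_1$ to $f_8$ in the order listed above, are assumptions.

```python
import math


def ackley(x):
    n = len(x)
    s1 = sum(xi * xi for xi in x) / n
    s2 = sum(math.cos(2 * math.pi * xi) for xi in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e


def schwefel(x):  # shifted variant, minimum ~0 at x_i = 420.9687
    return 418.9829 * len(x) - sum(xi * math.sin(math.sqrt(abs(xi))) for xi in x)


def rastrigin(x):
    return sum(xi * xi - 10.0 * math.cos(2 * math.pi * xi) + 10.0 for xi in x)


def griewank(x):
    s = sum(xi * xi for xi in x) / 4000.0
    p = math.prod(math.cos(xi / math.sqrt(i)) for i, xi in enumerate(x, start=1))
    return s - p + 1.0


def rosenbrock(x):
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def sphere(x):
    return sum(xi * xi for xi in x)


def sum_squares(x):
    return sum(i * xi * xi for i, xi in enumerate(x, start=1))


def dixon_price(x):
    return (x[0] - 1.0) ** 2 + sum(i * (2 * x[i - 1] ** 2 - x[i - 2]) ** 2
                                   for i in range(2, len(x) + 1))


# Assumed correspondence with f1-f8 (multimodal f1-f4, unimodal f5-f8).
BENCHMARKS = {"f1": ackley, "f2": schwefel, "f3": rastrigin, "f4": griewank,
              "f5": rosenbrock, "f6": sphere, "f7": sum_squares, "f8": dixon_price}
```

The minimizers differ (the origin for most, all ones for Rosenbrock, $x_i=2^{-(2^i-2)/2^i}$ for Dixon-Price), which is worth keeping in mind when judging convergence precision.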

2.3.2 Parameter analysis

In MPSO, the choice of $c_3$ affects the performance of the algorithm. To analyze this effect, the four multimodal functions $f_1$ to $f_4$ and the two unimodal functions $f_5$ and $f_6$ are used to test the algorithm with different values of $c_3$. The population size $N$ is set to 50; the inertia weight $w$ follows the linearly decreasing strategy in Ref.[14] with a range of 0.7-0.9; and the learning factors $c_1$ and $c_2$ are set to 0.5-10. The test results for $c_3$ are listed in Tab.2.

Tab.2 Average fitness values of functions f1-f6 with different c3

Tab.2 shows that MPSO obtains good results on $f_1$ when $c_3=0.3$, and the best result on $f_2$ when $c_3=0.4$. MPSO performs well on $f_6$ when $c_3=0.1$, and the best result on $f_5$ is obtained when $c_3=0.7$. With $c_3=0.2$, MPSO obtains the best results on $f_3$ and $f_4$. The results on $f_4$ and $f_6$ deteriorate when $c_3\geq 0.8$. Based on these observations, the value range of $c_3$ is set to 0.1-0.7.

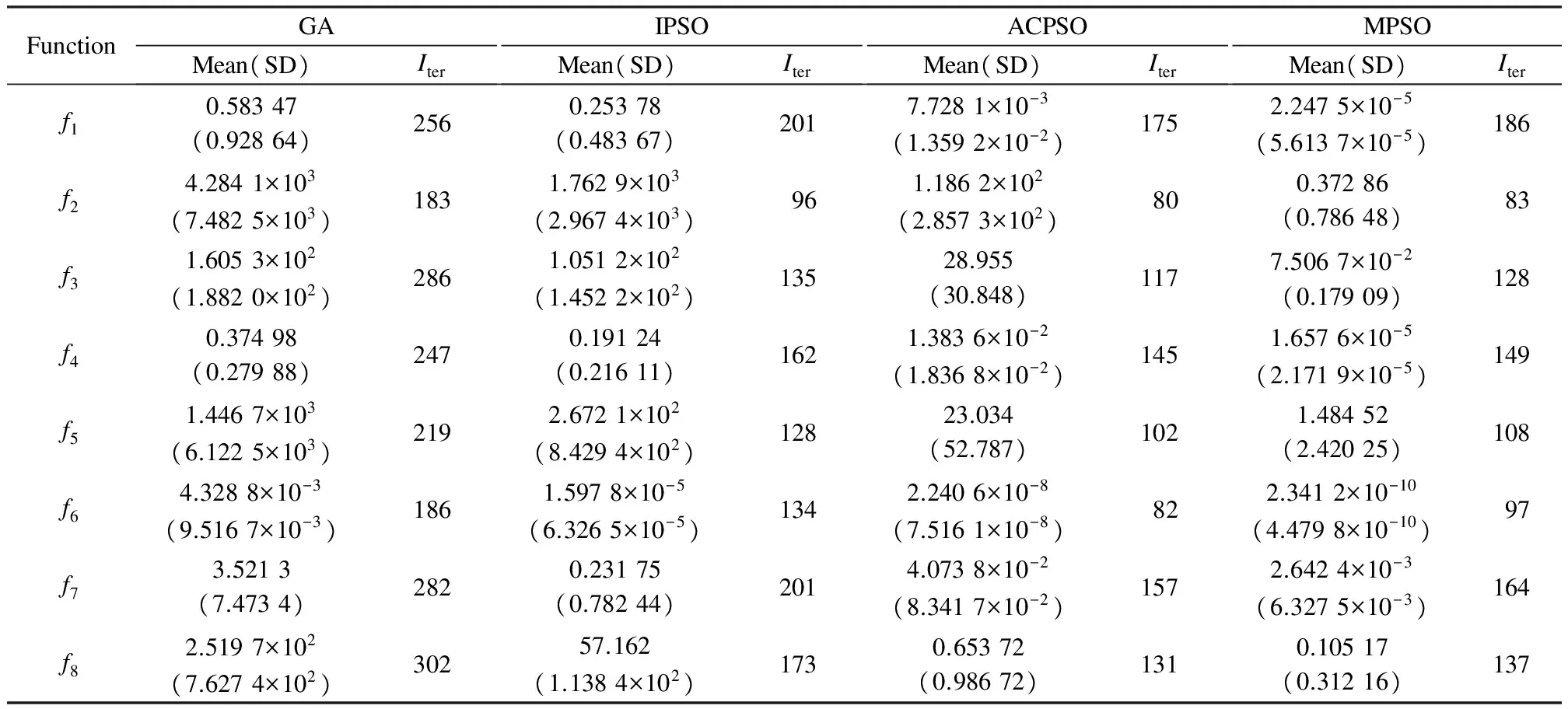

2.3.3 Evaluation results

The population size of all the algorithms is set to 50. For GA, the crossover and mutation probabilities are $P_c=0.7$ and $P_m=0.15$, respectively. For IPSO, the learning factors $c_1$ and $c_2$ are set to 0.5, and the inertia weight $w$ varies within [0.7, 0.9]. For ACPSO, $c_1$ and $c_2$ are set to 2, and the inertia weight $w$ is the same as that in Ref.[18]. MPSO is tested on the classical test functions $f_1$ to $f_8$ and compared with GA, IPSO and ACPSO. The maximum number of iterations is 1 000. To make the results more reliable, 100 independent experiments were carried out. The mean, the standard deviation (SD) and the average number of iterations (Iter) are listed in Tab.3.

Tab.3 Performance comparisons of MPSO, ACPSO, IPSO and GA algorithms

Tab.3 shows that, compared with GA and IPSO, MPSO performs clearly better in terms of both the mean and the standard deviation. Compared with ACPSO, MPSO converges more slowly overall but achieves better convergence precision. One reason is that ACPSO uses an adaptive learning factor, which speeds up convergence. MPSO, on the other hand, increases the information exchange among individuals in the population and therefore searches the solution space more thoroughly, achieving better convergence precision.

In conclusion, the introduction of the average value of position takes full advantage of the information in the population, and the application of Tent mapping avoids the emergence of extreme values, which improves the convergence precision and the searching efficiency of the MPSO algorithm.

3 Simulations

The simulations are implemented in MATLAB 7.11 on an Intel(R) Core(TM) i5-2320 CPU at 3.00 GHz with 4 GB RAM. The identification steps are as follows.

1) Determine the vector to be searched (model parameters and orders).

2) Determine the performance index (evaluation function). In this paper, a step signal is used as the input, and the performance index is the sum of the squared errors between the model output $y(t)$ and the true output $y_0(t)$, i.e.,

$$J=\sum_{t}\bigl[y(t)-y_0(t)\bigr]^2$$

3) Initialize the parameters of the algorithm and generate random search vectors.

4) Identify the parameters of the fractional-order system according to the steps of the MPSO algorithm.

5) Repeat the iteration until the performance index is satisfactory, and then output the identification results.
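Steps 2) and 4) require simulating a candidate model's step response and computing $J$. A minimal sketch is shown below, assuming a hypothetical all-pole model $G(s)=1/\sum_k a_k s^{\alpha_k}$ (Eq.(16) is not reproduced here) and an implicit Grünwald-Letnikov discretization with step $h$ under zero initial conditions; in an identification loop, the hypothetical `cost_j` would serve as the fitness function minimized by MPSO.

```python
def gl_weights(alpha, n):
    """Signed binomial coefficients w_j = (-1)^j C(alpha, j), j = 0..n (Eqs. (2)-(3))."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / j))
    return w


def step_response(den, h, n_steps):
    """Unit-step response of the hypothetical model G(s) = 1 / sum_k a_k s^alpha_k.

    den is a list of (a_k, alpha_k) pairs; zero initial conditions, y(0) = 0.
    Each D^alpha_k y(t_n) is replaced by its GL sum with step h, and the
    resulting implicit equation is solved for y_n at every step.
    """
    terms = [(a * h ** -alpha, gl_weights(alpha, n_steps)) for a, alpha in den]
    lead = sum(coef for coef, _ in terms)   # coefficient of y_n, since w_0 = 1
    y = [0.0] * (n_steps + 1)
    for n in range(1, n_steps + 1):
        history = sum(coef * sum(w[j] * y[n - j] for j in range(1, n + 1))
                      for coef, w in terms)
        y[n] = (1.0 - history) / lead       # u(t_n) = 1 for t_n > 0
    return y


def cost_j(den_model, y_true, h):
    """Performance index J: sum of squared errors between model and true outputs."""
    y_model = step_response(den_model, h, len(y_true) - 1)
    return sum((ym - yt) ** 2 for ym, yt in zip(y_model, y_true))


# Hypothetical "true" system and a candidate with a slightly wrong order.
h = 0.05
y0 = step_response([(0.8, 1.2), (0.5, 0.5), (1.0, 0.0)], h, 200)
print(cost_j([(0.8, 1.1), (0.5, 0.5), (1.0, 0.0)], y0, h))
```

For integer orders the scheme reduces to familiar methods; for example, $1/(s+1)$ gives the backward Euler recursion $y_n=(h+y_{n-1})/(1+h)$. The cost of each simulation grows quadratically with the number of samples because of the GL memory term, which is one reason fitness evaluations dominate the run time.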

The schematic diagram of parameter estimation for the fractional-order system is shown in Fig.2.

Fig.2 Schematic diagram of parameter estimation for the fractional-order system

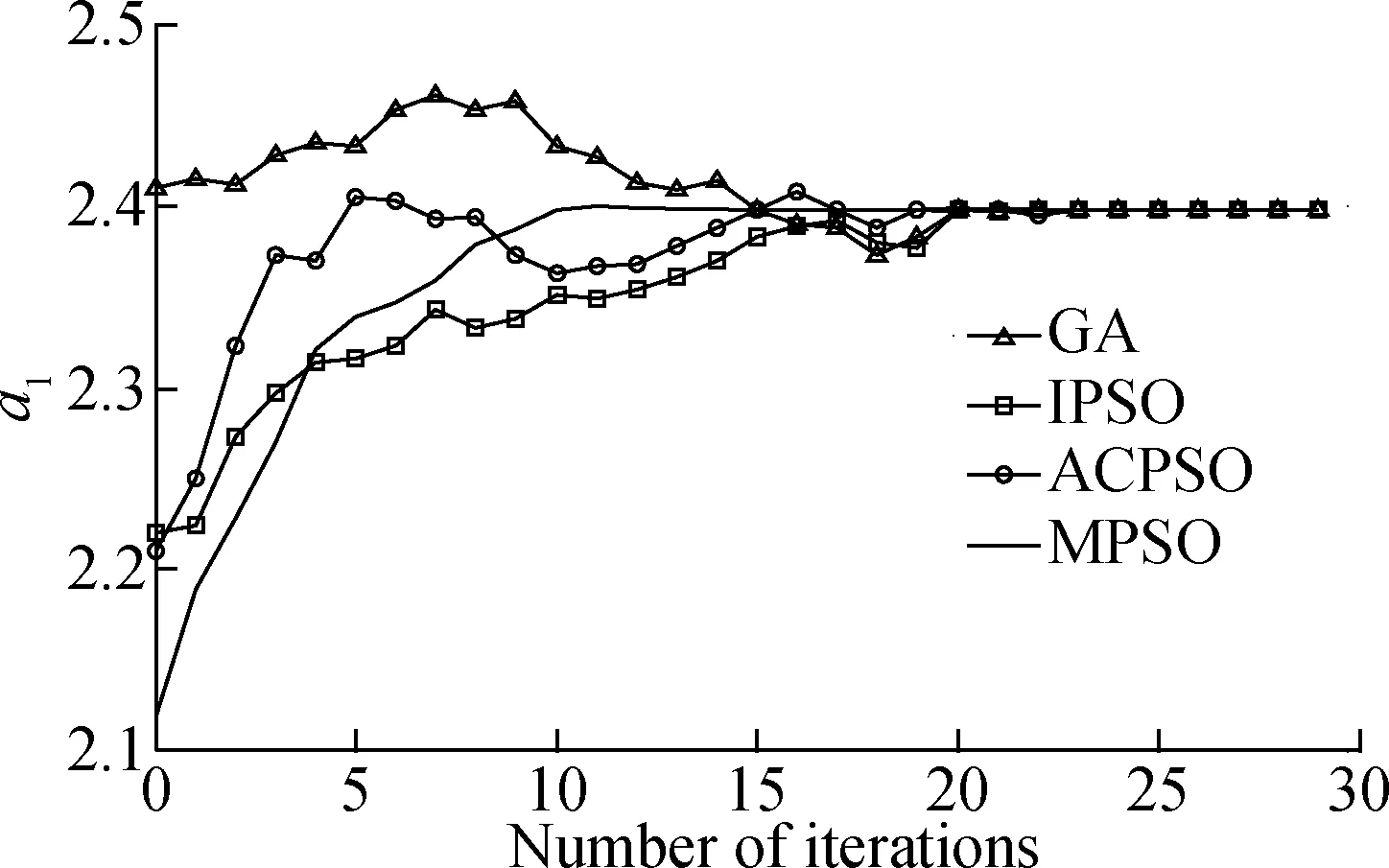

3.1 Identification of known fractional-order model structure

Assume that the transfer function of the identified object is

(16)

The range of the parameters is set to [0, 3], and the random perturbation of the model orders varies from -0.05 to 0.05. The identification vector is defined as

$$\boldsymbol{x}=[a_1,\ a_2,\ a_3,\ b_1,\ b_2]$$

where $a_1$, $a_2$, $a_3$ are the model parameters and $b_1$, $b_2$ are the model orders. The best, mean and worst estimated parameters over 20 independent runs are listed in Tab.4.

Tab.4 shows that the values estimated by MPSO are closer to the true values, indicating that MPSO is more accurate than GA, IPSO and ACPSO. Furthermore, the best objective function values obtained by MPSO are better than those obtained by the other algorithms.

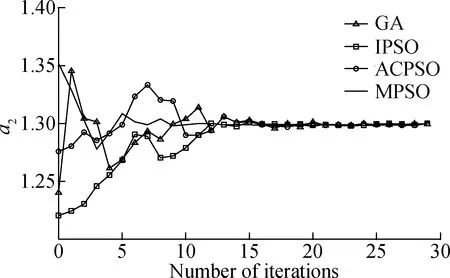

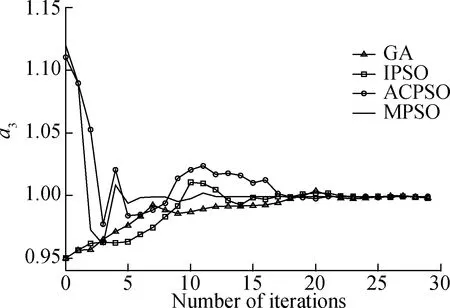

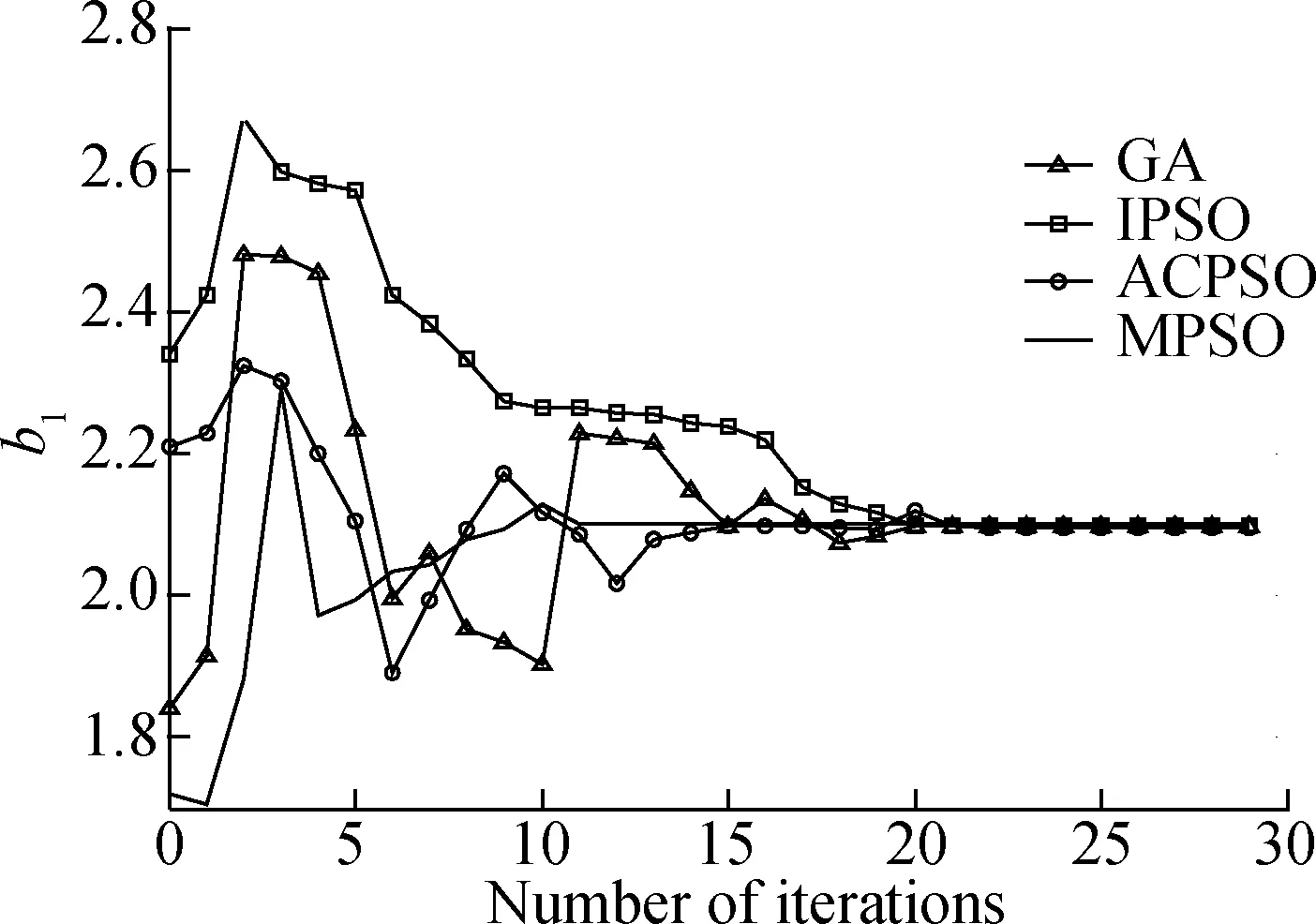

Fig.3 shows the parameters estimated by the various algorithms in a single run. MPSO outperforms IPSO because it makes full use of the information among individuals. In the early stage of evolution, ACPSO converges slightly faster owing to its adaptive learning factor. However, the parameters estimated by MPSO are more precise than those of the other algorithms, which suggests that MPSO is better able to resolve small changes in the parameters.

Fig.3 Estimated parameters generated by various algorithms in a single run, panels (a) to (e)

Moreover, MPSO outperforms GA in both convergence characteristics and time complexity. In addition, at a time complexity comparable to that of IPSO and ACPSO, MPSO achieves a better convergence rate and accuracy.

To study the influence of the variation range of the order on identification, the range of the order perturbation is set to [-0.5, 0.5] with all other conditions unchanged. The statistical results are listed in Tab.5.

Tab.5 shows that the identification results improve significantly as the range of the order perturbation decreases. The main reason is that the objective function changes exponentially with the order, so a minor change in the order causes a significant change in the function value. Therefore, appropriately narrowing the range of the order helps shorten the convergence time of the algorithm and improves its search ability.

3.2 Identification for unknown fractional-order model structure

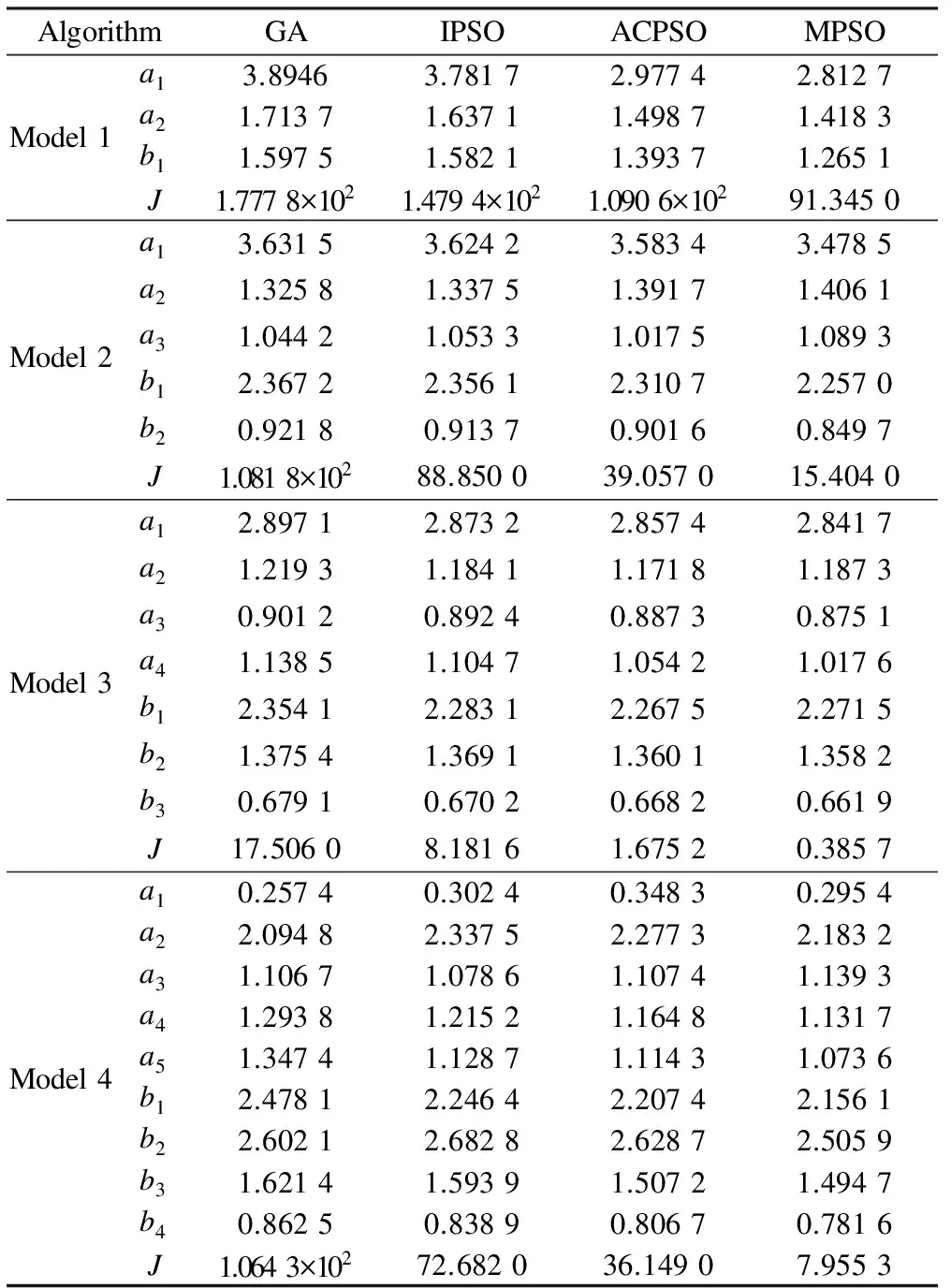

In practical identification, the model structure is often unknown. When the assumed model structure matches the real system, the error is smaller than that of other models. In practice, high-order systems are relatively rare, and a higher-order fractional-order system can be reduced to one of the models in Tab.6. Therefore, the three models in Tab.6 are selected for the simulation study. Assume that the transfer function of the identified object is

(17)

The statistical results are listed in Tab.7, which shows that MPSO outperforms the other algorithms. In addition, the performance index $J$ of model 3 is significantly smaller than those of the other models, so model 3 is closest to the target. Taking model 3 as the dividing point, $J$ first decreases and then increases. Furthermore, the difficulty of parameter identification increases greatly with the number of parameters in the system.

4 Conclusions

1) A new parameter identification scheme based on a modified PSO algorithm is proposed. Introducing the average value of the position information strengthens the information exchange among individuals in the population, which improves the precision and efficiency of optimization. By exploiting the uniformity and ergodicity of Tent mapping, MPSO avoids extreme values in the position information and thus avoids falling into local optima.

Tab.7 The estimation results of various algorithms for Eq.(17)

2) Compared with the other three algorithms, the identification results verify the fine searching capability and efficiency of the proposed algorithm. For systems with both known and unknown model structures, the proposed approach obtains the estimated parameters with a faster convergence rate and higher convergence precision.

3) In conclusion, the proposed MPSO algorithm is an efficient and promising approach for parameter identification of fractional-order systems.

This paper mainly studies fractional-order linear models with constant coefficients. In future work, we will study the parameter identification of fractional-order systems that contain nonlinear models and input signals with fractional differential components.

[1] Diethelm K. An efficient parallel algorithm for the numerical solution of fractional differential equations[J]. Fractional Calculus and Applied Analysis, 2011, 14(3): 475-490. DOI:10.2478/s13540-011-0029-1.

[2] Gepreel K A. Explicit Jacobi elliptic exact solutions for nonlinear partial fractional differential equations[J]. Advances in Difference Equations, 2014, 2014(1): 1-14. DOI:10.1186/1687-1847-2014-286.

[3] Laskin N. Fractional market dynamics[J]. Physica A: Statistical Mechanics and Its Applications, 2000, 287(3): 482-492. DOI:10.1016/s0378-4371(00)00387-3.

[4] Ionescu C, Machado J T, de Keyser R. Fractional-order impulse response of the respiratory system[J]. Computers & Mathematics with Applications, 2011, 62(3): 845-854. DOI:10.1016/j.camwa.2011.04.021.

[5] Badri V, Tavazoei M S. Fractional order control of thermal systems: Achievability of frequency-domain requirements[J]. Nonlinear Dynamics, 2014, 80(4): 1773-1783. DOI:10.1007/s11071-014-1394-1.

[6] Vikhram Y R, Srinivasan L. Decentralised wide-area fractional order damping controller for a large-scale power system[J]. IET Generation, Transmission & Distribution, 2016, 10(5): 1164-1178. DOI:10.1049/iet-gtd.2015.0747.

[7] Safarinejadian B, Asad M, Sadeghi M S. Simultaneous state estimation and parameter identification in linear fractional order systems using coloured measurement noise[J]. International Journal of Control, 2016, 89(11): 2277-2296.

[8] Taleb M A, Béthoux O, Godoy E. Identification of a PEMFC fractional order model[J]. International Journal of Hydrogen Energy, 2017, 42(2): 1499-1509. DOI:10.1016/j.ijhydene.2016.07.056.

[9] Storn R, Price K. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces[J]. Journal of Global Optimization, 1997, 11(4): 341-359.

[10] Tao C, Zhang Y, Jiang J J. Estimating system parameters from chaotic time series with synchronization optimized by a genetic algorithm[J]. Physical Review E, 2007, 76(2): 016209. DOI:10.1103/PhysRevE.76.016209.

[11] Kennedy J, Eberhart R. Particle swarm optimization[C]//Proceedings of ICNN'95 - International Conference on Neural Networks. Perth, Australia, 1995: 1942-1948. DOI:10.1109/icnn.1995.488968.

[12] Gupta R, Gairola S, Diwiedi S. Fractional order system identification and controller design using PSO[C]//2014 Innovative Applications of Computational Intelligence on Power, Energy and Controls with Their Impact on Humanity. Ghaziabad, India, 2015: 149-153. DOI:10.1109/cipech.2014.7019053.

[13] Hammar K, Djamah T, Bettayeb M. Fractional Hammerstein system identification using particle swarm optimization[C]//2015 7th IEEE International Conference on Modelling, Identification and Control. Sousse, Tunisia, 2015: 1-6. DOI:10.1109/icmic.2015.7409483.

[14] Shi Y, Eberhart R C. A modified particle swarm optimizer[C]//1998 IEEE International Conference on Evolutionary Computation. Anchorage, USA, 1998: 69-73. DOI:10.1109/icec.1998.699146.

[15] Koulinas G, Kotsikas L, Anagnostopoulos K. A particle swarm optimization based hyper-heuristic algorithm for the classic resource constrained project scheduling problem[J]. Information Sciences, 2014, 277: 680-693. DOI:10.1016/j.ins.2014.02.155.

[16] Gong Y J, Li J J, Zhou Y, et al. Genetic learning particle swarm optimization[J]. IEEE Trans Cybern, 2016, 46(10): 2277-2290. DOI:10.1109/TCYB.2015.2475174.

[17] Aghababa M P. Optimal design of fractional-order PID controller for five bar linkage robot using a new particle swarm optimization algorithm[J]. Soft Computing, 2016, 20(10): 4055-4067. DOI:10.1007/s00500-015-1741-2.

[18] He S, Wu Q H, Wen J Y, et al. A particle swarm optimizer with passive congregation[J]. Biosystems, 2004, 78(1/2/3): 135-147. DOI:10.1016/j.biosystems.2004.08.003.

[19] Rossikhin Y A, Shitikova M V. Application of fractional calculus for dynamic problems of solid mechanics: Novel trends and recent results[J]. Applied Mechanics Reviews, 2009, 63(1): 010801. DOI:10.1115/1.4000563.

[20] Hartley T T, Lorenzo C F. Fractional-order system identification based on continuous order-distributions[J]. Signal Processing, 2003, 83(11): 2287-2300. DOI:10.1016/s0165-1684(03)00182-8.

[21] Gaing Z L. A particle swarm optimization approach for optimum design of PID controller in AVR system[J]. IEEE Transactions on Energy Conversion, 2004, 19(2): 384-391. DOI:10.1109/tec.2003.821821.
