Single Image Super-Resolution Based on the Feature Sign Method

2015-10-14

LI Xiao-feng, ZENG Lei, XU Jin, and MA Shi-qi


(School of Communication and Information Engineering, University of Electronic Science and Technology of China, Chengdu 611731)

Recently, super-resolution methods based on sparse representation have become a research hotspot in signal processing. Computing the sparse coefficients quickly and accurately is the key to sparse representation algorithms. In this paper, we propose a feature sign method to compute the sparse coefficients in the search step. Inspired by compressed sensing theory, the method jointly learns two dictionaries to perform super-resolution. At each iteration, the feature sign algorithm guesses the signs of the sparse coefficients, which changes the non-convex problem into a convex one. This improves the accuracy of the obtained sparse coefficients and speeds up the algorithm. Simulation results show that the proposed scheme outperforms interpolation methods and classic sparse representation algorithms in both subjective inspection and quantitative evaluation.

feature sign method; image reconstruction; image resolution; sparse representation

Image super-resolution (SR) is a technique that estimates a high-resolution (HR) image from one or several low-resolution (LR) observed images; it is widely used in remote sensing, biometric identification, medical imaging, video surveillance, etc. There are three categories of super-resolution methods. Interpolation-based methods are simple but lack high-frequency components, while reconstruction-based methods degrade rapidly when the desired magnification factor is large.

Recently, the third category, learning-based methods, has been developed and has become the most active research area. In these methods, an HR image is predicted by learning the co-occurrence relationship between a set of LR example patches and the corresponding HR patches. Representative methods include the example-based method[1] and neighbor embedding[2]. Motivated by compressive sensing theory[3], Ref.[4] proposed an algorithm that uses the sparse representation of the input LR image to reconstruct the corresponding HR image with two jointly trained dictionaries. The method exploits the inherent structure of the data: patches are mapped onto the dictionaries and described by a few sparse coefficients, which proved highly effective for reconstructing the HR image.

There are many classic sparse representation methods. Greedy methods such as orthogonal matching pursuit (OMP)[5] attack the problem heuristically, fitting sparse models by greedy stepwise least squares, but they are often computationally slow. The least angle regression (LARS)[6] algorithm adds a new element to the active set of nonzero coefficients at each iteration by taking a step along a direction that makes equal angles with the vectors in the active set, but it becomes inefficient when the number of iterations is large. Preconditioned conjugate gradients (PCG)[7] uses a truncated Newton interior-point method, with the Newton steps computed by preconditioned conjugate gradients, to minimize a logarithmic barrier function; it achieves good results but is time-consuming.
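To make "greedy stepwise least squares" concrete, the following is a minimal NumPy sketch of OMP. It assumes a dictionary `D` with unit-norm columns and a fixed sparsity level `k`; the function name `omp` and the early-stopping rule are illustrative choices, not the configuration used for the comparisons in Section 4.

```python
import numpy as np

def omp(D, y, k):
    """Orthogonal matching pursuit: approximate y with at most k atoms (columns) of D.

    Assumption: the columns of D have unit norm. Returns a vector of length D.shape[1].
    """
    y = np.asarray(y, dtype=float)
    support = []                 # indices of the atoms selected so far
    coef = np.zeros(0)
    residual = y.copy()
    for _ in range(k):
        # Greedy choice: the atom most correlated with the current residual.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:         # no unselected atom correlates better: stop early
            break
        support.append(j)
        # Stepwise least squares: refit all selected atoms jointly.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    x = np.zeros(D.shape[1])
    x[support] = coef
    return x
```

Each iteration re-solves a least-squares problem on the whole support, which is the cost the paragraph above refers to when it calls greedy methods slow.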

In this paper, we propose a feature sign method for single image SR via sparse representation that overcomes the above drawbacks. By determining the signs of the sparse coefficients, the non-differentiable problem is turned into an unconstrained quadratic programming (QP) problem that can be solved easily. The method captures the sparse coefficients of data in an over-complete dictionary efficiently and rapidly. Simulation results demonstrate that it achieves better reconstruction performance, both qualitatively and quantitatively, than other classic SR methods.
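The step from a non-differentiable problem to a QP can be written out in a few lines. The derivation below uses generic notation chosen for illustration ($y$ for the signal, $D$ for the dictionary, $\lambda$ for the regularization weight, $\theta$ for the guessed sign vector, and hats for the restriction to the nonzero "active" coefficients); it is a sketch of the idea, not notation taken from the paper's own formulas.

```latex
\begin{aligned}
&\min_{\alpha}\; \|y - D\alpha\|_2^2 + \lambda\|\alpha\|_1
  && \text{non-differentiable wherever some } \alpha_i = 0\\
&\theta = \operatorname{sign}(\alpha) \text{ known} \;\Rightarrow\; \|\alpha\|_1 = \theta^{\mathsf T}\alpha
  && \text{(exact while the signs stay fixed)}\\
&\min_{\hat\alpha}\; \|y - \hat D\hat\alpha\|_2^2 + \lambda\hat\theta^{\mathsf T}\hat\alpha
  && \text{smooth, unconstrained QP}\\
&-2\hat D^{\mathsf T}(y - \hat D\hat\alpha) + \lambda\hat\theta = 0
  \;\Rightarrow\; \hat\alpha = (\hat D^{\mathsf T}\hat D)^{-1}\bigl(\hat D^{\mathsf T}y - \tfrac{\lambda}{2}\hat\theta\bigr)
\end{aligned}
```

The closed form assumes the active columns of $D$ are linearly independent, and it is only valid while the guessed signs remain consistent with the solution; this is why an iterative method of this kind has to re-check signs and optimality conditions after every solve.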

1 Single Image SR Using Sparse Representation

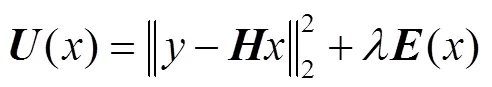

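The super-resolution image reconstruction problem is known to be an ill-posed inverse problem. Various approaches have been proposed to regularize it; the sparse representation approach of [4] imposes two constraints (priors):

1) Reconstruction constraint: the recovered HR image should be consistent with the observed LR image under the image observation model, i.e., blurring followed by downsampling;

2) Sparse representation prior: HR image patches can be sparsely represented over an appropriately chosen over-complete dictionary, and their sparse representations can be recovered from the LR observation.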

The SR algorithm performs patch-wise sparse recovery with jointly learned dictionaries. The input LR image $Y$ is first interpolated to the size of the desired HR image and then divided into a set of overlapping patches of a fixed size (5×5 pixels in the experiments of Section 4). For each LR image patch $y$, the same feature $Fy$ as in the training phase is extracted, and its sparse representation $\alpha$ is computed with respect to the low-resolution dictionary $D_l$. The representation $\alpha$ is then used to predict the underlying HR image patch (feature) $x = D_h\alpha$ with respect to the high-resolution dictionary $D_h$. The predicted HR patches are tiled together to reconstruct the HR image $X$.
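The patch bookkeeping in this pipeline can be sketched in NumPy as below. It assumes a single-channel image at least 5×5 in size, the 5×5 patches with a 3-pixel overlap used in Section 4, and plain averaging of overlapping pixels when tiling; the text only says the patches are "tiled together", so the averaging rule and the helper names `extract_patches` and `tile_patches` are assumptions. The sparse coding step that maps each LR patch to an HR patch is deliberately left out.

```python
import numpy as np

def grid_positions(length, size, step):
    """Top-left offsets along one axis; the last patch is snapped to the border."""
    pos = list(range(0, length - size + 1, step))
    if pos[-1] != length - size:
        pos.append(length - size)
    return pos

def extract_patches(img, size=5, overlap=3):
    """Cut img into overlapping size x size patches; also return their offsets."""
    step = size - overlap
    coords = [(r, c)
              for r in grid_positions(img.shape[0], size, step)
              for c in grid_positions(img.shape[1], size, step)]
    patches = np.stack([img[r:r + size, c:c + size] for r, c in coords])
    return patches, coords

def tile_patches(patches, coords, shape):
    """Put patches back in place. Assumption: overlapping pixels are averaged."""
    size = patches.shape[1]
    acc = np.zeros(shape)
    weight = np.zeros(shape)
    for p, (r, c) in zip(patches, coords):
        acc[r:r + size, c:c + size] += p
        weight[r:r + size, c:c + size] += 1
    return acc / weight
```

Feeding the extracted patches straight back into `tile_patches` reproduces the input image, which is a quick check that the grid covers every pixel; in the SR pipeline the patches passed to `tile_patches` would instead be the predicted HR patches.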

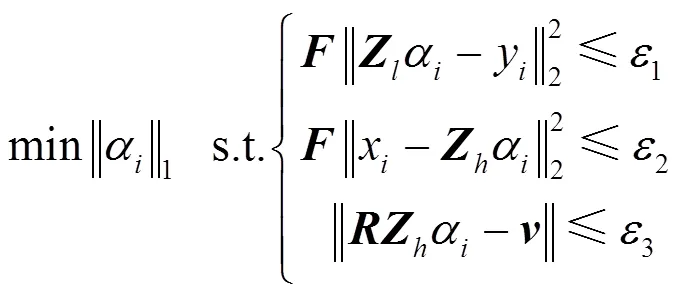

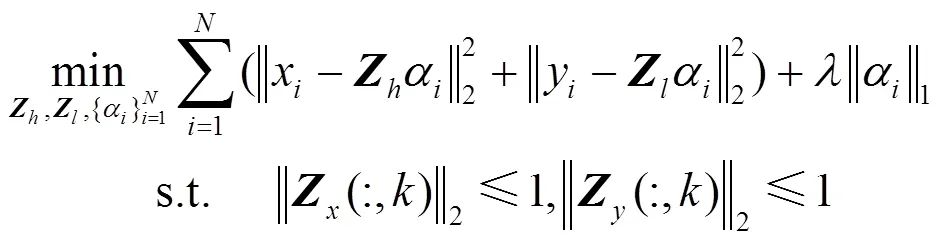

In this work, the pair of over-complete dictionaries $D_h$ and $D_l$ is jointly trained[9] using the K-SVD[10] method.

2 Feature Sign Method

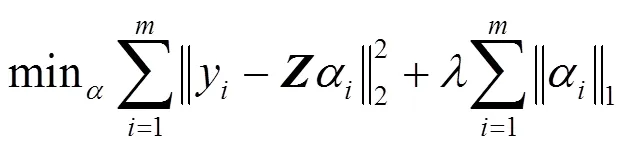

Solving formula (2) quickly and accurately is fundamental to sparse representation and determines the quality of the reconstructed HR image. It is a typical $\ell_1$-regularized least-squares problem, which can be solved by many sparse coding algorithms.

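For simplicity, it can be rewritten in vector form as

$$\min_{\alpha} f(\alpha) = \|y - D\alpha\|_2^2 + \lambda\|\alpha\|_1 \qquad (5)$$

where $y$ is the (feature) vector of the input patch, $D$ is the corresponding dictionary, and $\lambda$ is the regularization parameter. The term $\|\alpha\|_1$ is not differentiable where a coefficient is zero; however, if the sign $\theta_i = \operatorname{sign}(\alpha_i) \in \{-1, 0, 1\}$ of every coefficient is known, $|\alpha_i|$ can be replaced by $\theta_i\alpha_i$, and (5) becomes an unconstrained QP.

The signs can be determined in 4 steps:

3) Hence the optimality conditions for achieving the optimal value of $f(\alpha)$ are translated to

$$\frac{\partial\|y - D\alpha\|_2^2}{\partial\alpha_i} + \lambda\operatorname{sign}(\alpha_i) = 0 \ \ \text{if } \alpha_i \neq 0, \qquad \left|\frac{\partial\|y - D\alpha\|_2^2}{\partial\alpha_i}\right| \le \lambda \ \ \text{if } \alpha_i = 0;$$

4) Consider how to select the optimal sub-gradient $\partial|\alpha_i|$ when the optimality conditions are violated. Consider the case where $\alpha_i = 0$ and $|\partial\|y - D\alpha\|_2^2/\partial\alpha_i| > \lambda$. Suppose $\partial\|y - D\alpha\|_2^2/\partial\alpha_i > \lambda$, which means $\partial f/\partial\alpha_i > 0$ regardless of the sign of $\alpha_i$. To decrease $f$, $\alpha_i$ must be decreased. Since $\alpha_i$ is currently zero, the very first decrease changes it to a negative number, so set $\theta_i = -1$. Similarly, if $\partial\|y - D\alpha\|_2^2/\partial\alpha_i < -\lambda$, then set $\theta_i = 1$.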
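The optimality conditions of step 3 and the sign rule of step 4 can be checked numerically as in the NumPy sketch below, written for the objective $\|y - D\alpha\|_2^2 + \lambda\|\alpha\|_1$ with `D`, `y`, `alpha`, and `lam` standing for $D$, $y$, $\alpha$, and $\lambda$. The helper names and the numerical tolerance `tol` are hypothetical, added only for illustration.

```python
import numpy as np

def smooth_grad(D, y, alpha):
    """Gradient of the smooth term ||y - D alpha||_2^2 with respect to alpha."""
    return -2.0 * D.T @ (y - D @ alpha)

def violates_optimality(D, y, alpha, lam, tol=1e-10):
    """Boolean mask of the coefficients that break the step-3 conditions.

    tol is a hypothetical floating-point tolerance.
    """
    g = smooth_grad(D, y, alpha)
    nonzero = alpha != 0
    bad = np.zeros(alpha.shape, dtype=bool)
    # Nonzero coefficients: gradient + lam * sign(alpha_i) must vanish.
    bad[nonzero] = np.abs(g[nonzero] + lam * np.sign(alpha[nonzero])) > tol
    # Zero coefficients: |gradient| must not exceed lam.
    bad[~nonzero] = np.abs(g[~nonzero]) > lam + tol
    return bad

def sign_for_zero_coefficient(g_i, lam):
    """Step-4 rule: the sign theta_i to assign to a zero coefficient."""
    if g_i > lam:
        return -1   # df/dalpha_i > 0 on both sides of zero: move negative
    if g_i < -lam:
        return 1    # df/dalpha_i < 0 on both sides of zero: move positive
    return 0        # condition satisfied: keep the coefficient at zero
```

With an identity dictionary the minimizer is known in closed form (soft thresholding at $\lambda/2$), so for `y = [3.0, 0.1]` and `lam = 1.0` the point `[2.5, 0]` passes both checks, while at the origin only the first coefficient is flagged and receives $\theta_1 = 1$.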

3 Computing the Sparse Coefficients

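The algorithmic procedure for learning the coefficients with the feature sign method is described in the following 5 steps.

1) Initialize: set $\alpha = 0$, $\theta = 0$, and the active set to empty, where $\theta_i \in \{-1, 0, 1\}$ denotes $\operatorname{sign}(\alpha_i)$;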

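2) Activate: From the zero coefficients of $\alpha$, select $i = \arg\max_i \left|\partial\|y - D\alpha\|_2^2/\partial\alpha_i\right|$. Activate $\alpha_i$ (add $i$ to the active set) only if it locally improves the objective (6);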

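3) Feature sign step: Let $\hat D$ be the submatrix of $D$ that contains only the columns corresponding to the active set, and let $\hat\alpha$ and $\hat\theta$ be the subvectors of $\alpha$ and $\theta$ corresponding to the active set. Compute the analytical solution of the resulting unconstrained QP $\min_{\hat\alpha} \|y - \hat D\hat\alpha\|_2^2 + \lambda\hat\theta^{\mathrm T}\hat\alpha$:

$$\hat\alpha_{\text{new}} = (\hat D^{\mathrm T}\hat D)^{-1}\left(\hat D^{\mathrm T}y - \lambda\hat\theta/2\right) \qquad (8)$$

Perform a discrete line search on the closed line segment from $\hat\alpha$ to $\hat\alpha_{\text{new}}$: check the objective value at $\hat\alpha_{\text{new}}$ and at all points where any coefficient changes sign, and update $\hat\alpha$ (and the corresponding entries in $\alpha$) to the point with the lowest objective value. Remove zero coefficients of $\hat\alpha$ from the active set and update $\theta = \operatorname{sign}(\alpha)$;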

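4) Check the optimality conditions based on formula (6): if the condition for nonzero coefficients is violated, return to step 3 without any new activation; otherwise, if the condition for zero coefficients is violated, return to step 2; otherwise, go to step 5;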

5) Output: the optimal sparse coefficients $\alpha$.
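The five steps can be assembled into a compact solver. The sketch below is an illustrative NumPy implementation of feature-sign search for $\min_\alpha \|y - D\alpha\|_2^2 + \lambda\|\alpha\|_1$, not the authors' MATLAB code. The iteration cap `max_iter`, the tolerance `tol`, and the use of `np.linalg.lstsq` (so that a singular active-set Gram matrix does not raise an error) are assumptions added for robustness.

```python
import numpy as np

def feature_sign(D, y, lam, max_iter=500, tol=1e-8):
    """Illustrative feature-sign search for min_a ||y - D a||_2^2 + lam * ||a||_1."""
    n = D.shape[1]
    DtD, Dty = D.T @ D, D.T @ y
    alpha = np.zeros(n)                  # step 1: all coefficients start at zero
    theta = np.zeros(n)                  # guessed signs in {-1, 0, 1}
    active = np.zeros(n, dtype=bool)     # step 1: empty active set

    def grad(a):
        # Gradient of the smooth term ||y - D a||_2^2.
        return 2.0 * (DtD @ a - Dty)

    def objective_on(idx, p):
        # True (non-smooth) objective when only entries idx are nonzero, set to p.
        a = np.zeros(n)
        a[idx] = p
        r = y - D @ a
        return r @ r + lam * np.abs(p).sum()

    it = 0  # assumption: a hard cap on feature-sign steps guards against stalling
    while it < max_iter:
        # Step 2 (Activate): the zero coefficient with the largest |gradient|.
        g = grad(alpha)
        score = np.where(active, -np.inf, np.abs(g))
        i = int(np.argmax(score))
        if score[i] <= lam + tol:
            break                        # zero-coefficient conditions hold: optimal
        theta[i] = -np.sign(g[i])        # sign rule for a zero coefficient
        active[i] = True
        while it < max_iter:
            it += 1
            # Step 3 (Feature sign): closed-form QP solution on the active set.
            idx = np.flatnonzero(active)
            old = alpha[idx]
            new = np.linalg.lstsq(DtD[np.ix_(idx, idx)],
                                  Dty[idx] - lam * theta[idx] / 2, rcond=None)[0]
            # Discrete line search: the endpoint plus every zero crossing.
            cands = [new]
            for j in np.flatnonzero(old * new < 0):
                t = old[j] / (old[j] - new[j])
                p = old + t * (new - old)
                p[j] = 0.0               # coefficient j is exactly zero here
                cands.append(p)
            best = min(cands, key=lambda p: objective_on(idx, p))
            alpha[idx] = best
            active[idx[best == 0]] = False   # drop zeroed coefficients
            theta = np.sign(alpha)
            # Step 4: nonzero-coefficient conditions; otherwise repeat step 3.
            nz = alpha != 0
            if np.all(np.abs(grad(alpha)[nz] + lam * theta[nz]) <= tol):
                break
    return alpha                         # step 5: output
```

With an orthonormal dictionary the problem decouples and the exact minimizer is soft thresholding at $\lambda/2$, which makes a convenient sanity check: for `D = np.eye(4)`, `y = np.array([3.0, -0.2, -1.5, 0.4])`, and `lam = 1.0`, the sketch returns `[2.5, 0, -1, 0]` up to rounding.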

4 Experimental Results

In this section, simulation results are given to illustrate the performance of our scheme. The simulations and comparisons are carried out with MATLAB implementations. All experiments are executed on a 2.33 GHz Intel Core 2 Quad Q8200 processor with 2 GB of memory under Windows XP.

We randomly sample 20,000 HR/LR patch pairs from the training images to learn the over-complete dictionaries. The regularization parameter $\lambda$ is set to 0.15 and the dictionary size to 1024 in all experiments; these values were found to give the best balance between computational complexity and image quality. The input LR images are magnified by a factor of 3. The input patches are 5×5 pixels with an overlap of 3 pixels. For color images, the SR algorithm is applied only to the Y (intensity) channel, while the Cb and Cr chromatic channels are simply interpolated by bicubic interpolation. The three channels are then combined to form the final SR image. The results of the various methods are evaluated both visually and quantitatively, using the root-mean-square error (RMSE) and the structural similarity (SSIM) index[11].
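For reference, the two metrics can be computed as in the sketch below, assuming 8-bit images (`data_range=255`) compared on the same channel. RMSE is exact. `global_ssim` is a deliberate simplification: it evaluates the SSIM formula of [11], with its constants $K_1 = 0.01$ and $K_2 = 0.03$, over a single window covering the whole image, whereas the standard index averages it over local Gaussian-weighted 11×11 windows, so it will not reproduce the SSIM values reported below.

```python
import numpy as np

def rmse(ref, est):
    """Root-mean-square error between two images of equal shape."""
    diff = np.asarray(ref, dtype=float) - np.asarray(est, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def global_ssim(ref, est, data_range=255.0):
    """SSIM over one global window (simplification of the windowed index in [11])."""
    x = np.asarray(ref, dtype=float)
    y = np.asarray(est, dtype=float)
    c1 = (0.01 * data_range) ** 2        # stabilizing constant, K1 = 0.01
    c2 = (0.03 * data_range) ** 2        # stabilizing constant, K2 = 0.03
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2)) /
                 ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
```

Lower RMSE and higher SSIM (at most 1, reached for identical images) indicate a better reconstruction.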

4.1 Experimental results on image SR

In this part, we compare the proposed method with the classic bicubic interpolation method and with the sparse representation methods OMP[5], LARS[6], and PCG[7].

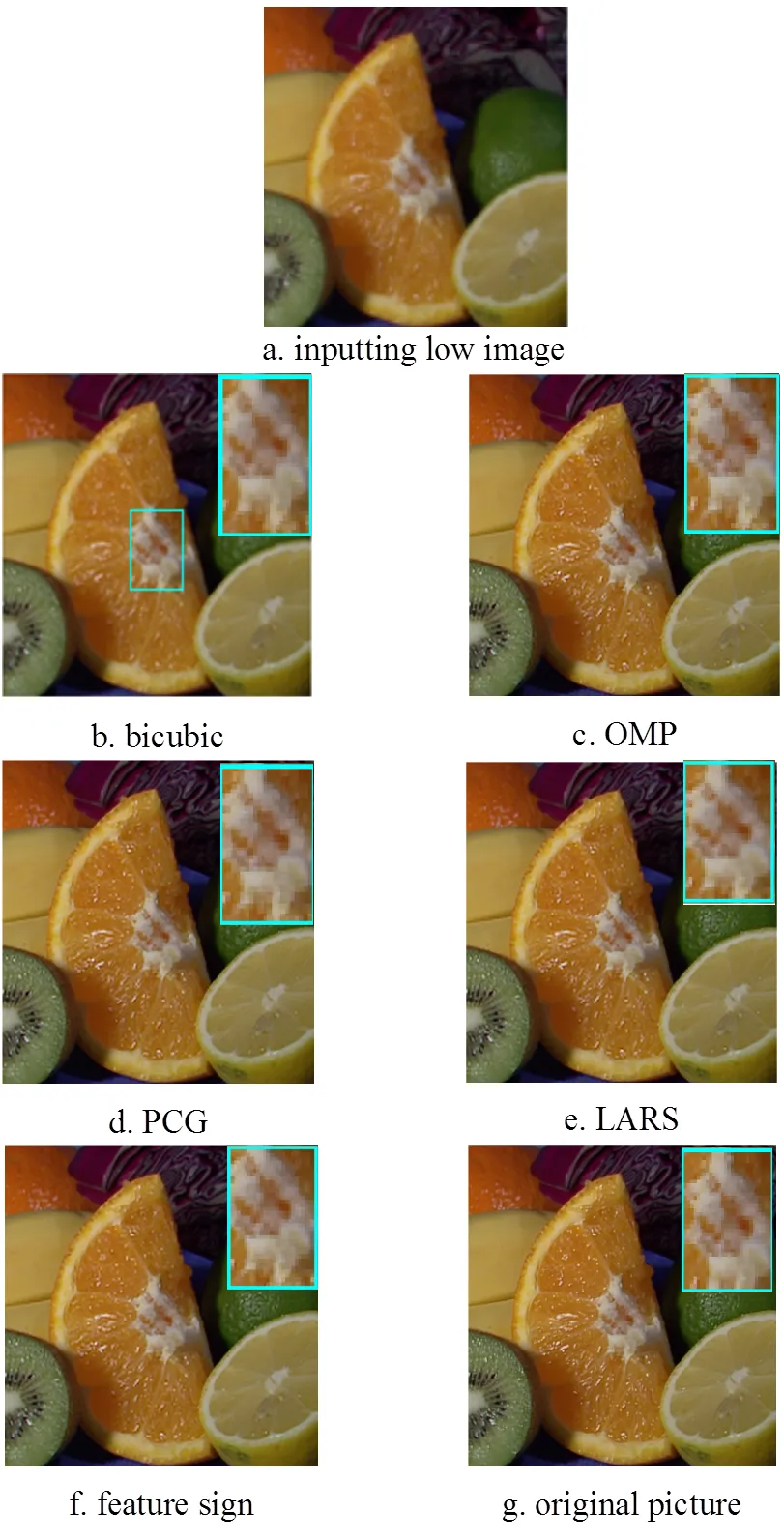

The bicubic reconstructions are quite blurry because they lack high frequencies, while the OMP, LARS, and PCG images lack fine details. Edge blur and sawtooth (jagged) artifacts are much more obvious in the bicubic, OMP, LARS, and PCG results, whereas the feature sign images are sharper and clearer. Specifically, as Fig.1 shows, the line of the hat in the Lena image is smoother and more distinct in the feature sign reconstruction than in the others. In the magnified white part of the orange in Fig.2, the proposed method gives the clearest result. For the water ripples in the Yacht image (Fig.3), the bicubic result is quite blurry, the LARS, OMP, and PCG results show blocking artifacts, and the feature sign result shows the most discernible detail.

Fig.2 Reconstructed HR images (scaling factor 3) of Fruit and the magnified core part by different methods.

a. input LR image; b. bicubic; c. OMP; d. PCG; e. LARS; f. feature sign; g. original image

Fig.3 Reconstructed HR images (scaling factor 3) of Yacht and the magnified water ripple by different methods.

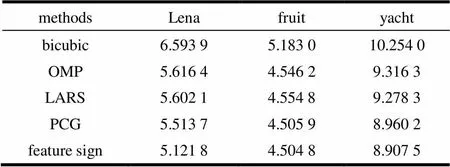

Table 1 compares the RMSEs of the images reconstructed by the different methods for different input images. The proposed algorithm achieves a lower RMSE than bicubic, LARS, OMP, and PCG. Taking Lena as an example, the RMSE reductions of feature sign relative to bicubic, LARS, OMP, and PCG are 1.4721, 0.4946, 0.4803, and 0.3919, respectively.

Table 2 lists the reconstruction time of each algorithm. The feature sign method achieves the best quality with almost the shortest running time: it takes roughly half the time of LARS and about one percent of the time of PCG.

Table 2 Reconstruction time of each method (s)

As Table 3 shows, the feature sign method yields the highest SSIM, which indicates that it restores the image best. Furthermore, the feature sign method improves the efficiency of sparse representation in super-resolution.

Table 3 SSIM of the reconstructed HR images

4.2 Experimental results on 100 test images

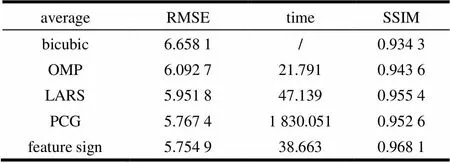

To test the proposed method more comprehensively, each algorithm is applied to 100 test images downloaded from an international open-source image library. The average RMSE, SSIM, and running time are listed in Table 4.

Table 4 Average RMSE, SSIM, and time over the 100 test images

Table 4 shows that the feature sign method yields the lowest RMSE and the highest SSIM while requiring much less time, which demonstrates the quality of the proposed method for image SR.

5 Conclusion

This paper proposes an efficient sparse representation method, called feature sign, for single image super-resolution. The method guesses the signs of the sparse coefficients and thereby turns the non-differentiable $\ell_1$-norm problem into a QP. Simulation results demonstrate the advantages of the proposed scheme over existing schemes. Images reconstructed by bicubic interpolation show edge blur, OMP results show severe jagged artifacts, LARS results show some blocking effects, and PCG is time-consuming, while the feature sign reconstructions are sharp and show better visual quality in details. Both RMSE and SSIM confirm the advantage of the proposed method over the other methods.

[1] FREEMAN W T, PASZTOR E C, CARMICHAEL O T. Learning low-level vision[C]//Proceedings of the Seventh IEEE International Conference on Computer Vision. Los Alamitos, CA, USA: IEEE Comput Soc, 1999.

[2] CHANG H, YEUNG D Y, XIONG Y. Super-resolution through neighbor embedding[C]//Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Los Alamitos, CA, USA: IEEE Comput Soc, 2004: 275-282.

[3] CANDES E J. Compressive sampling[C]//25th International Congress of Mathematicians, ICM 2006. United States: Asociacion International Congress of Mathematicians, 2006: 1433-1452.

[4] YANG J, WRIGHT J, HUANG T S, et al. Image super-resolution via sparse representation[J]. IEEE Transactions on Image Processing, 2010, 19(11): 2861-2873.

[5] DAVIS G, MALLAT S, AVELLANEDA M. Adaptive greedy approximation[J]. Constructive Approximation, 1997, 13(1): 57-98.

[6] EFRON B, HASTIE T, JOHNSTONE I, et al. Least angle regression[J]. Annals of Statistics, 2004, 32(2): 407-499.

[7] KOH K, KIM S J, BOYD S. An interior-point method for large-scale $\ell_1$-regularized logistic regression[J]. Journal of Machine Learning Research, 2007, 8: 1519-1555.

[8] DONOHO D L. For most large underdetermined systems of linear equations, the minimal $\ell_1$-norm solution is also the sparsest solution[J]. Communications on Pure and Applied Mathematics, 2006, 59(6): 797-829.

[9] ZENG L, LI X F, XU J. An improved joint dictionary training method for single image super resolution[J]. COMPEL-The International Journal for Computation and Mathematics in Electrical and Electronic Engineering, 2013, 32(2): 721-727.

[10] AHARON M, ELAD M, BRUCKSTEIN A. K-SVD: an algorithm for designing over-complete dictionaries for sparse representation[J]. IEEE Transactions on Signal Processing, 2006, 54(11): 4311-4322.

[11] WANG Z, BOVIK A C, SHEIKH H R, et al. Image quality assessment: From error visibility to structural similarity[J]. IEEE Transactions on Image Processing, 2004, 13(4): 600-612.

Editor: ZHANG Jun


CLC number: TP301.6

Document code: A


Received date: 2013-07-10; Revised date: 2014-09-05

Supported by the National Natural Science Foundation of China (61075013); China Postdoctoral Science Foundation (20100471671).

DOI: 10.3969/j.issn.1001-0548.2015.01.003

Biography: LI Xiao-feng (born 1963), male, Ph.D., professor at UESTC. Research interests: image processing and communication systems.
